The UCLA dataset has been the benchmark for DT recognition for several years, even though a much larger and more diverse database (the DynTex database) exists.

In spite of many dataset efforts for human action recognition, current computer vision algorithms are still severely limited in terms of the variability and complexity of the actions that they can recognize. This is in part due to the simplicity of current benchmarks, which mostly focus on simple actions and movements occurring in manually trimmed videos. We introduce ActivityNet, a new large-scale video benchmark for human activity understanding.

- Memorability scores for all 3,412 objects and 850 images from the PASCAL-S dataset

- Memorability maps corresponding to each of the 850 PASCAL-S images

- All original 850 PASCAL-S images and object segmentations used for the experiment

Two new sketch datasets for Stroke Authorship Recognition collected from experienced artists.

For details, kindly refer to the publication titled SAR: Stroke Authorship Recognition.

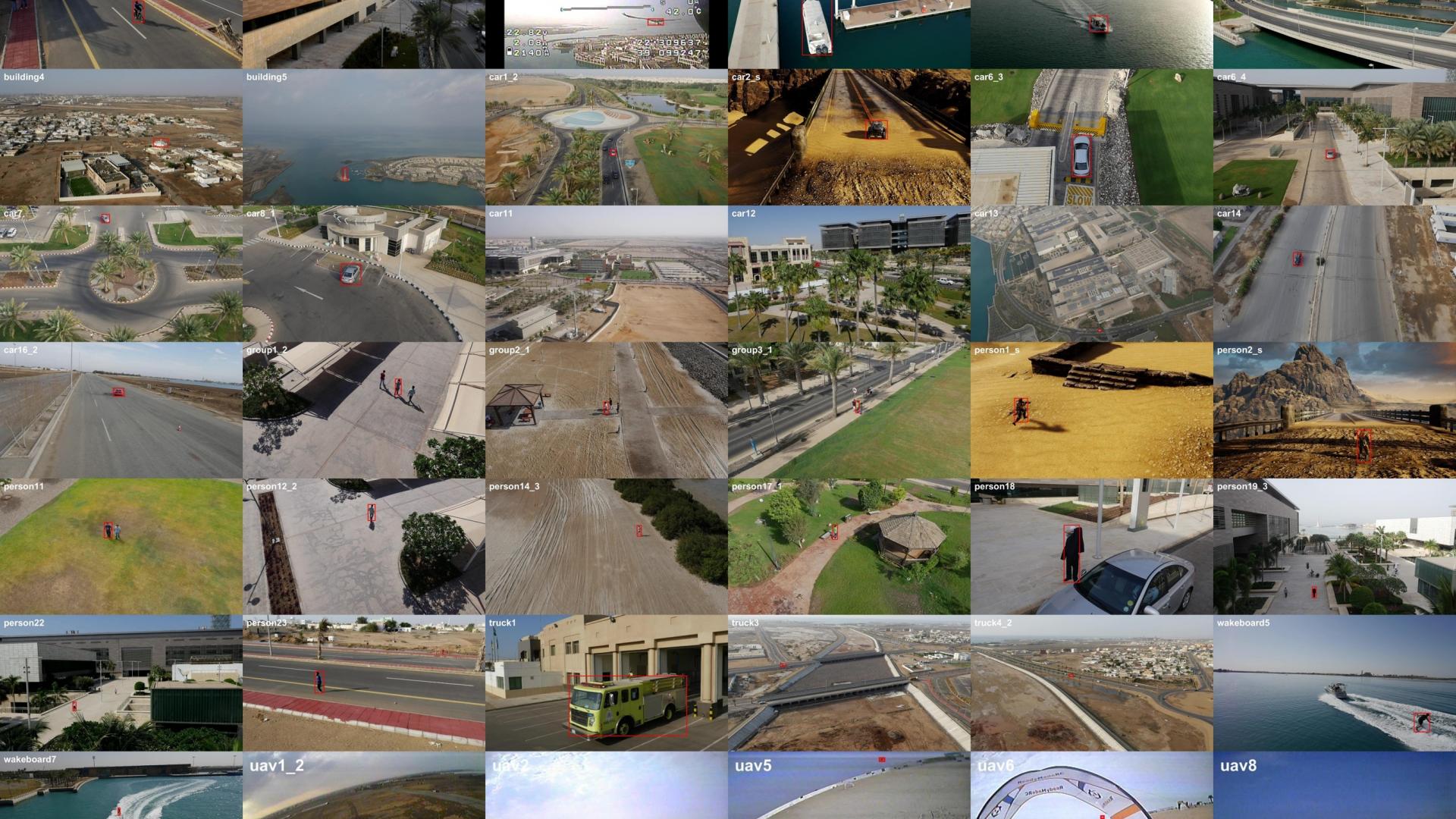

Video captured from low-altitude UAVs is inherently different from video in popular tracking datasets like OTB50, OTB100, VOT2014, VOT2015, TC128, and ALOV300++. Therefore, we propose a new dataset (UAV123) with sequences from an aerial viewpoint, a subset of which is meant for long-term aerial tracking (UAV20L). Our new UAV123 dataset contains a total of 123 video sequences and more than 110K frames, making it the second-largest object tracking dataset after ALOV300++. All sequences are fully annotated with upright bounding boxes.

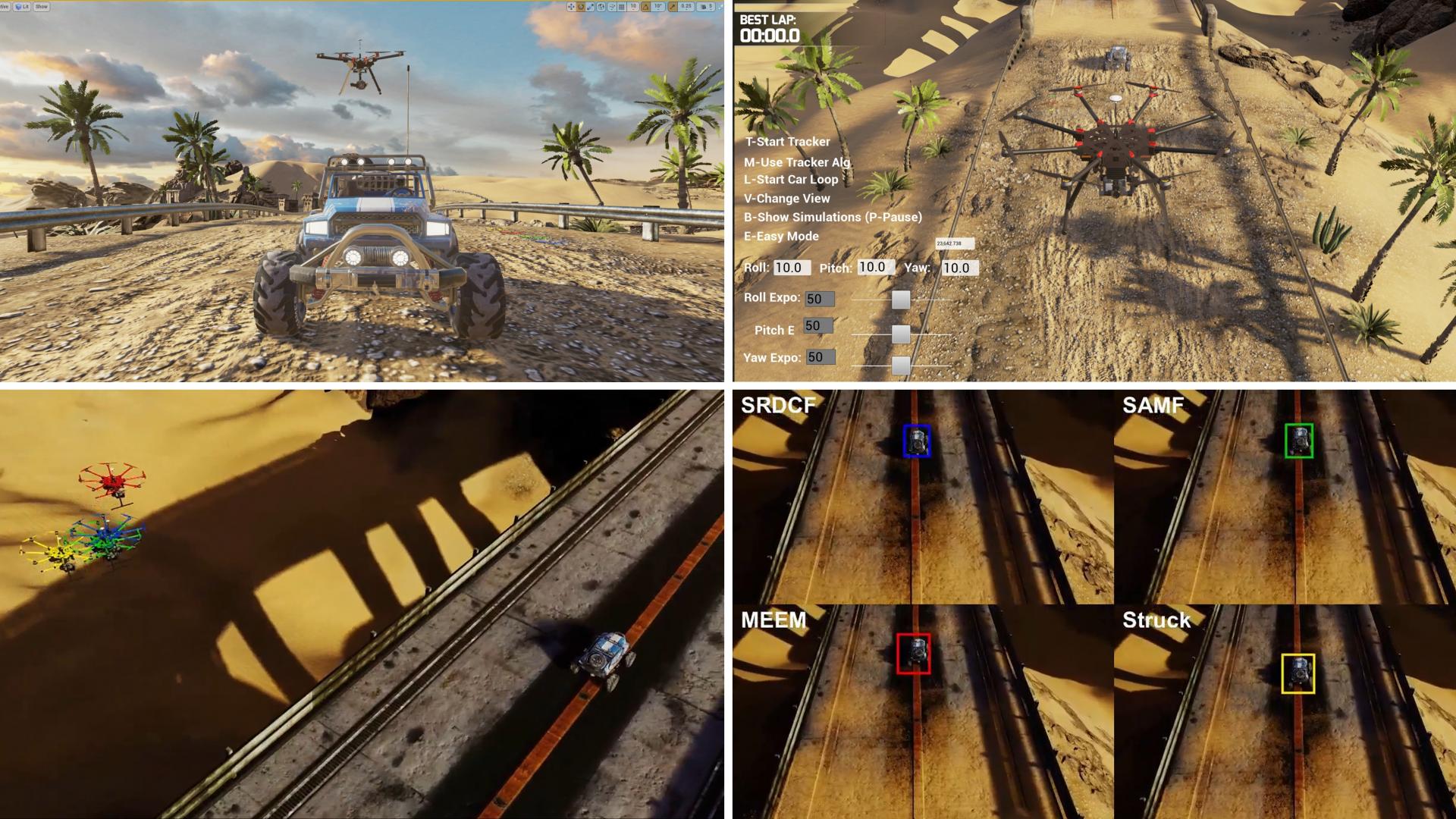

UAV Simulator: You can download the complete project binaries including a free demo world here.

In this work, we introduce SoccerNet, a benchmark for action spotting in soccer videos. The dataset is composed of 500 complete soccer games from six main European leagues, covering three seasons from 2014 to 2017 and a total duration of 764 hours. A total of 6,637 temporal annotations are automatically parsed from online match reports at a one-minute resolution for three main classes of events (Goal, Yellow/Red Card, and Substitution). As such, the dataset is easily scalable.
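To give a feel for how sparse these annotations are, the figures quoted above can be turned into rough densities. The following is only a back-of-the-envelope sketch: the function name `annotation_density` is hypothetical and not part of any SoccerNet tooling, and the inputs are just the totals stated in the description.

```python
# Totals quoted in the SoccerNet description above.
NUM_GAMES = 500
TOTAL_HOURS = 764
NUM_ANNOTATIONS = 6637


def annotation_density(num_annotations, num_games, total_hours):
    """Return (events per game, events per hour, average hours per game).

    Hypothetical helper for illustration only; it does nothing more than
    divide the dataset-level totals.
    """
    per_game = num_annotations / num_games
    per_hour = num_annotations / total_hours
    hours_per_game = total_hours / num_games
    return per_game, per_hour, hours_per_game


per_game, per_hour, hours_per_game = annotation_density(
    NUM_ANNOTATIONS, NUM_GAMES, TOTAL_HOURS
)
print(f"{per_game:.2f} events per game")
print(f"{per_hour:.2f} events per hour of video")
print(f"{hours_per_game:.2f} hours of video per game on average")
```

On these totals, a game carries roughly 13 annotated events and an hour of footage fewer than 9, which is why spotting them in untrimmed video is a sparse-event problem.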

Despite the numerous developments in object tracking, further improvement of current tracking algorithms is limited by small and mostly saturated datasets. As a matter of fact, data-hungry trackers based on deep learning currently rely on object detection datasets due to the scarcity of dedicated large-scale tracking datasets. In this work, we present TrackingNet, the first large-scale dataset and benchmark for object tracking in the wild. We provide more than 30K videos with more than 14 million dense bounding box annotations.