Different variants of first-order SGD methods have been the popular optimization methods used in practical machine learning applications, particularly for deep learning tasks. However their well-acknowledged shortcomings including relatively slow convergence, sensitivity to hyper-parameter tuning, stagnation due to ill-conditioning, and difficulties in escaping saddle points in non-convex problems, make them unattractive at scale. Second-order methods, which consider the curvature of the loss landscape, promise to remedy these shortcomings. The dimension-independent convergence rate of second-order methods and their robustness with respect to the condition number of the Hessian make them appealing for large scale training tasks and related problems.

The main computational obstacles to the successful development of second-order, Newton-type, methods, in models with large data and parameter dimensions, are the operations on the Hessian. While subsampling the Hessian can reduce the cost of managing large data dimensions, the storage of the formally dense matrix and the solution of the Newton system are prohibitive for large parameter dimensions. The key to successful Newton methods is to approximate the Hessian in a way to make its computation, storage, and inversion manageable.

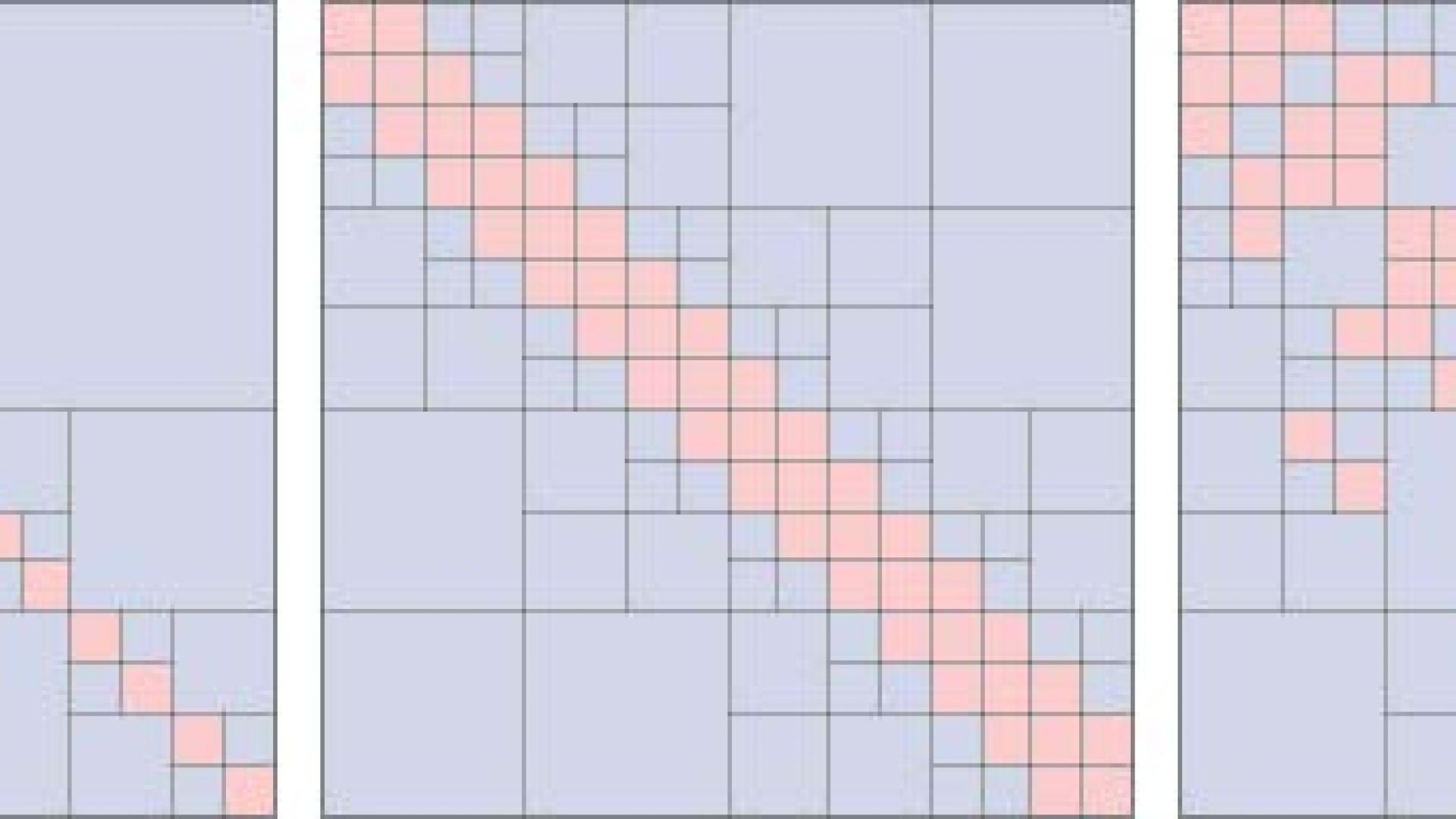

In this work, we explore a hierarchical matrix approximation of the Hessian that allows an accuracy-tunable linear-complexity memory footprint to be used. The hierarchical representation allows the construction, update, and matrix-vector multiplication operations on the Hessian to be done in linear or log-linear complexity, and provide support for efficient Newton-Krylov optimization methods. We plan to demonstrate the effectiveness of the hierarchical matrix representation on a number of representative NN training problems, and consider the use of the Hessian in assessing the sensitivity of the converged solution.

Investigator: