Machine learning models gather momentum

New methods for training machine learning models are quicker and more accurate than approaches previously considered state of the art.


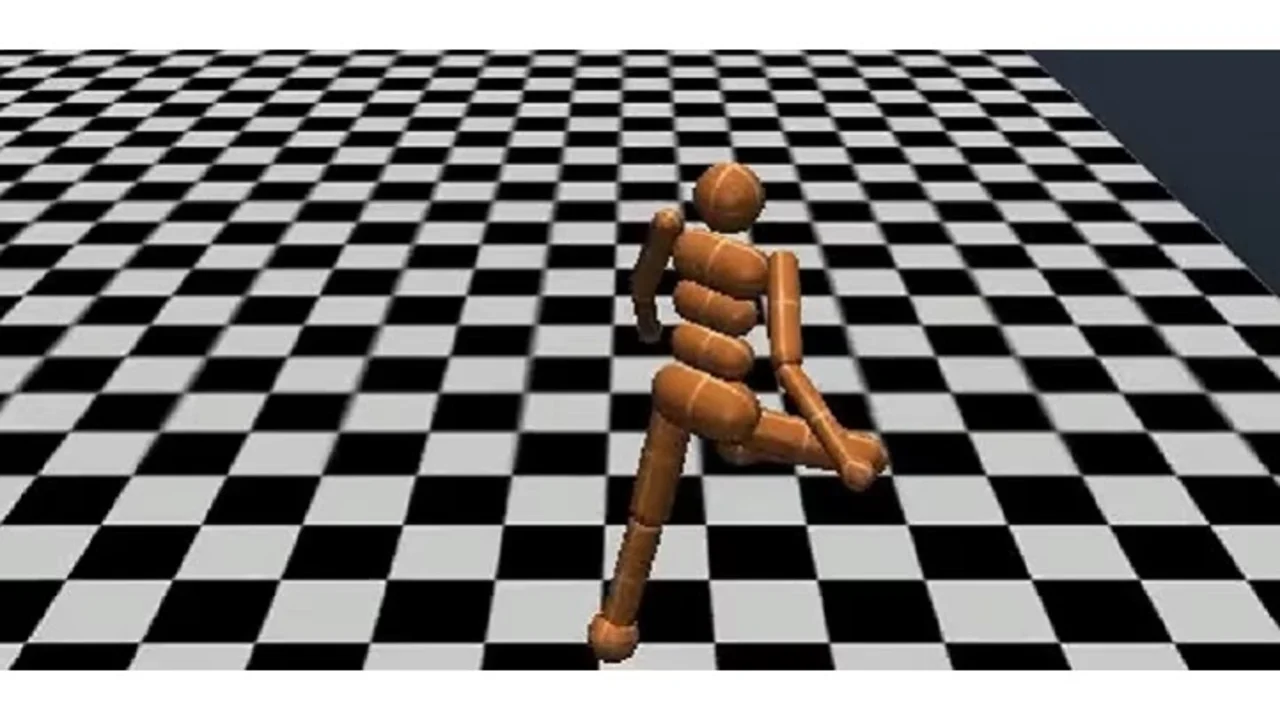

A fast, accurate and more rigorous method of training algorithms in reinforcement learning tasks has been developed by KAUST researchers. Improving on current best-practice optimization methods, the new approach has broad applications, from training robots to walk, to developing video games that can outsmart players.

Similar to the learning process in animals and humans, reinforcement learning works by providing reward feedback when an algorithm is close to achieving a specified goal. The closer the algorithm gets to performing a task correctly, the more it is rewarded.

While many machine learning methods, such as stochastic gradient descent, use derivative information to plot the fastest path to convergence, this information is not available in reinforcement learning. This makes it more challenging to train algorithms.

As a result, reinforcement learning methods tend to rely on guesswork. “Much of the work on algorithms in this area are heuristics; there is no mathematical guarantee that they will work,” says Peter Richtárik, computer scientist at KAUST’s Visual Computing Center.

To tackle this issue, Richtárik developed a derivative-free algorithm that was able to reach an optimal value using just one parameter [1]. Known as simplified direct search (SDS), the algorithm provided a quicker and more reliable alternative to the standard direct search method.

“It was the best approach at the time, but we have since improved it,” says Richtárik.

With computer scientist El Houcine Bergou, Richtárik applied randomness to the SDS algorithm, which narrowed the process further. The modified approach, called the stochastic three points method, compares the objective function at three points (the current one and two trial points along a random direction) and pinpoints the best of them for proceeding to the next step in the training process.

“This method is extremely simple to use in practice,” says Bergou. “Its analysis is also simple compared to state-of-the-art similar methods.”
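To give a feel for that simplicity, here is a minimal Python sketch of a three-points style search. It is an illustration under stated assumptions, not the researchers' implementation: the names `stp_minimize`, `step0` and `sphere` are hypothetical, the random directions are drawn uniformly from the unit sphere, and the step size is assumed to shrink as `step0 / sqrt(k + 1)`. At each iteration it evaluates the objective at the current point and at one step forward and one step backward along a random direction, then keeps the best of the three. No derivatives are used.

```python
import math
import random


def stp_minimize(f, x0, step0=1.0, iters=2000, seed=0):
    """Sketch of a stochastic three points style search (derivative-free).

    Assumed details, not taken from the article: directions are uniform
    on the unit sphere and the step size decays as step0 / sqrt(k + 1).
    """
    rng = random.Random(seed)
    x = list(x0)
    fx = f(x)
    for k in range(iters):
        # Draw a random direction and normalize it to unit length.
        s = [rng.gauss(0.0, 1.0) for _ in x]
        norm = math.sqrt(sum(v * v for v in s)) or 1.0
        s = [v / norm for v in s]
        alpha = step0 / math.sqrt(k + 1)
        # Two trial points: one step forward, one step backward.
        plus = [xi + alpha * si for xi, si in zip(x, s)]
        minus = [xi - alpha * si for xi, si in zip(x, s)]
        # Keep whichever of the three points has the lowest objective value.
        candidates = ((x, fx), (plus, f(plus)), (minus, f(minus)))
        x, fx = min(candidates, key=lambda pair: pair[1])
    return x, fx


def sphere(x):
    """Toy objective standing in for a negative reward: sum of squares."""
    return sum(v * v for v in x)


best_x, best_f = stp_minimize(sphere, [3.0, -2.0, 1.0])
print(best_f)
```

Because the current point is always one of the three candidates, the objective value never gets worse from one iteration to the next. In a reinforcement learning setting, `f` would be replaced by something like the negative of the reward obtained with a given set of policy parameters.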
