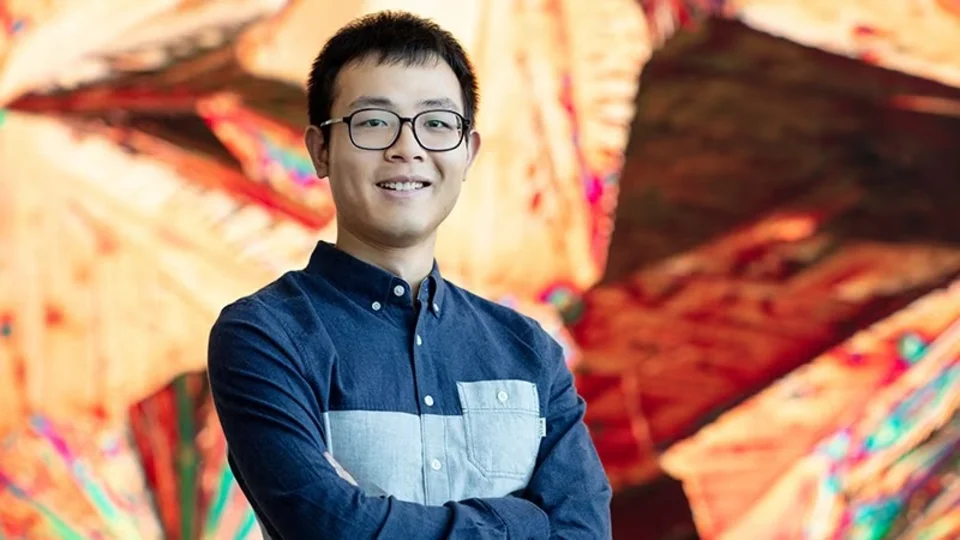

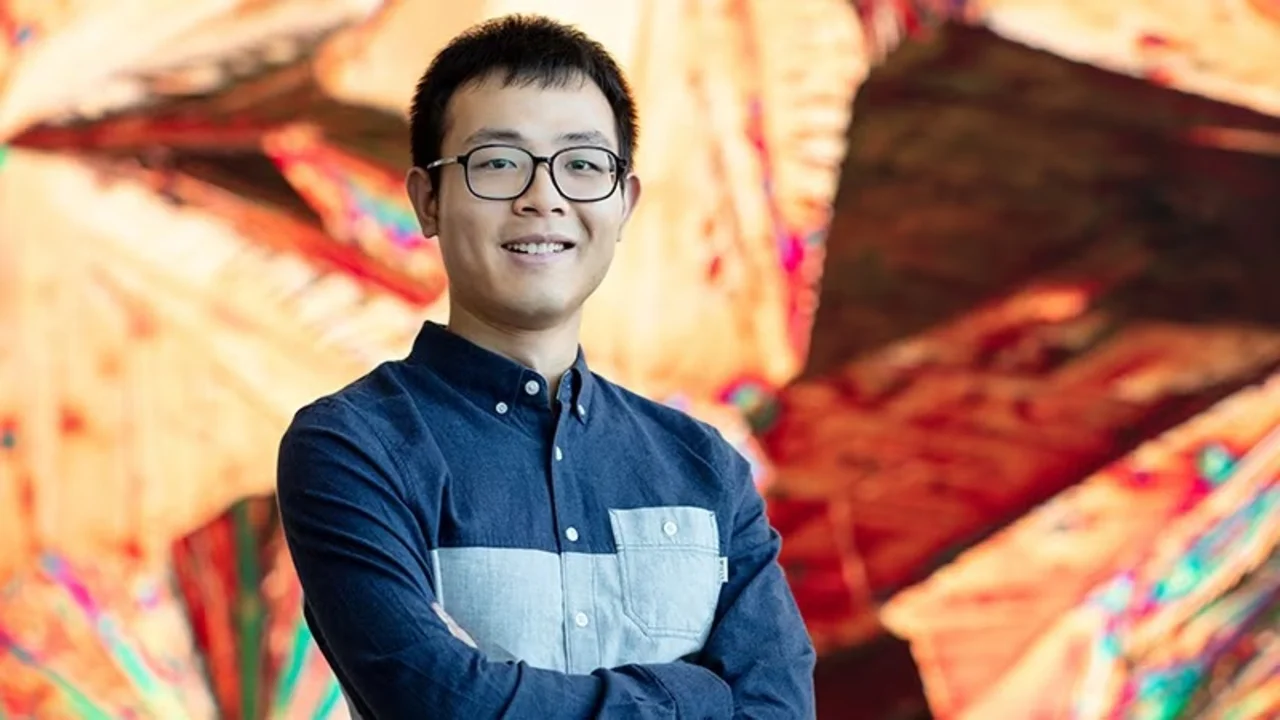

KAUST Ph.D. student Jinhui Xiong wins best paper award

KAUST computer science Ph.D. student Jinhui Xiong recently won the best paper award at the 24th International Symposium on Vision, Modeling, and Visualization. The symposium took place from September 30 to October 2, 2019, at the University of Rostock, Germany, and provided the opportunity for researchers to discuss a wide range of topics in computer science, including computer graphics, vision, visualization and visual analytics.

By David Murphy

Xiong's winning paper, titled "Stochastic Convolutional Sparse Coding," presented research that Xiong carried out with KAUST Professors Peter Richtárik and Wolfgang Heidrich (director of the KAUST Visual Computing Center and leader of the new KAUST AI Initiative) on how natural images can be represented by a set of filters with a small spatial size. In the paper, the three researchers propose a novel stochastic spatial-domain solver for Convolutional Sparse Coding (CSC) that introduces a randomized subsampling strategy while the sparse codes are being learned.

"I am really excited to win this first award during my Ph.D. program," Xiong said. "In this paper, we present a new randomized algorithm to learn the dictionary from thousands of sample images. In general, it outperforms the state-of-the-art solutions in terms of execution time, [and it] offers an improved representation. Modern methods for CSC usually employ Fourier-domain solvers to speed up the convolution operators; however, this approach is not without shortcomings."

"In our work, a randomized subsampling strategy is introduced during the sparse code learning stage. Afterwards, we extend the proposed strategy in conjunction with online learning, scaling the CSC model up to very large sample sizes," he continued. "In both cases, we show experimentally that the proposed subsampling strategy, with a reasonable selection of the subsampling rate, outperforms the state-of-the-art frequency-domain solvers in terms of execution time without losing the learning quality."
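The following minimal Python sketch illustrates what randomized subsampling during sparse code learning can look like. It covers only the code-learning stage and rests on assumptions of our own, not the paper's: a 1-D signal, fixed filters, and a plain proximal-gradient (ISTA-style) spatial-domain solver for the objective `0.5 * ||x - sum_k d_k * z_k||^2 + lam * sum_k ||z_k||_1`. At each iteration, each signal position is kept with probability `rate`, and the kept residuals are scaled by `1 / rate` so the gradient estimate is unbiased. The function names (`stochastic_csc_codes` and its helpers), the step-size rule, and the example filters are hypothetical illustrations, not the authors' solver or code.

```python
import random


def conv_full(z, d):
    """Full 1-D convolution: output length is len(z) + len(d) - 1."""
    out = [0.0] * (len(z) + len(d) - 1)
    for i, zi in enumerate(z):
        if zi != 0.0:
            for j, dj in enumerate(d):
                out[i + j] += zi * dj
    return out


def reconstruct(codes, filters, n):
    """Model signal sum_k d_k * z_k (each code has length n - len(d_k) + 1)."""
    x_hat = [0.0] * n
    for z, d in zip(codes, filters):
        for t, v in enumerate(conv_full(z, d)):
            x_hat[t] += v
    return x_hat


def soft_threshold(v, tau):
    """Proximal operator of tau * |v|, i.e. the l1 sparsity penalty."""
    if v > tau:
        return v - tau
    if v < -tau:
        return v + tau
    return 0.0


def objective(x, codes, filters, lam):
    """Full CSC objective: 0.5 * squared reconstruction error + lam * l1 norm of codes."""
    x_hat = reconstruct(codes, filters, len(x))
    data = 0.5 * sum((a - b) ** 2 for a, b in zip(x_hat, x))
    return data + lam * sum(abs(v) for z in codes for v in z)


def stochastic_csc_codes(x, filters, lam, rate, iters, seed=0):
    """Hypothetical sketch: sparse codes for fixed filters via subsampled prox-gradient steps."""
    rng = random.Random(seed)
    n = len(x)
    codes = [[0.0] * (n - len(d) + 1) for d in filters]
    # Upper bound on the Lipschitz constant of the full data-term gradient.
    lip = sum(sum(abs(v) for v in d) ** 2 for d in filters)
    # The subsampled gradient is scaled by 1 / rate, so its Lipschitz constant
    # can reach lip / rate; shrink the step size to match.
    step = rate / lip
    for _ in range(iters):
        x_hat = reconstruct(codes, filters, n)
        # Keep each position with probability `rate`; dropped positions
        # contribute nothing to this iteration's gradient.
        resid = [(x_hat[t] - x[t]) / rate if rng.random() < rate else 0.0
                 for t in range(n)]
        for z, d in zip(codes, filters):
            for i in range(len(z)):
                # Correlate the masked residual with the filter (adjoint of convolution).
                g = sum(resid[i + j] * dj for j, dj in enumerate(d))
                z[i] = soft_threshold(z[i] - step * g, step * lam)
    return codes


# Usage: synthesize a signal from two known sparse codes, then recover codes.
filters = [[1.0, -0.5, 0.25], [0.5, 1.0, 0.5]]
true_codes = [[0.0] * 30, [0.0] * 30]
true_codes[0][5] = 1.0
true_codes[1][18] = -0.8
x = reconstruct(true_codes, filters, 32)
codes = stochastic_csc_codes(x, filters, lam=0.01, rate=0.5, iters=300)
```

In this toy version the full reconstruction is still computed at every iteration, so it shows the sampling pattern rather than the speedup; a practical solver would evaluate only the kept positions. As Xiong notes, the subsampling rate must be chosen sensibly, and this sketch makes no attempt to reproduce the paper's choice.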
