Approximating a kernel of truth

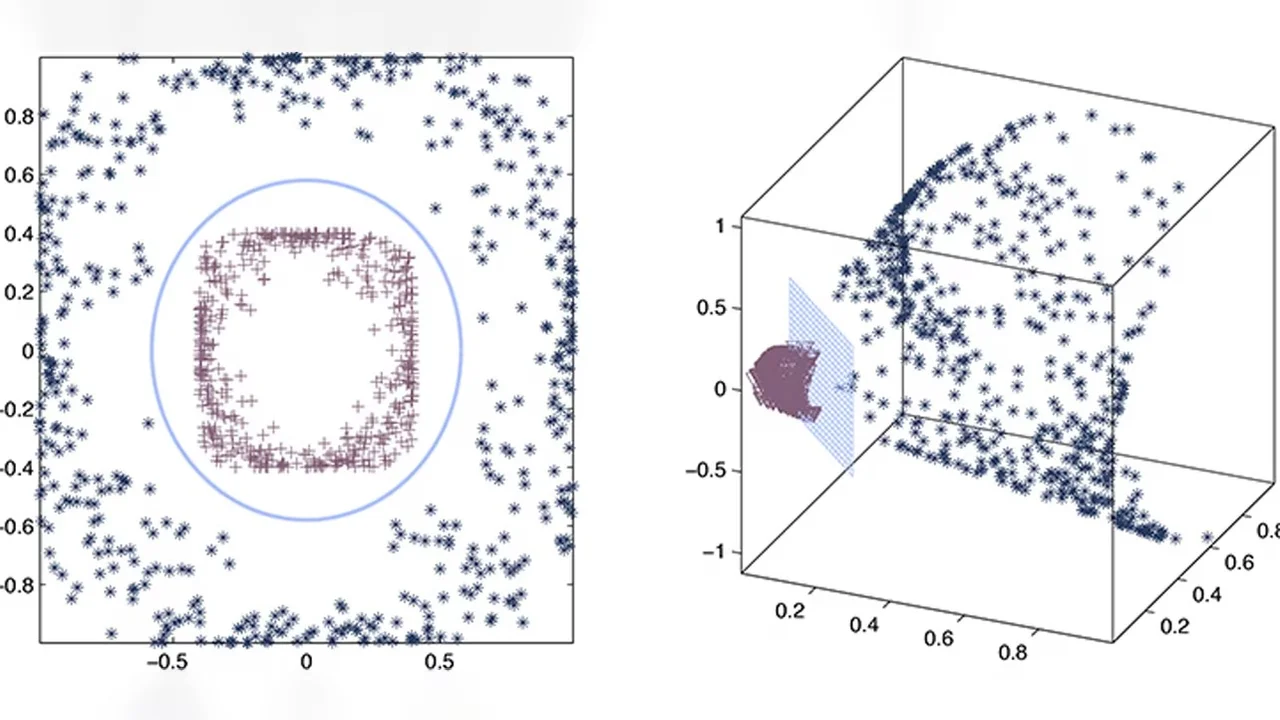

Machine learning tasks using very large data sets can be sped up significantly by estimating the kernel function that best describes the data.


By approximating, rather than computing exactly, the "kernel" function used to extract relationships in very large data sets, KAUST researchers have dramatically accelerated machine learning. The approach promises to greatly speed up artificial intelligence (AI) in the era of big data.

When an AI system is presented with a large, unfamiliar data set, it must analyze the data and build a model, or function, that describes the relationships within it. Calculating this function, or kernel, is computationally intensive: its cost grows cubically (with the third power of) the size of the data set, so doubling the data multiplies the work roughly eightfold. In the era of big data and growing reliance on AI for analysis, this means kernel selection can become impractically time-consuming.

Under the supervision of Xin Gao, Lizhong Ding and his colleagues have been developing statistical methods to speed up kernel selection.

“The computational complexity of accurate kernel selection is usually cubic with the number of samples,” says Ding. “This kind of cubic scaling is prohibitive for big data. We have instead proposed an approximation approach for kernel selection, which significantly improves the efficiency of kernel selection without sacrificing predictive performance.”
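The article does not describe the team's algorithm, so the following is only a generic illustration of where the cubic cost comes from and how an approximation can avoid it. It is not the KAUST method. The sketch selects the width of a Gaussian kernel for kernel ridge regression in two ways. The exact route solves an n × n linear system for every candidate kernel. The approximate route uses a Nyström-style approximation with m landmark points and solves only an m × m system. The kernel, the candidate widths, the regularization `lam`, the landmark count `m`, and every function name here are illustrative assumptions.

```python
import math

# Illustrative sketch only: a generic exact-vs-approximate kernel selection,
# not the method described in the article.

def rbf(x, z, gamma):
    """Gaussian (RBF) kernel on scalar inputs: exp(-gamma * (x - z)^2)."""
    return math.exp(-gamma * (x - z) ** 2)

def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting.
    For a k x k system this costs O(k^3): the cubic step."""
    k = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(k):
        piv = max(range(col, k), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, k):
            f = m[r][col] / m[col][col]
            for c in range(col, k + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * k
    for i in range(k - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, k))
        x[i] = (m[i][k] - s) / m[i][i]
    return x

def fit_exact(xs, ys, gamma, lam):
    """Exact kernel ridge regression: solve the n x n system (K + lam*I) alpha = y."""
    n = len(xs)
    a = [[rbf(xs[i], xs[j], gamma) + (lam if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    return xs, solve(a, ys)  # centres are all n training points

def fit_nystrom(xs, ys, gamma, lam, m=10):
    """Nystrom approximation with m landmarks (m << n): forming C^T C costs
    O(n m^2) and the solve costs O(m^3) instead of O(n^3)."""
    n = len(xs)
    marks = [xs[i * (n - 1) // (m - 1)] for i in range(m)]       # evenly spaced landmarks
    c = [[rbf(x, z, gamma) for z in marks] for x in xs]            # n x m matrix C
    a = [[sum(c[r][i] * c[r][j] for r in range(n))
          + lam * rbf(marks[i], marks[j], gamma)                   # C^T C + lam * W
          for j in range(m)] for i in range(m)]
    b = [sum(c[r][i] * ys[r] for r in range(n)) for i in range(m)]  # C^T y
    return marks, solve(a, b)

def predict(model, x, gamma):
    """Evaluate f(x) = sum_j coef_j * k(centre_j, x)."""
    centres, coef = model
    return sum(w * rbf(z, x, gamma) for z, w in zip(centres, coef))

def select_gamma(fit, gammas, train, val, lam=1e-3):
    """Return the kernel width with the lowest validation mean squared error."""
    (xs, ys), (xv, yv) = train, val

    def mse(g):
        model = fit(xs, ys, g, lam)
        return sum((predict(model, x, g) - y) ** 2 for x, y in zip(xv, yv)) / len(xv)

    return min(gammas, key=mse)

# Toy data: noise-free sin(x) on [0, 2*pi), validated at the midpoints.
n = 40
xs = [2 * math.pi * i / n for i in range(n)]
xv = [2 * math.pi * (i + 0.5) / n for i in range(n)]
train = (xs, [math.sin(x) for x in xs])
val = (xv, [math.sin(x) for x in xv])

print(select_gamma(fit_exact, [1.0, 1000.0], train, val))
print(select_gamma(fit_nystrom, [1.0, 1000.0], train, val))
```

Both routes compare the same candidate kernels, but the approximate one never factorizes an n × n matrix. With n in the millions and m in the hundreds, that difference is what turns an impractical cubic computation into a tractable one, at the price of an approximation error that depends on how well the landmarks cover the data.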
