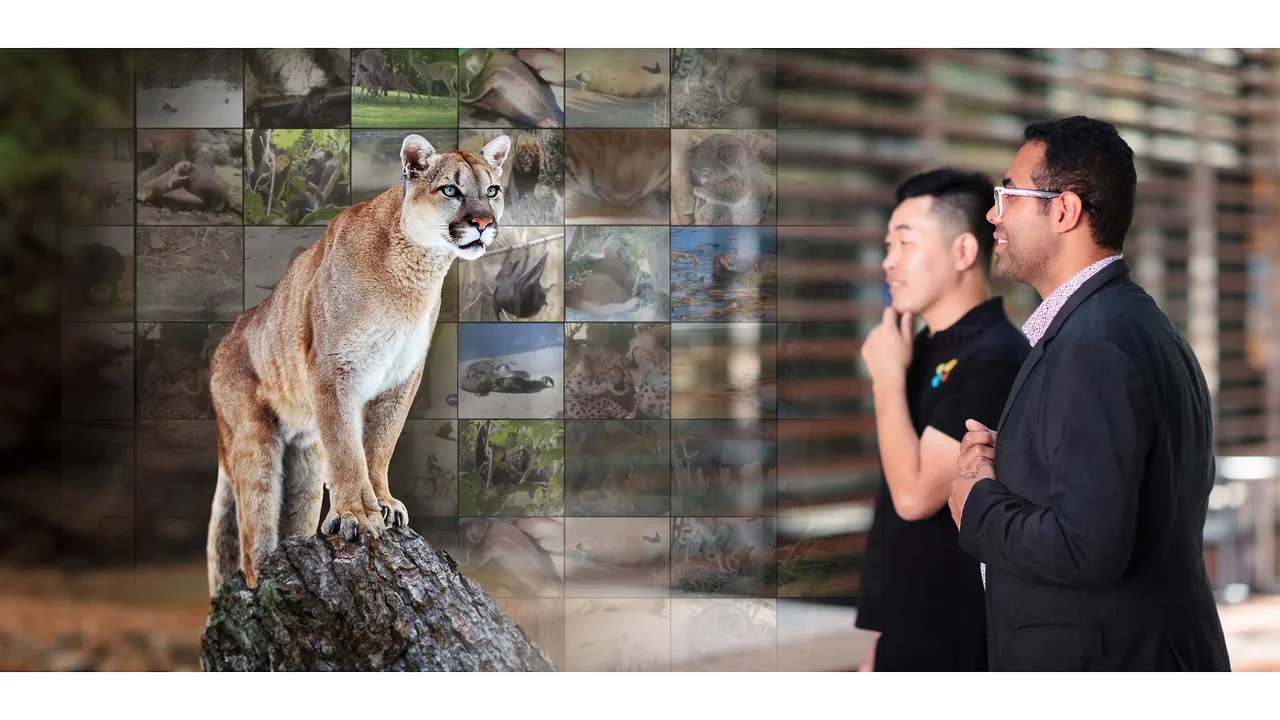

A knack for data and love of animals breed a tool for mammal conservation

A Ph.D. candidate’s passion for animals is helping to drive the development of an AI system that informs conservation practice.

Advances in sensor technology, including sensors mounted on cameras, drones and smartphones, are enabling animal researchers to accumulate vast amounts of video data about wildlife. Analyzing these videos at a large scale may provide new insights into animal behavior and ecology and ultimately guide conservation initiatives.

But how do we process and interpret such vast amounts of data, especially in a way that is genuinely applicable to conservation? A large-scale video dataset is a key first step, explains Jun Chen, a Ph.D. candidate in KAUST’s computer science program.

“To build an AI system that automatically recognizes animal species and behaviors from video footage, it needs to be trained on a diverse range of animals and associated behaviors,” says Chen. “For that, it’s crucial to first establish a large dataset of videos labeled with the animal species name and the behavior it is engaging in, so that the AI system can use it to learn.”

Chen created MammalNet, a dataset of more than 18,000 video clips, each labeled with the type of animal, spanning 17 orders, 69 families and 173 mammal categories, and with the behavior, drawn from 12 categories. It was built by downloading 60,000 videos from YouTube and then categorizing the type of animal and behavior automatically. The videos amount to 539 hours of footage, 10 times more than the previous largest video dataset on animal behavior.
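To make the idea of a labeled clip concrete, here is a minimal sketch of what one record in such a dataset might look like, along with the average clip length implied by the figures above. The field names, the example clip and the behavior label are hypothetical and are not taken from MammalNet's actual schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LabeledClip:
    """One annotated video clip (hypothetical schema, for illustration only)."""
    video_id: str   # made-up identifier
    order: str      # taxonomic order
    family: str     # taxonomic family
    category: str   # mammal category
    behavior: str   # behavior label (assumed vocabulary)


def average_clip_seconds(total_hours: float, n_clips: int) -> float:
    """Average clip length in seconds for a given total duration and clip count."""
    return total_hours * 3600 / n_clips


clip = LabeledClip("example_0001", "Carnivora", "Felidae", "tiger", "hunting")

# 539 hours spread over 18,000 clips comes to roughly 108 seconds per clip.
print(round(average_clip_seconds(539, 18_000)))
```

Because the dataset holds more than 18,000 clips, the true average is somewhat below this figure.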

The idea for MammalNet was conceived when Chen, whose work covers vision and language datasets, became interested in video. “I realized that most studies in video datasets were for understanding human activity,” says Chen. “I love animals, so I thought, ‘Couldn’t we be applying video datasets for many other animals as well?’” While large-scale datasets of static images already exist, videos offer more nuanced insights into animal behavior.

Chen’s advisor, Mohamed Elhoseiny, assistant professor of computer science at KAUST, helped assemble a cross-disciplinary team that included conservation experts and researchers with experience in video understanding. In previous work, Elhoseiny developed text-based datasets of birds using images.

“The trickiest part for MammalNet was to build a taxonomy that the AI system can use to infer family, genus and tribe,” says Elhoseiny.

One of the most important goals for MammalNet was to recognize animals accurately from a diverse range of possibilities. Datasets for individual species like elephants, sheep and tigers are useful, but they cannot train AI systems to identify every possible combination of animal type and behavior.

To the team’s surprise, a complete and structured animal taxonomy was not available on the internet. They manually created a taxonomy by combining information from encyclopedia sites and websites about mammals. “For MammalNet to be useful in real practice, it was crucial that the taxonomy we built in was aligned with what ecologists actually use,” adds Elhoseiny.
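A structured taxonomy of this kind can be pictured as a lookup from each mammal category to its family and order. The sketch below is a hypothetical illustration with a handful of well-known entries. It is not the taxonomy the team built, and the names `TAXONOMY`, `lineage` and `same_family` are invented for this example.

```python
# Hypothetical mini-taxonomy: category -> (family, order).
TAXONOMY = {
    "tiger": ("Felidae", "Carnivora"),
    "lion": ("Felidae", "Carnivora"),
    "sheep": ("Bovidae", "Artiodactyla"),
    "african elephant": ("Elephantidae", "Proboscidea"),
}


def lineage(category: str) -> dict:
    """Return the family and order recorded for a mammal category."""
    family, order = TAXONOMY[category]
    return {"category": category, "family": family, "order": order}


def same_family(a: str, b: str) -> bool:
    """True if two categories are recorded under the same family."""
    return TAXONOMY[a][0] == TAXONOMY[b][0]


print(lineage("tiger"))
print(same_family("tiger", "lion"))   # both are Felidae
print(same_family("tiger", "sheep"))  # different families
```

With a structure like this, a prediction at the category level can be rolled up to the family or order level, which is one way a system could still give a useful answer when the exact category is uncertain.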

In MammalNet, the team also aspired to identify the meaning behind actions automatically. For example, running could result from a need to chase prey, escape from a predator or play. “It’s more useful for ecologists and zoologists to identify behaviors at this level,” explains Elhoseiny. “It’s not so much actions like drinking and eating that conservation managers care about. At the end of the day, what they want to know are whether the animals are getting enough food and water.”

They also found that it was possible to recognize the behaviors of rare mammals, for which only small samples are available, by making inferences from other animals.

Recognizing both the type of animal and behavior at the same time was a key achievement for MammalNet. “This was a contrast from video datasets focused on humans, a single species,” says Chen. “This made the task more like a complex computation problem, so our collaborators’ expertise was instrumental in making this work.”

Furthermore, Chen built in the ability to pinpoint when a behavior begins and ends within each video, which prior datasets were unable to do automatically. “When filming animals with trap cameras, it’s uncertain when animals will begin displaying certain behaviors. That’s why it’s important to have a system that is naturally capable of identifying whether nothing is happening, or if something does start to happen, but also when that is, which animal and what activity,” says Elhoseiny.
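One common way to judge how well a predicted start and end time matches an annotated one is temporal intersection over union (IoU), the overlap between the two time spans divided by their combined extent. The sketch below shows that calculation under assumed inputs. The segment times are made up, and this is offered as a general illustration rather than as the evaluation procedure used for MammalNet.

```python
def temporal_iou(pred: tuple, truth: tuple) -> float:
    """Temporal IoU of two (start, end) segments given in seconds."""
    # Overlap is zero when the segments do not intersect.
    intersection = max(0.0, min(pred[1], truth[1]) - max(pred[0], truth[0]))
    union = (pred[1] - pred[0]) + (truth[1] - truth[0]) - intersection
    return intersection / union if union > 0 else 0.0


# Hypothetical example: a behavior annotated from 10 s to 25 s,
# and a system that predicts it runs from 12 s to 30 s.
print(temporal_iou((12.0, 30.0), (10.0, 25.0)))

# Segments that do not overlap at all score zero.
print(temporal_iou((0.0, 5.0), (10.0, 15.0)))
```

A score of 1 means the predicted and annotated segments coincide exactly, and a score of 0 means they do not overlap.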

The project succeeded in part because it had domain experts on board, explains team member Anna Rohrbach, a professor of multimodal grounded learning at Technical University Darmstadt in Germany. “One of the best things about this project was the ensemble of scientists across disciplines spanning computer vision, artificial intelligence, behavioral ecology and biodiversity,” she says.

Team member Sara Beery, assistant professor of AI and decision making at Massachusetts Institute of Technology, explains that “MammalNet is an important step toward understanding wildlife behavior in the wild across a diverse set of both species and ecologically relevant behavior categories. This dataset provides a valuable testbed for researchers to iterate and improve on existing species and behavior categorization AI methods, an essential tool for research progress that was previously a major bottleneck.”

The team envisions that MammalNet could be used to train AI systems that analyze animal behavior, for example in footage from trap cameras placed in forests or from monitoring in zoos. “There are also many other users we haven’t even imagined because every behavior ecology lab will have their own research questions and a subset of animals they look at,” says Elhoseiny.

While they are currently only focused on mammals, Chen and Elhoseiny hope to expand the scope of the project to cover the entire animal kingdom, including marine species.