By Valentina De Vincenti

Ask data scientists, whichever datasets they mine, and they will all lament the same need for faster parallel data mining methods.

The answer is ScaleMine, a cost-effective, agile and scalable approach to parallel frequent subgraph mining in a single large graph. Panagiotis Kalnis, Professor of Computer Science (CS), and his team from the KAUST Extreme Computing Research Center (ECRC), part of the Computer, Electrical and Mathematical Sciences and Engineering (CEMSE) Division, developed this novel approach.

In the age of the data deluge, Kalnis and co-workers recognize how heavily the advancement of scientific knowledge across fields depends on Frequent Subgraph Mining (FSM).

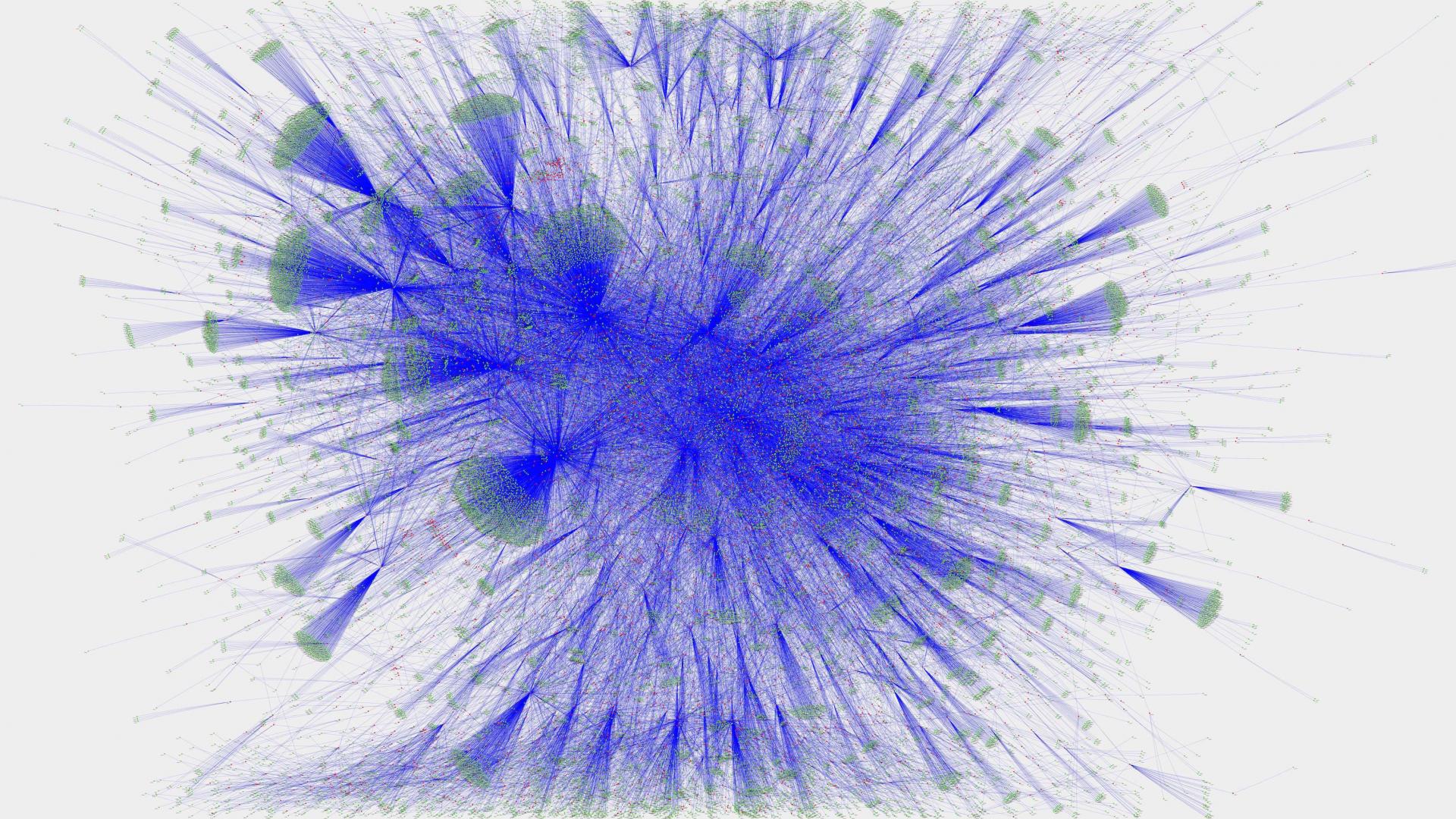

This pivotal process extracts information by searching for recurring patterns in graphs, the data structures that model complex relationships among objects in large datasets.

"FSM involves a vast number of graph operations, processing hundreds of millions of objects and billions of relationships, each of which is computationally expensive. Thus, the only practical way to support FSM in large graphs is by massively parallel computation," Kalnis stressed.

The ECRC team set a solid milestone in parallel computing, with ScaleMine setting itself apart from earlier parallel FSM strategies. With traditional parallel approaches, data scientists could extract information by running different graph-based tasks simultaneously, but these approaches do not scale well to large graphs, which causes long delays and requires continual adjustments to the code.

"We provided a better algorithm, instead. All the applications that depend on Frequent Subgraph Mining are able to perform deeper analysis on larger data with a ten times faster response," Kalnis explained.

The novel approach by the ECRC team mines large graphs in two steps: first, an approximation step determines the search space and the optimal division of tasks; then, large tasks are split dynamically into the optimal number of subtasks. As a result, the computational workload is scaled accurately and the FSM process runs in a more agile way.
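The two-step idea can be illustrated with a toy sketch. This is not ScaleMine's algorithm, only a minimal Python illustration under stated assumptions: each task is a hypothetical non-empty list of positive per-item costs, the approximation step estimates a task's cost from a random sample, and tasks whose estimate exceeds a per-worker budget are split into subtasks. The function names (`estimate_cost`, `plan_subtasks`, `run`) are invented for this example, and the splitting is planned up front rather than dynamically at run time.

```python
import math
import random
from concurrent.futures import ThreadPoolExecutor


def estimate_cost(task, sample_size=20, seed=0):
    """Step 1 (approximation): estimate a task's total cost from a sample.

    Assumes `task` is a non-empty list of positive per-item costs.
    """
    rng = random.Random(seed)
    sample = rng.sample(task, min(sample_size, len(task)))
    return len(task) * sum(sample) / len(sample)


def plan_subtasks(tasks, num_workers):
    """Step 2: split tasks whose estimated cost exceeds the per-worker budget."""
    estimates = [estimate_cost(t) for t in tasks]
    budget = sum(estimates) / num_workers  # target cost per worker
    subtasks = []
    for task, est in zip(tasks, estimates):
        # Number of pieces needed so each piece roughly fits the budget.
        parts = min(len(task), max(1, math.ceil(est / budget)))
        size = math.ceil(len(task) / parts)
        subtasks.extend(task[i:i + size] for i in range(0, len(task), size))
    return subtasks


def run(tasks, num_workers=4):
    """Process all subtasks in a worker pool; summing stands in for real work."""
    subtasks = plan_subtasks(tasks, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        return sum(pool.map(sum, subtasks)), len(subtasks)
```

For instance, with one task of 1,000 unit-cost items and two tasks of 10 items each on four workers, the large task is cut into four pieces while the small ones are left whole, so no single worker is stuck with most of the load.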

The work has already attracted the attention of fellow data scientists. The team's future work will focus on extending this performance improvement so that new knowledge can be extracted faster and more cost-effectively.