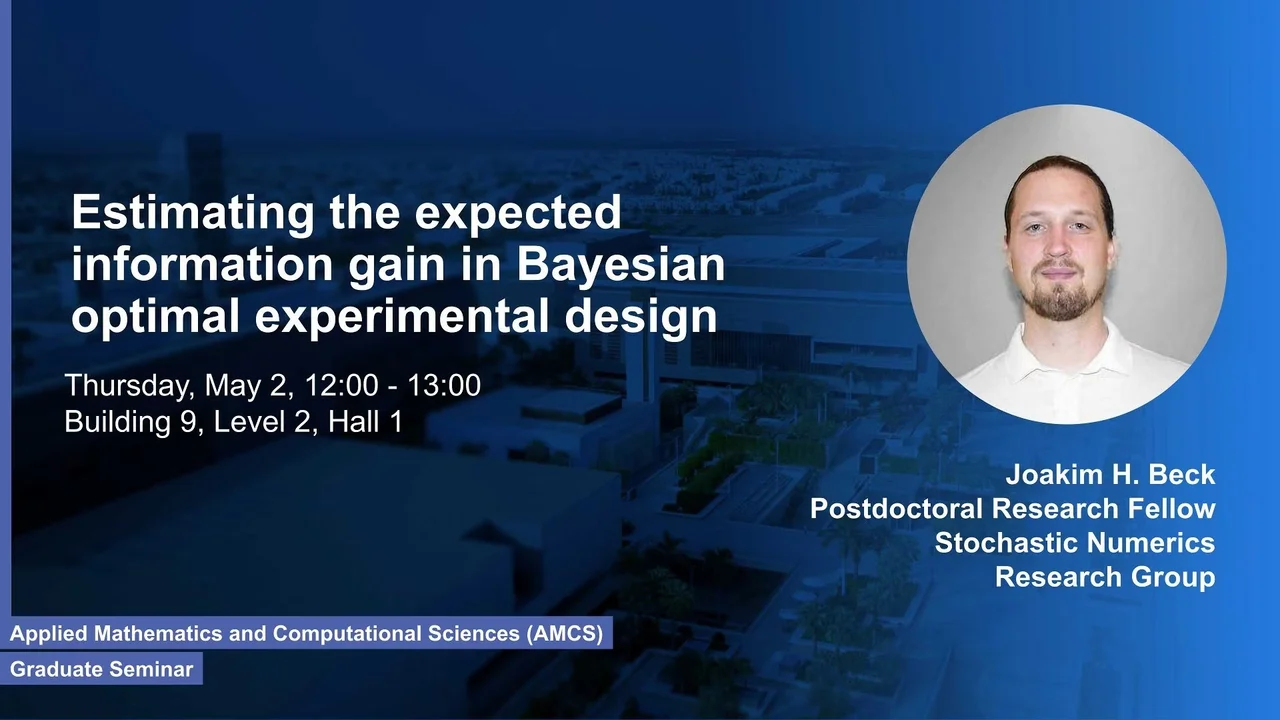

Estimating the expected information gain in Bayesian optimal experimental design

B9 L2 H1

Overview

Abstract

Optimal experimental design for parameter estimation is a fast-growing area of research. We consider experiments whose goal is to infer certain attributes of a complex system from measurements of selected system responses; the optimal designs are those that maximize the value of the measurement data. This value is quantified by the expected information gain utility, which measures how informative an experiment is. Often, a mathematical model is used to approximate the relationship between the system responses and the model parameters, which act as proxies for the attributes of interest.
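For reference, the expected information gain of a design can be written as Lindley's utility, the expected Kullback–Leibler divergence from the prior to the posterior. The notation here is an assumption for illustration and is not taken from the talk: $\xi$ is the design, $\theta$ the model parameters with prior $\pi(\theta)$, and $y$ the data with likelihood $p(y \mid \theta, \xi)$.

$$
\mathrm{EIG}(\xi) = \int_{\Theta}\int_{\mathcal{Y}} \log\!\left(\frac{p(y\mid\theta,\xi)}{p(y\mid\xi)}\right) p(y\mid\theta,\xi)\,\pi(\theta)\,\mathrm{d}y\,\mathrm{d}\theta,
\qquad
p(y\mid\xi) = \int_{\Theta} p(y\mid\theta,\xi)\,\pi(\theta)\,\mathrm{d}\theta .
$$

The evidence $p(y\mid\xi)$ is itself an integral nested inside the outer expectation, which is why sample-based estimators of the EIG need an inner and an outer averaging loop.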

The focus of this talk is on reducing the computational cost of estimating the expected information gain, which is known to be computationally challenging. The most common estimator is standard double-loop Monte Carlo (DLMC), which typically requires millions of samples to reach acceptable accuracy. To improve on standard DLMC, one can use the Laplace approximation of the posterior density in the inner averaging, either as a surrogate or as an importance sampling distribution, which reduces the number of required samples to the order of thousands. DLMC with Laplace-based importance sampling (DLMCIS) has been shown to substantially reduce the statistical error of the inner averaging, and in recent work we derived an optimized DLMCIS estimator whose parameters are chosen so that a prescribed error tolerance is met with a specified probability.
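The difference between the two estimators can be sketched in a few lines of Python/NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the scalar linear-Gaussian model $y = \xi\theta + \varepsilon$ with $\theta \sim N(0, \sigma_p^2)$ and $\varepsilon \sim N(0, \sigma_\varepsilon^2)$, the default values of `sigma_p` and `sigma_e`, and all function names are hypothetical. Because the model is linear, the Laplace approximation (a Gaussian centered at the MAP estimate, with variance equal to the inverse Hessian of the negative log-posterior) is available in closed form and coincides with the exact posterior.

```python
import numpy as np

# Hypothetical scalar model (an assumption for illustration only):
#   y = xi * theta + eps,  theta ~ N(0, sigma_p^2),  eps ~ N(0, sigma_e^2)


def log_normal_pdf(x, mean, sd):
    """Log density of N(mean, sd^2) evaluated at x (broadcasts over arrays)."""
    return -0.5 * ((x - mean) / sd) ** 2 - np.log(sd * np.sqrt(2.0 * np.pi))


def log_lik(y, theta, xi, sigma_e):
    """Gaussian log-likelihood log p(y | theta, xi) for the assumed model."""
    return log_normal_pdf(y, xi * theta, sigma_e)


def logmeanexp(a, axis):
    """Numerically stable log(mean(exp(a))) along the given axis."""
    amax = np.max(a, axis=axis, keepdims=True)
    return np.squeeze(amax, axis=axis) + np.log(np.mean(np.exp(a - amax), axis=axis))


def eig_dlmc(xi, N, M, sigma_p=1.0, sigma_e=0.1, rng=None):
    """Standard DLMC: the inner evidence estimate uses fresh prior samples."""
    rng = np.random.default_rng(rng)
    theta = rng.normal(0.0, sigma_p, N)              # outer prior samples
    y = xi * theta + rng.normal(0.0, sigma_e, N)     # synthetic data, one per outer sample
    theta_in = rng.normal(0.0, sigma_p, (N, M))      # inner prior samples
    log_evidence = logmeanexp(log_lik(y[:, None], theta_in, xi, sigma_e), axis=1)
    return np.mean(log_lik(y, theta, xi, sigma_e) - log_evidence)


def eig_dlmcis(xi, N, M, sigma_p=1.0, sigma_e=0.1, rng=None):
    """DLMC with Laplace-based importance sampling in the inner loop."""
    rng = np.random.default_rng(rng)
    theta = rng.normal(0.0, sigma_p, N)
    y = xi * theta + rng.normal(0.0, sigma_e, N)
    # Laplace approximation of p(theta | y): MAP and inverse Hessian of the
    # negative log-posterior, in closed form because the model is linear.
    post_var = 1.0 / (1.0 / sigma_p**2 + xi**2 / sigma_e**2)
    post_mean = post_var * xi * y / sigma_e**2       # shape (N,)
    post_sd = np.sqrt(post_var)
    theta_in = post_mean[:, None] + post_sd * rng.standard_normal((N, M))
    # Log importance weights: log p(y | theta) + log pi(theta) - log q(theta).
    log_w = (log_lik(y[:, None], theta_in, xi, sigma_e)
             + log_normal_pdf(theta_in, 0.0, sigma_p)
             - log_normal_pdf(theta_in, post_mean[:, None], post_sd))
    log_evidence = logmeanexp(log_w, axis=1)
    return np.mean(log_lik(y, theta, xi, sigma_e) - log_evidence)
```

For example, `eig_dlmc(1.0, N=2000, M=500)` averages the likelihood over prior samples in the inner loop, most of which fall far from the narrow posterior. In this linear case `eig_dlmcis(1.0, N=4000, M=1)` already has no inner statistical error, because every importance weight equals the evidence $p(y\mid\xi)$ up to rounding. For nonlinear models the Laplace approximation is no longer exact, so the inner variance is reduced rather than eliminated, and computing the MAP and Hessian generally requires an optimization step for each outer sample.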

We use three numerical examples to demonstrate the computational efficiency of the proposed method. The first two are synthetic: a linear and a nonlinear scalar problem. The third involves a complex system, namely an electrical impedance tomography design problem whose goal is to infer the fiber orientation in laminated composites.
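Linear scalar problems are convenient benchmarks because their EIG is known exactly. The exact form of the talk's linear example is not given here; assuming, hypothetically, $y = \xi\theta + \varepsilon$ with $\theta \sim N(0,\sigma_p^2)$ and $\varepsilon \sim N(0,\sigma_\varepsilon^2)$, the posterior is Gaussian with variance $\sigma_{\mathrm{post}}^2$, and averaging the prior-to-posterior KL divergence over $y$ (using $\mathbb{E}[\mu_{\mathrm{post}}^2] = \sigma_p^2 - \sigma_{\mathrm{post}}^2$ by the law of total variance) gives a closed form:

$$
\sigma_{\mathrm{post}}^{2} = \left(\frac{1}{\sigma_p^{2}} + \frac{\xi^{2}}{\sigma_\varepsilon^{2}}\right)^{-1},
\qquad
\mathrm{EIG}(\xi) = \log\frac{\sigma_p}{\sigma_{\mathrm{post}}} + \frac{\sigma_{\mathrm{post}}^{2} + (\sigma_p^{2} - \sigma_{\mathrm{post}}^{2})}{2\sigma_p^{2}} - \frac{1}{2}
= \frac{1}{2}\log\!\left(1 + \frac{\xi^{2}\sigma_p^{2}}{\sigma_\varepsilon^{2}}\right).
$$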

- Beck, J., Dia, B. M., Espath, F. R., Long, Q., and Tempone, R., “Fast Bayesian experimental design: Laplace-based importance sampling for the expected information gain,” Computer Methods in Applied Mechanics and Engineering, 334, 523–553, 2018.

- Beck, J., Dia, B. M., Espath, F. R., and Tempone, R., “Multilevel double loop Monte Carlo and stochastic collocation methods with importance sampling for Bayesian optimal experimental design,” arXiv preprint arXiv:1811.11469, 2018.

Brief Biography

Dr. Joakim Beck is a postdoctoral research fellow in the Stochastic Numerics research group led by Prof. Raúl Tempone in the Computer, Electrical and Mathematical Sciences & Engineering (CEMSE) division at KAUST. Before joining KAUST in 2016, he held a research associate position (2013–2016) at the Department of Statistical Science, University College London (UCL). He obtained a PhD in Engineering from UCL in 2014, after completing an MSc (Eng.) degree in Engineering Physics at the Royal Institute of Technology (KTH) in Stockholm, Sweden.

Refreshments: Light lunch will be provided.