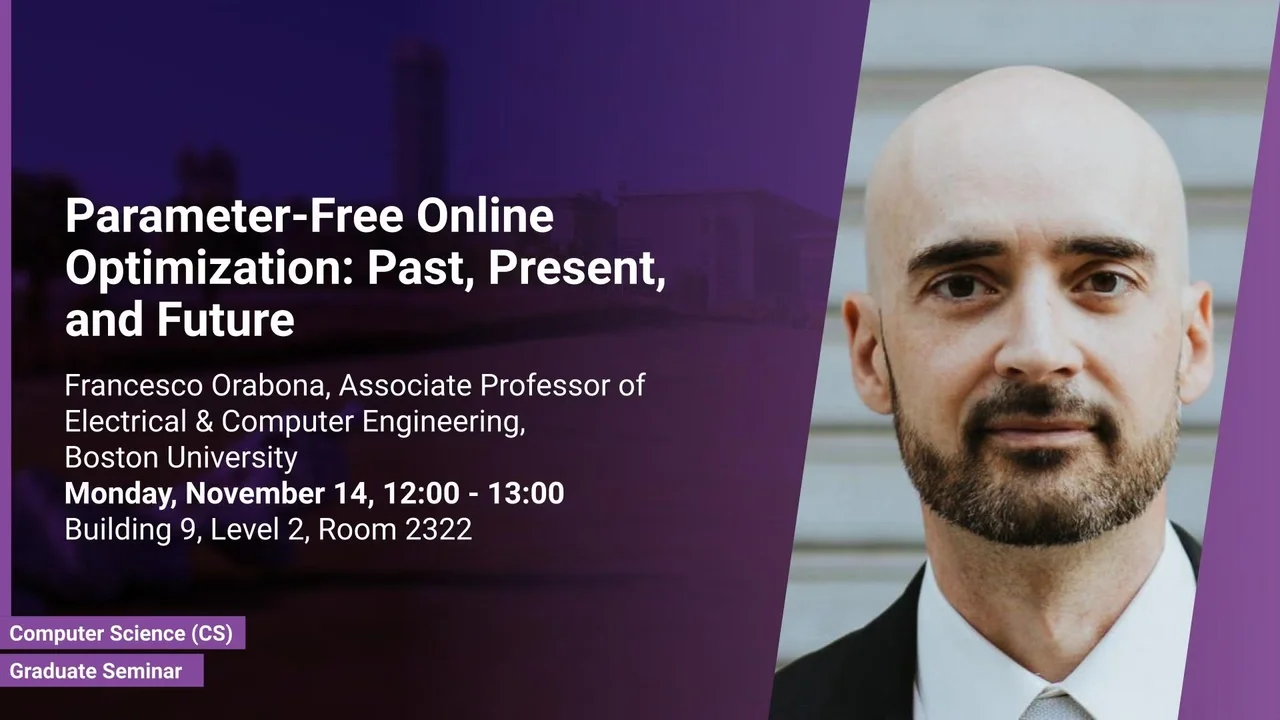

Parameter-Free Online Optimization: Past, Present, and Future

- Francesco Orabona, Associate Professor of Electrical and Computer Engineering, Boston University

B9 L2 R2322


Overview

Abstract

Machine learning has been described as the fuel of the next industrial revolution. Yet current state-of-the-art learning algorithms still rely heavily on a human in the loop to work properly. Practitioners most commonly either follow their intuition or exhaustively evaluate all the possibilities. In certain cases, however, we can do better. Parameter-free online optimization algorithms require no hyperparameter tuning, yet they achieve theoretically optimal performance, and they often achieve state-of-the-art performance in practice as well. One example is gradient descent algorithms that have no learning rate at all. In this talk, I review my past and present contributions to this field. Building on a fundamental idea connecting optimization, gambling, and information theory, I discuss selected applications of parameter-free algorithms to machine learning and statistics. Finally, I conclude with an overview of future directions for the field.
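To make the link between optimization and gambling concrete, here is a minimal sketch of a one-dimensional optimizer based on Krichevsky-Trofimov (KT) coin betting, a standard construction from the parameter-free literature. It is an illustration only and not necessarily the algorithm presented in the talk. The function name, its arguments, and the assumption that subgradients lie in [-1, 1] are all choices made for this example.

```python
def kt_coin_betting_minimize(subgradient, num_rounds=5000, initial_wealth=1.0):
    """Minimize a 1-D convex function with KT coin betting (illustrative sketch).

    Assumptions (not from the announcement): `subgradient(x)` returns a
    subgradient of the objective at x, and subgradients are clipped to [-1, 1].
    There is no learning rate; `initial_wealth` is the gambler's starting money.
    """
    wealth = initial_wealth   # money currently held by the gambler
    coin_sum = 0.0            # running sum of past coin outcomes (negative subgradients)
    iterate_sum = 0.0         # running sum of iterates, used for the average

    for t in range(1, num_rounds + 1):
        # KT bet: wager a signed fraction of the current wealth.
        # The fraction has magnitude below 1, so wealth stays positive.
        x = (coin_sum / t) * wealth

        g = subgradient(x)
        g = max(-1.0, min(1.0, g))  # enforce the bounded-subgradient assumption

        coin = -g                   # the "coin outcome" is the negative subgradient
        wealth += coin * x          # win or lose money on the bet
        coin_sum += coin
        iterate_sum += x

    # The average iterate inherits the regret guarantee for convex objectives.
    return iterate_sum / num_rounds


if __name__ == "__main__":
    # Example objective: f(x) = |x - 3|, whose subgradient is sign(x - 3).
    estimate = kt_coin_betting_minimize(lambda x: (x > 3.0) - (x < 3.0))
    print(estimate)
```

The iterate is the amount of money bet on the next coin flip, so the step size is set implicitly by the gambler's accumulated wealth rather than by a tuned learning rate.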

Brief Biography

Francesco Orabona is an Associate Professor of Electrical & Computer Engineering at Boston University. His research interests lie in online learning, optimization, and statistical learning theory. He obtained his Ph.D. from the University of Genova in 2007. He was previously an Assistant Professor of Computer Science at Stony Brook University, a Senior Research Scientist at Yahoo Labs, and a Research Assistant Professor at the Toyota Technological Institute at Chicago. He received an NSF Faculty Early Career Development (CAREER) Award in 2021 and a Google Research Award in 2017.
