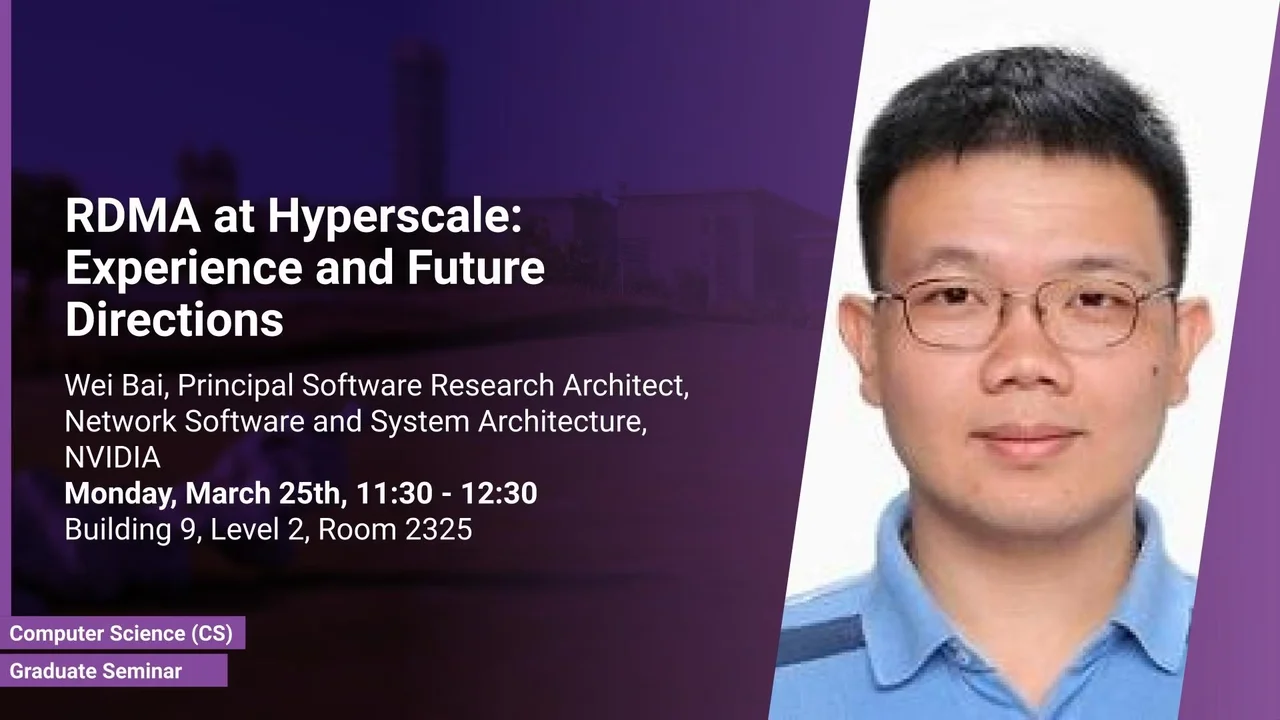

RDMA at Hyperscale: Experience and Future Directions

- Wei Bai, Principal Software Research Architect, NVIDIA

B9 L2 H2

Abstract

Remote Direct Memory Access (RDMA) has long been recognized as a powerful technology for high-performance computing and data-intensive applications. In this talk, I will present our experience deploying intra-region RDMA to support storage workloads in Azure. Today, RDMA accounts for around 70% of traffic in Azure, and intra-region RDMA is supported in all Azure public regions. I will also discuss some open problems we see going forward and our early efforts to address them.

Brief Biography

Wei Bai recently joined NVIDIA as a principal software research architect. Before that, he was a senior researcher in the Networking Research Group at Microsoft Research Redmond. He received his PhD in computer science from the Hong Kong University of Science and Technology. Wei is broadly interested in computer networking, with a special focus on data center networking. His research has been published in top conferences and journals such as SIGCOMM, NSDI, and IEEE/ACM Transactions on Networking. Wei also has extensive experience developing and operating production cloud networks. He currently focuses on network infrastructure to support hyperscale AI workloads.