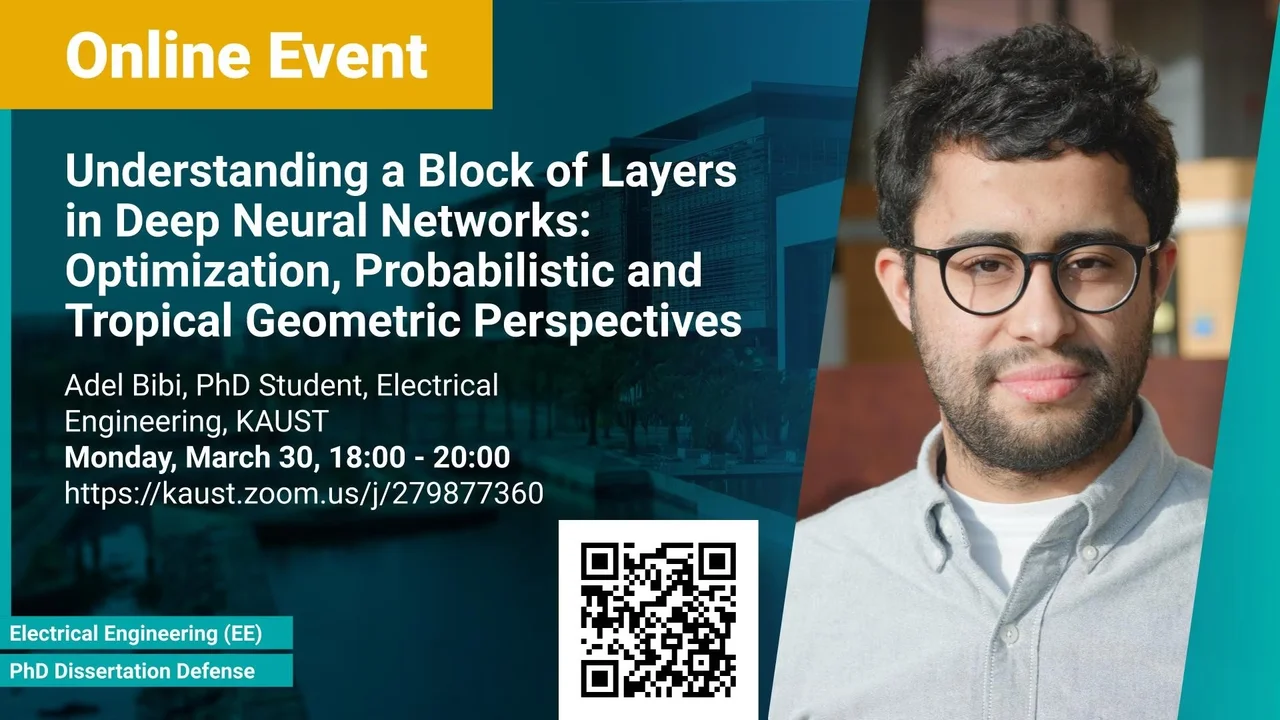

Understanding a Block of Layers in Deep Neural Networks: Optimization, Probabilistic and Tropical Geometric Perspectives

In this dissertation, we aim at theoretically studying and analyzing deep learning models. Since deep models substantially vary in their shapes and sizes, in this dissertation, we restrict our work to a single fundamental block of layers that is common in almost all architectures. The block of layers of interest is the composition of an affine layer followed by a nonlinear activation function and then lastly followed by another affine layer. We study this block of layers from three different perspectives. (i) An Optimization Perspective. We try addressing the following question: Is it possible that the output of the forward pass through the block of layers highlighted above is an optimal solution to a certain convex optimization problem? As a result, we show an equivalency between the forward pass through this block of layers and a single iteration of certain types of deterministic and stochastic algorithms solving a particular class of tensor formulated convex optimization problems.

Overview

Abstract

Deep learning has revolutionized plethora of countless domains and applications since Alex Krizhevsky introduced AlexNet winning the ImageNet visual object recognition challenge. Since then, deep learning found its way to various applications from computer vision, machine learning, natural language processing and speech recognition to bioinformatics and medicine. All of this has led several renowned researchers as Andrew Ng to refer to deep learning as the "new electricity". To that end, various deep learning architectures, nonlinear activations and training schemes are introduced every now and then demonstrating even further new impressive empirical performances on several tasks. However, most of such new variations lack theoretical justification and that, unfortunately, a full theoretical characterization of deep learning models remains elusive.

In this dissertation, we aim at theoretically studying and analyzing deep learning models. Since deep models substantially vary in their shapes and sizes, in this dissertation, we restrict our work to a single fundamental block of layers that is common in almost all architectures. The block of layers of interest is the composition of an affine layer followed by a nonlinear activation function and then lastly followed by another affine layer. We study this block of layers from three different perspectives. (i) An Optimization Perspective. We try addressing the following question: Is it possible that the output of the forward pass through the block of layers highlighted above is an optimal solution to a certain convex optimization problem? As a result, we show an equivalency between the forward pass through this block of layers and a single iteration of certain types of deterministic and stochastic algorithms solving a particular class of tensor formulated convex optimization problems. As a consequence of this analysis, we show and derive for the first time a formula for computing the exact singular values of convolutional layers surpassing the need for the prohibitively expensive construction of the underlying convolutional matrix. With this equivalency, we show that several deep networks can have this block of layers replaced with the corresponding optimization algorithm predicted by our theory resulting in a network that can enjoy better generalization performance. (ii) A Probabilistic Perspective. We try addressing the following question: Is it possible to analytically analyze the behaviour of the output of a deep network upon subjecting the input to an arbitrary noise distribution? To that regard, we derive analytical formulas for the first and second moments (mean and consequently variance) of the block of layers highlighted above, with only piecewise linear activation functions, under Gaussian noise at the input. We demonstrate that through approaches of two stage linearizations, the derived expressions can indeed be used to efficiently analyze the output of an arbitrary deep network. Moreover, we show that such expressions can be very useful for constructing, for the first time, Gaussian adversarial attacks surpassing any need for prohibitive data augmentation procedures. (iii) A Tropical Geometry Perspective. While tropical geometry is the younger twin to algebraic geometry, it seems to be the right tool to analyzing and studying piecewise linear functions. To that regard, we are interested in addressing the following questions: Is it possible to study the behaviour of the decision boundaries of the block of layers highlighted above as a geometric structure that represents the solution set to a certain class of polynomials (tropical polynomials)? If yes, then is it possible to utilize this new geometric representation of the decision boundaries for novel reformulations to classical computer vision and machine learning tasks with arbitrary deep networks? To that regard, we show that the decision boundaries of the block of layers highlighted above are a subset of a tropical hypersurface, which is intimately related to a well structured polytope that is the convex hull of two zonotopes. We utilize this geometric characterization to shed lights on new perspectives of network pruning.

Brief Biography

Adel Bibi received his BSc degree in electrical engineering with class honors from Kuwait university in 2014. He later obtained his MSc degree in electrical engineering with a focus on computer vision from King Abdullah University of Science and Technology (KAUST) in 2016. Currently, he is pursuing his PhD degree with a focus at the intersection between computer vision, machine learning and optimization. Adel has been recognized as an outstanding reviewer for CVPR18, CVPR19 and ICCV19 and won the best paper award at the optimization and big data conference in KAUST. Several of his works were selected for fully oral presentation and spotloghts. He has published more than 10 papers in CVPRs, ECCVs, ICCVs and ICLRs.