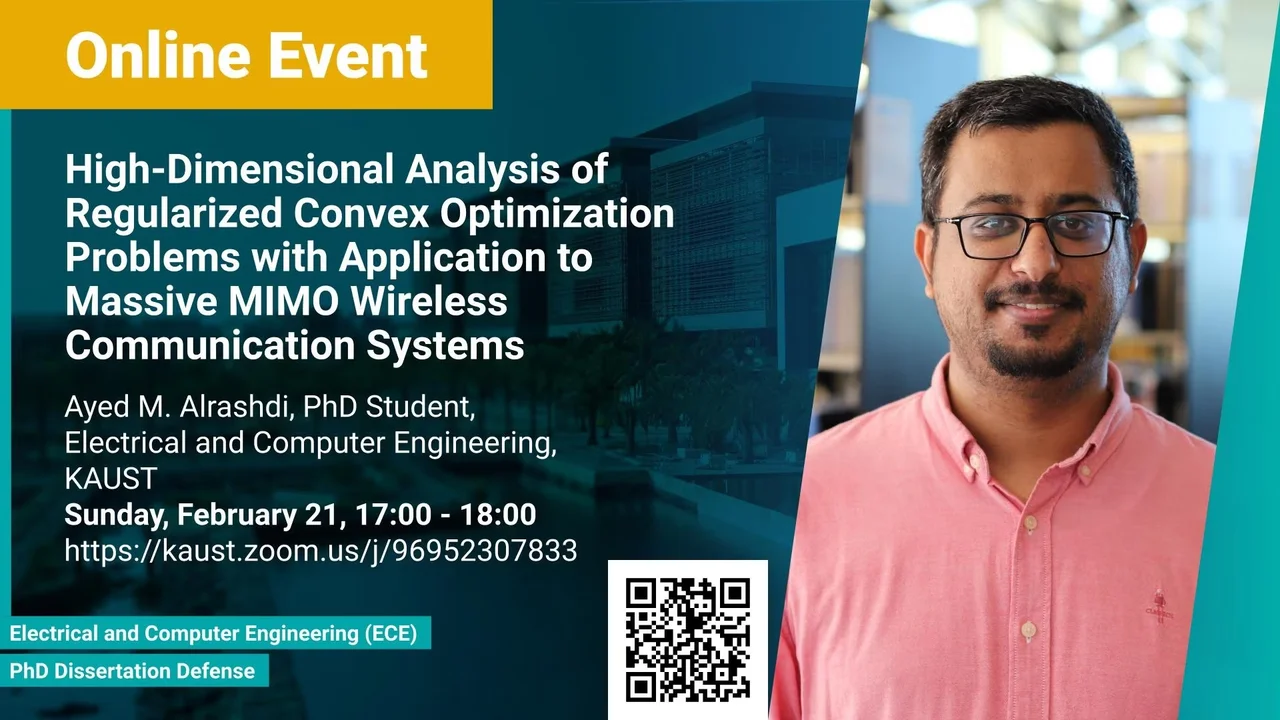

High-Dimensional Analysis of Regularized Convex Optimization Problems with Application to Massive MIMO Wireless Communication Systems

In this thesis, we focus on precisely analyzing the high dimensional error performance of such regularized convex optimization problems under the presence of different impairments (such as uncertainties and/or correlations) in the measurement matrix, which has independent Gaussian entries. The precise nature of our analysis allows performance comparison between different types of these estimators and enables us to optimally tune the involved hyperparameters. In particular, we study the performance of some of the most popular cases in linear inverse problems, such as the Least Squares (LS), Regularized Least Squares (RLS), LASSO, Elastic Net, and their box-constrained variants.

Overview

Abstract

In the past couple of decades, the amount of data available has dramatically increased. Thus, in modern large-scale inference problems, the dimension of the signal to be estimated is comparable or even larger than the number of available observations. Yet the desired properties of the signal lie in some low-dimensional structure, such as sparsity, low-rankness, finite alphabet, etc. Recently, non-smooth regularized convex optimization has risen as a

powerful tool for the recovery of such structured signals from noisy linear measurements in an assortment of applications in signal processing, wireless communications, machine learning, computer vision, etc. With the advent of Compressed Sensing (CS), there has been a huge number of theoretical results that consider the estimation performance of non-smooth convex optimization in such a highdimensional setting.

In this thesis, we focus on precisely analyzing the high dimensional error performance of such regularized convex optimization problems under the presence of different impairments (such as uncertainties and/or correlations) in the measurement matrix, which has independent Gaussian entries. The precise nature of our analysis allows performance comparison between different types of these estimators and enables us to optimally tune the involved hyperparameters. In particular, we study the performance of some of the most popular cases in linear inverse problems, such as the Least Squares (LS), Regularized Least Squares (RLS), LASSO, Elastic Net, and their box-constrained variants. In each context, we define

appropriate performance measures, and we analyze them exactly in the High Dimensional Regime (HDR). We use our results for a concrete application of designing efficient decoders for modern massive Multi-input Multi-output (MIMO) wireless communication systems and optimally allocate their power.

The framework used for the analysis is based on Gaussian process methods, in particular, on a recently developed strong and tight version of the classical Gordon comparison inequality which is called the Convex Gaussian Min-max Theorem (CGMT). Also, we use some results from Random Matrix Theory (RMT) in our analysis as well.

Brief Biography

Ayed M. Alrashdi received the B.S. degree in Electrical Engineering (with first honors) from the University of Hail, Hail, Saudi Arabia, in 2014, and the M.S. degree in Electrical Engineering from King Abdullah University of Science and Technology (KAUST), Thuwal, Saudi Arabia, in 2016. He is a PhD candidate in the Electrical and Computer Engineering department, KAUST working with Professor Tareq Al-Naffouri in the Information System Lab (ISL). He is also a lecturer at The University of Hail since 2014.