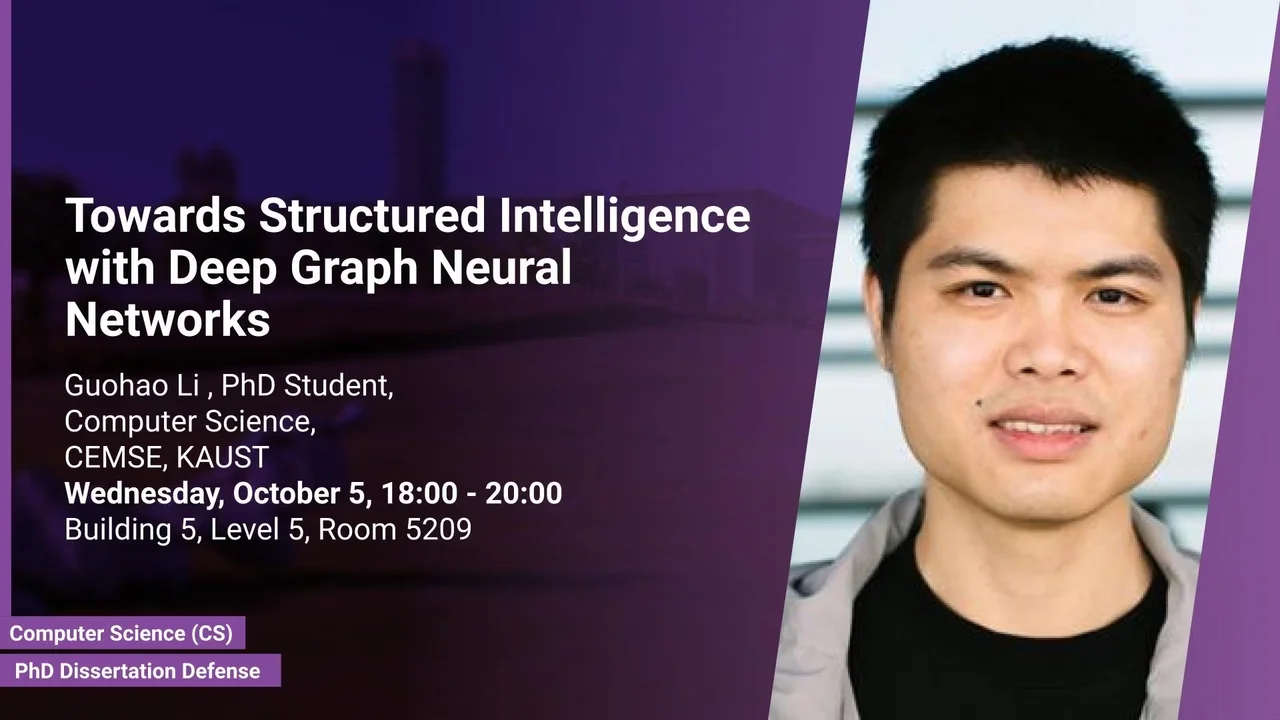

Towards Structured Intelligence with Deep Graph Neural Networks

This dissertation discusses approaches to building large-scale, efficient graph machine learning models that learn structured representations, with applications in engineering and the sciences. It presents how to make Graph Neural Networks (GNNs) go deep through new architectural designs and how to search for GNN architectures automatically with novel neural architecture search algorithms.

Overview

Abstract

Advances in convolutional neural networks and recurrent neural networks have led to significant improvements in learning on regular grid data domains such as images and text. However, many real-world datasets, for instance social networks, citation networks, molecules, point clouds, and 3D meshes, do not lie on such a simple grid. Such data is irregular or non-Euclidean in structure and carries complex relational information. Graph machine learning, especially Graph Neural Networks (GNNs), offers a way to process such irregular data and to model the relations between entities, and it is leading the machine learning field into a new era. However, previous state-of-the-art (SOTA) GNNs are limited to shallow architectures because of challenging problems such as vanishing gradients, overfitting, and over-smoothing. Most SOTA GNNs are no deeper than 3 or 4 layers, which restricts their representational power and makes learning on large-scale graphs ineffective. To resolve this challenge, this dissertation discusses approaches to building large-scale, efficient graph machine learning models that learn structured representations, with applications in engineering and the sciences. It presents how to make GNNs go deep through new architectural designs and how to search for GNN architectures automatically with novel neural architecture search algorithms.

Brief Biography

Guohao Li is currently a Ph.D. candidate in Computer Science at King Abdullah University of Science and Technology (KAUST). He is a member of the Image and Video Understanding Laboratory (IVUL) in the Visual Computing Center (VCC) and of the KAUST AI Initiative, advised by Prof. Bernard Ghanem. During his Ph.D. studies, he worked at Intel ISL as a research intern with Dr. Vladlen Koltun and Dr. Matthias Müller, visited Prof. Fisher Yu's lab at ETH Zurich as a visiting researcher, and spent time at Kumo.ai as a Ph.D. intern with Prof. Jure Leskovec. His primary research interests are Graph Machine Learning, Computer Vision, and Embodied AI. He is the lead of DeepGCN.org and a core contributor to PyG.org. He has published related papers in top-tier conferences and journals such as ICCV, CVPR, ICML, NeurIPS, RSS, and TPAMI.