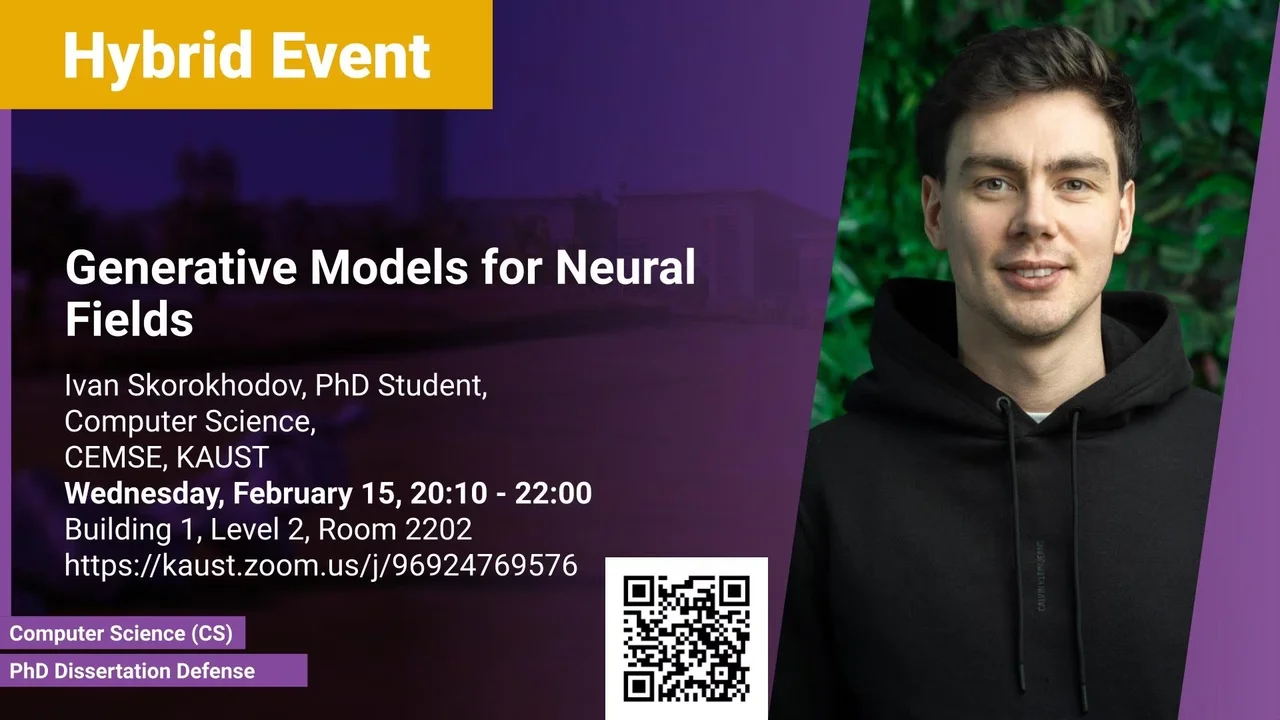

Generative Models for Neural Fields

B1 L2 R2202

In computer vision, generative AI models are typically built for images, videos, and 3D objects. The recently emerged paradigm of neural fields unifies the representations of these data types by parametrizing them with neural networks. In this thesis, we develop generative models for images, videos, and 3D scenes that treat the underlying data in this form, and we explore the benefits this perspective provides.

Overview

Abstract

Deep generative models are deep learning-based methods optimized to synthesize samples from a given distribution. In recent years, they have attracted much interest from the research community, and the resulting tools now have many practical applications in content creation and editing. In computer vision, such models are typically built for images, videos, and 3D objects. The recently emerged paradigm of neural fields unifies the representations of these data types by parametrizing them with neural networks. In this work, we develop generative modeling methods for images, videos, and 3D objects that treat the underlying data in this form. We show that this perspective can yield state-of-the-art synthesis quality and useful practical benefits, such as interpolation and extrapolation capabilities, geometric inductive biases, and more efficient training and inference.

Brief Biography

Ivan Skorokhodov is a Computer Science Ph.D. student at the Visual Computing Center at KAUST, supervised by Prof. Peter Wonka and Prof. Mohamed Elhoseiny. He completed his BS and MS studies at the Moscow Engineering Physics Institute and holds an additional CS degree from the Yandex School of Data Analysis. Before joining KAUST, he worked as a software engineer at Yandex and as a researcher at the Moscow Institute of Physics and Technology. During his Ph.D. studies at KAUST, he carried out several collaborations with Snap Research and completed an internship there. His research focuses on generative models, hypernetworks, and neural rendering.