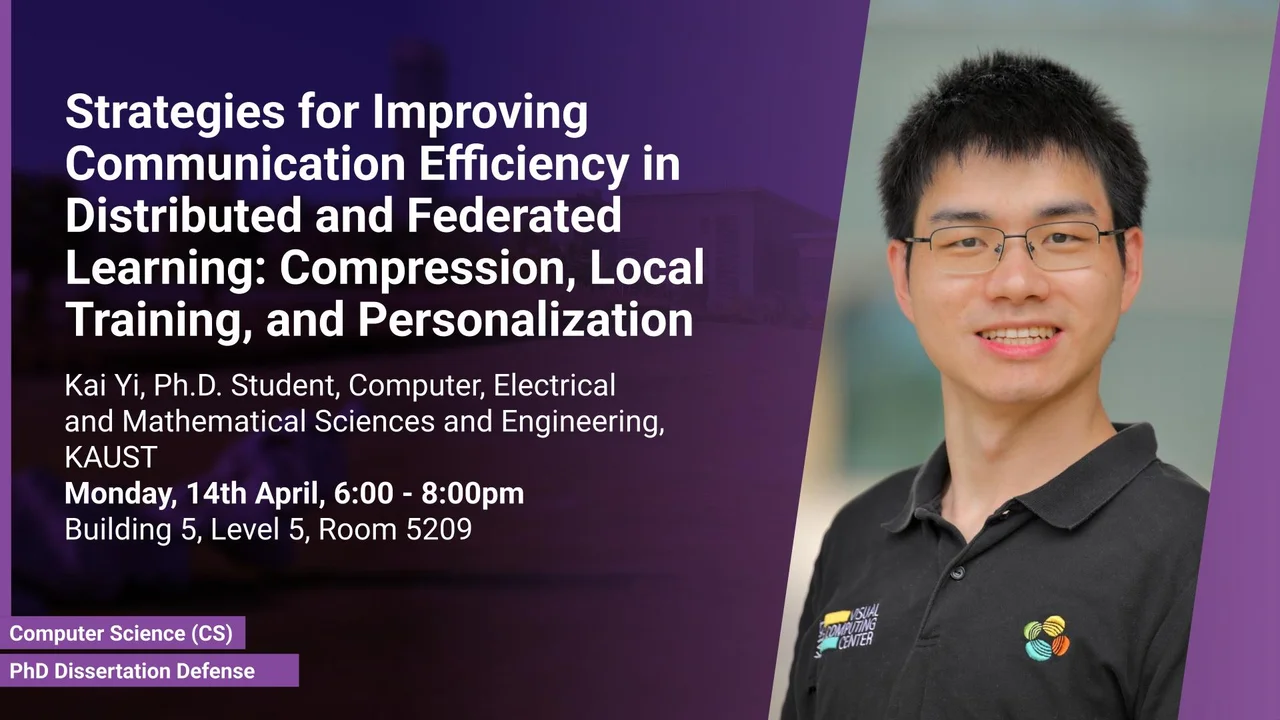

Strategies for Improving Communication Efficiency in Distributed and Federated Learning: Compression, Local Training, and Personalization

This dissertation advances communication-efficient distributed and federated learning through novel algorithms for model compression, local training optimization, and personalization, validated by theoretical analysis and empirical results.

Overview

This dissertation presents strategies to enhance communication efficiency in distributed and federated learning, focusing on model compression, local training, and personalization. It introduces the EF-BV method for error feedback and variance reduction, FedP3 for provably privacy-friendly and personalized federated pruning, the Scafflix algorithm for adaptive and accelerated local training, Cohort-Squeeze for efficient federated pruning, and SymWanda for post-training pruning. Extensive experiments, supported by theoretical analysis, demonstrate improvements in communication efficiency, model accuracy, and convergence speed, contributing to more scalable distributed learning systems.
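The overview names error feedback as one way to keep training stable when workers send compressed updates. As a rough illustration only, the sketch below implements EF21, a well-known error-feedback scheme, paired with a top-k compressor. It is not EF-BV and not code from the dissertation. The helper names (`top_k`, `ef21`, `quadratic_grad`), the toy quadratic losses, the step size, and the initialization with one uncompressed round are all assumptions chosen for the example.

```python
from typing import Callable, List

Vector = List[float]


def top_k(v: Vector, k: int) -> Vector:
    """Top-k sparsifier: keep the k entries of largest magnitude, zero the rest."""
    keep = set(sorted(range(len(v)), key=lambda j: abs(v[j]), reverse=True)[:k])
    return [v[j] if j in keep else 0.0 for j in range(len(v))]


def ef21(grads: List[Callable[[Vector], Vector]], x0: Vector,
         lr: float, k: int, steps: int) -> Vector:
    """Hypothetical EF21-style loop: workers send only top-k compressed corrections."""
    n, d = len(grads), len(x0)
    x = list(x0)
    # Assumption: each worker's state g_i starts at its full gradient (one uncompressed round).
    g_local = [grad(x) for grad in grads]
    g = [sum(gi[j] for gi in g_local) / n for j in range(d)]
    for _ in range(steps):
        # Server takes a step along the aggregated gradient estimate.
        x = [x[j] - lr * g[j] for j in range(d)]
        for i, grad in enumerate(grads):
            gx = grad(x)
            # Compress only the difference between the fresh gradient and the stored state.
            c = top_k([gx[j] - g_local[i][j] for j in range(d)], k)
            g_local[i] = [g_local[i][j] + c[j] for j in range(d)]
            # Server applies the same compressed correction to its running average.
            g = [g[j] + c[j] / n for j in range(d)]
    return x


def quadratic_grad(a: Vector) -> Callable[[Vector], Vector]:
    """Toy loss f_i(x) = 0.5 * ||x - a||^2, whose gradient is x - a (hypothetical example)."""
    return lambda x: [xj - aj for xj, aj in zip(x, a)]


# Two hypothetical workers; the average loss is minimized at [2, 0, 2, 2].
workers = [quadratic_grad([1.0, 2.0, 3.0, 4.0]), quadratic_grad([3.0, -2.0, 1.0, 0.0])]
x_final = ef21(workers, x0=[0.0] * 4, lr=0.05, k=1, steps=3000)
print([round(v, 6) for v in x_final])
```

Each worker transmits a single coordinate per round (k=1 of d=4), yet the iterates still approach the minimizer because the compression error is fed back into the stored state rather than discarded.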

Presenter

Kai Yi

Brief Biography

Kai Yi is a PhD candidate in Computer Science at King Abdullah University of Science and Technology (KAUST), where he is supervised by Peter Richtárik and works in the Optimization and Machine Learning Lab. He earned his master's degree in Computer Science at KAUST in 2021 under the supervision of Mohamed Elhoseiny, and he completed his Bachelor of Engineering with honors at Xi'an Jiaotong University (XJTU) in 2019.

He has interned at several leading research institutions, including Sony AI, Vector Institute, Tencent AI Lab, CMU Xulab, NUS CVML Group, and SenseTime Research. His primary research focuses on centralized and federated LLM compression. His publications include the LLM post-training compression algorithms SymWanda and PV-Tuning (NeurIPS Oral); the communication-efficient federated learning methods Cohort-Squeeze (NeurIPS-W Oral), FedP3 (ICLR), and EF-BV (NeurIPS); and the multimodal language model projects DACZSL (ICCVW), HGR-Net (ECCV), and VisualGPT (CVPR).

He serves as a reviewer for leading journals, including TPAMI, IJCV, and TMC, and for top conferences such as NeurIPS, ICLR, ICML, CVPR, ECCV, and ICCV.