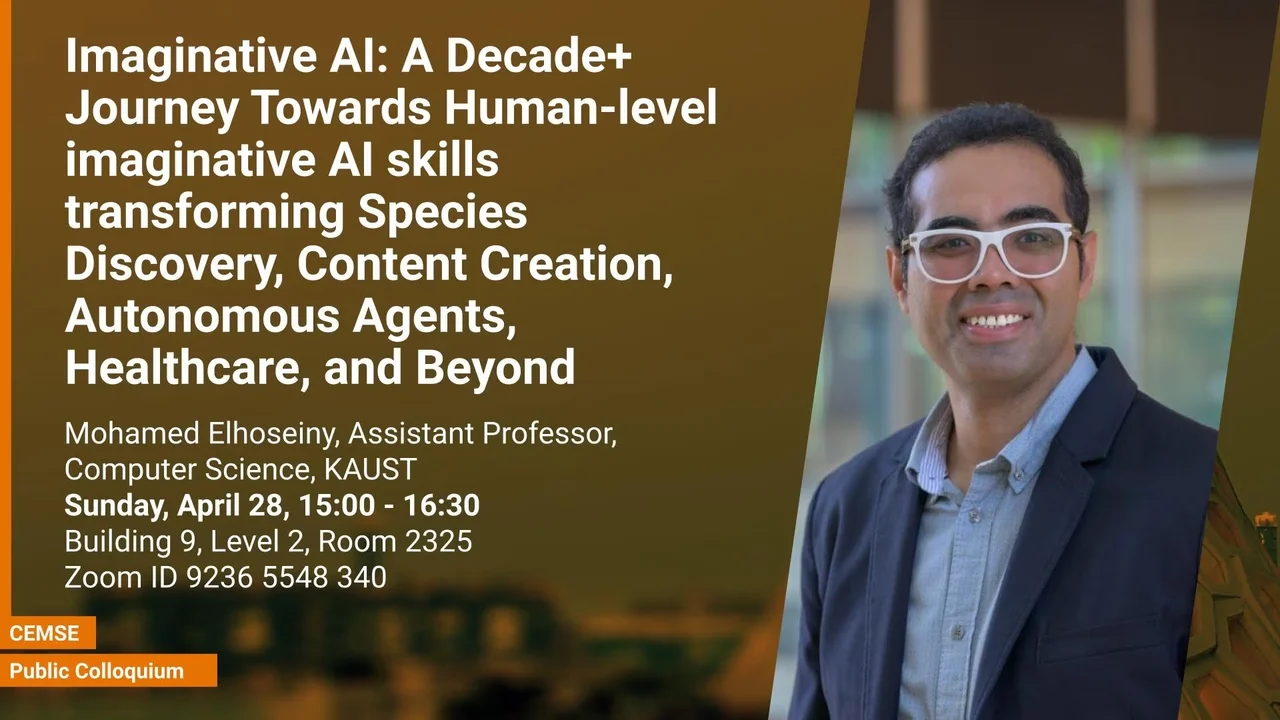

Imaginative AI: A Decade+ Journey Towards Human-Level Imaginative AI Skills Transforming Species Discovery, Content Creation, Autonomous Agents, Healthcare, and Beyond

Overview

Abstract

Most existing AI learning methods can be categorized as supervised, semi-supervised, or unsupervised. These approaches rely on defining empirical risks or losses on the provided labeled and/or unlabeled data. In this talk, I will go beyond extracting learning signals from labeled/unlabeled training data and cover a class of methods that I have been developing for over a decade, which can learn beyond the vocabulary they were trained on and can compose or create novel concepts. Specifically, we address the question of how these AI skills may assist in species discovery, content creation, autonomous agents, emotional health, and beyond. We refer to this class of techniques as imaginative AI methods, and we will dive into how we developed several approaches to build machine learning methods that can see, create, drive, and feel. See: recognize unseen visual concepts using imaginative learning signals, and extend this to a continual setting where seen and unseen classes change dynamically. Create: generate novel art and fashion using creativity losses. Drive: improve trajectory forecasting for autonomous driving by modeling hallucinative driving intents. Feel: generate emotional descriptions of visual art that are metaphoric and go beyond grounded descriptions, and build these AI systems to be more inclusive of multiple cultures. We will also cover how this class of methods has been revisited in light of recent advances in LLMs, which we have developed to be skilled in computer vision, facilitating a variety of perception and generative visual skills. While going over these aspects, we will point out the potential of this technology and its future research to help develop better assistive technology for a multicultural and more inclusive metaverse, emotional and physical health, species and drug discovery, and AI-assisted neuroscience.

Brief Biography

Mohamed Elhoseiny is an assistant professor of Computer Science at KAUST and a senior member honoree of AAAI and IEEE. Previously, he was a visiting faculty member at the Stanford Computer Science Department (Oct 2019-March 2020), a visiting faculty member at Baidu Research (March-October 2019), and a postdoctoral researcher at Facebook AI Research (Nov 2016-Jan 2019). Dr. Elhoseiny earned his Ph.D. in 2016 from Rutgers University, where he was part of the Art & AI Lab, and spent time at SRI International in 2014 and at Adobe Research (2015-2016). His primary research interest is in computer vision, especially efficient multimodal learning with limited data in zero/few-shot learning and vision and language. He is also interested in affective AI, especially in understanding and generating novel visual content (e.g., art and fashion). He received an NSF Fellowship in 2014, the Doctoral Consortium award at CVPR’16, and the Best Paper award at ECCVW’18 on Fashion and Design, and was selected as an MIT 35 under 35 semi-finalist in 2020. His zero-shot learning work was featured at the United Nations, and his creative AI work was featured in MIT Tech Review, New Scientist Magazine, Forbes Science, and HBO Silicon Valley. He has served as an Area Chair at major CV/AI conferences, including CVPR’21, ICCV’21, IJCAI’22, ECCV’22, ICLR’23, CVPR’23, ICCV’23, NeurIPS’23, ICLR’24, CVPR’24, and SG Asia’24, and has organized the Closing the Loop Between Vision and Language workshops at ICCV’15, ICCV’17, ICCV’19, ICCV’21, and ICCV’23.