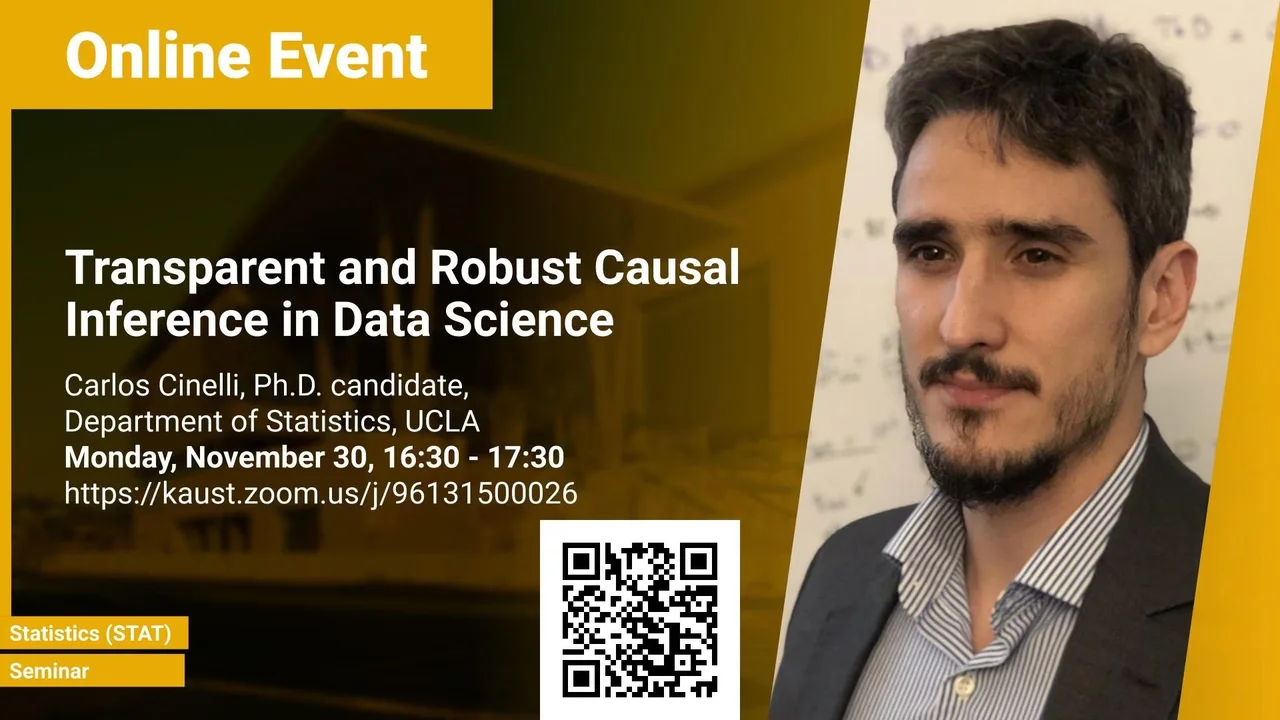

Transparent and Robust Causal Inference in Data Science

- Carlos Cinelli, Ph.D. candidate, Department of Statistics, UCLA

KAUST

Overview

Abstract

The past few decades have witnessed rapid and unprecedented theoretical progress in the science of causal inference, ranging from the “credibility revolution” with the popularization of quasi-experimental designs, to the development of a complete solution to non-parametric identification with causal graphical models. Most of this theoretical progress, however, relies on strong, exact assumptions, such as the absence of unobserved common causes or the absence of certain direct effects. Unfortunately, more often than not, these assumptions are very hard to defend in practice. This leads to two undesirable consequences for applied quantitative work in the data-intensive sciences: (i) important research questions may be neglected simply because they do not exactly match the requirements of current methods; or (ii) researchers may succumb to making the required “identification assumptions” simply to justify the use of available methods, not because these assumptions are truly believed (or understood). In this talk, I will discuss new theories, methods, and software for permitting causal inferences under more flexible and realistic settings. These tools empower scientists and policymakers both to examine the sensitivity of causal inferences to violations of their underlying assumptions and to draw robust and trustworthy conclusions in settings where traditional methods fail.

Brief Biography

Carlos Cinelli is a Ph.D. candidate in Statistics at the University of California, Los Angeles, working under the supervision of Judea Pearl and Chad Hazlett. His research focuses on developing new causal and statistical methods for transparent and robust causal claims in the empirical sciences. He is also interested in the intersections of causality with machine learning and artificial intelligence.