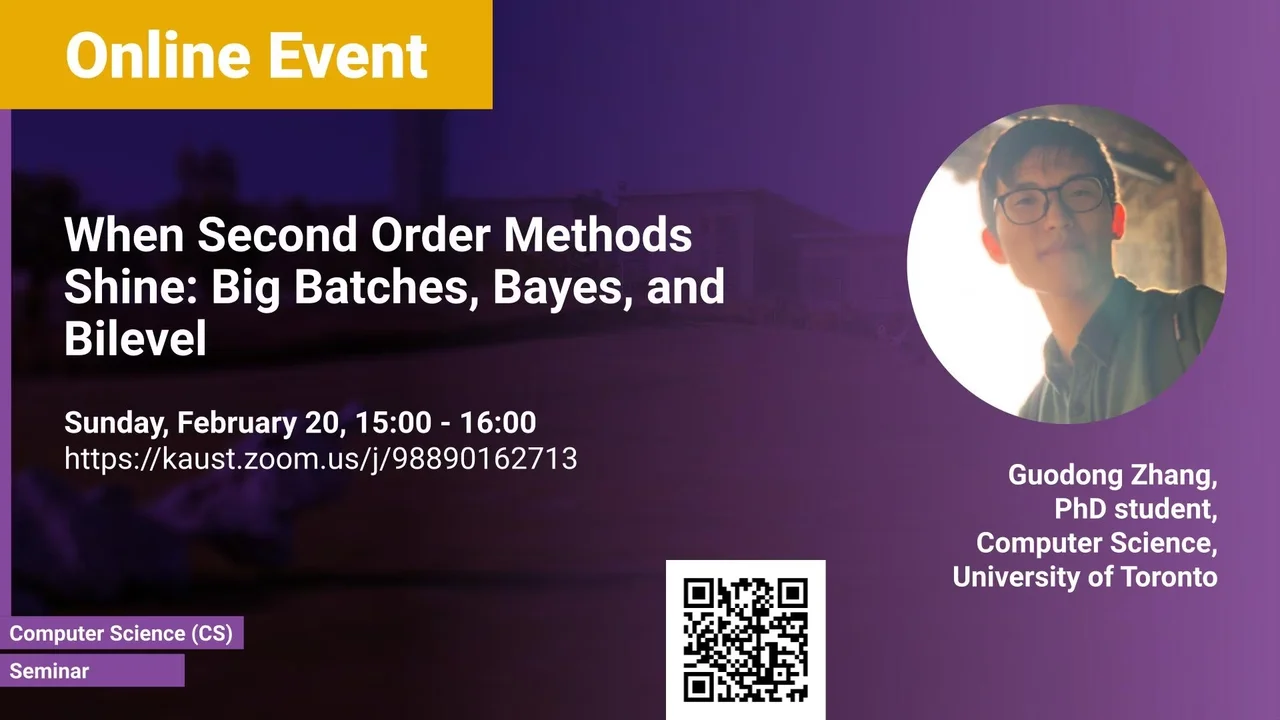

When Second Order Methods Shine: Big Batches, Bayes, and Bilevel

- Guodong Zhang, PhD student at the University of Toronto

KAUST


Overview

Abstract

Many challenges in modern machine learning involve three fundamental problems: optimization, Bayesian inference, and multi-player games. In this talk, I will discuss how second-order information (e.g., curvature or covariance) can help with all three problems, though it plays a very different role in each. First, I will present a noisy quadratic model that qualitatively predicts the scaling properties of a variety of optimizers and, in particular, suggests that second-order optimization algorithms would extend perfect scaling to much larger batch sizes. Second, I will show how scalable and flexible Bayesian inference algorithms can be derived and implemented from standard second-order optimization algorithms. Third, I will describe a novel second-order algorithm that finds the desired equilibria and avoids converging to spurious fixed points in two-player sequential games (i.e., bilevel optimization) or even more general settings. Finally, I will conclude by discussing how my research could pave the way toward intelligent machines that learn efficiently from data and experience, reason about their own decisions, and act in our interests.
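To make the first point more concrete, here is a minimal sketch of a noisy quadratic model in Python/NumPy. Every modeling choice below is an illustrative assumption, not a detail taken from the talk: a diagonal quadratic loss with curvatures h_i = 1/i, gradient noise with per-coordinate variance c_i/B where c_i = h_i, a diagonal preconditioner diag(h)^(-p) (p = 0 is plain SGD), a constant learning rate tuned over a grid, and a starting point of 1 in every coordinate. Because this model is linear and Gaussian, the mean and variance of each coordinate evolve in closed form, so the expected loss can be computed exactly rather than simulated. The helper `steps_to_target` and all parameter values are hypothetical.

```python
import numpy as np


def steps_to_target(h, c, p, batch_sizes, lrs, target, max_steps=10_000):
    """Fewest steps (best lr on the grid) for the expected loss to reach target.

    Assumed model (illustrative only): loss 0.5 * sum_i h_i * theta_i**2,
    stochastic gradient h_i * theta_i + noise with variance c_i / B,
    update theta -= lr * h**(-p) * grad, constant lr, theta_0 = 1.
    Returns np.inf for batch sizes that never reach target within max_steps.
    """
    h_eff = h ** (1.0 - p)                 # curvature seen after preconditioning
    noise = h ** (-2.0 * p) * c            # per-step added variance is lr**2 * noise / B
    t = np.arange(max_steps + 1)
    B = np.asarray(batch_sizes, dtype=float)[:, None]
    best = np.full(len(batch_sizes), np.inf)
    for lr in lrs:
        z = lr * h_eff
        if np.any(z >= 2.0):               # unstable: some coordinate fails to converge
            continue
        r2 = (1.0 - z) ** 2                # per-step contraction of mean**2 and variance
        a1 = lr**2 * noise / (z * (2.0 - z))   # steady-state variance when B = 1
        R = np.power(r2[:, None], t[None, :])  # shape (d, T): r_i**(2t)
        U = h @ R                          # sum_i h_i * mean_i(t)**2 (mean_0 = 1)
        G = (h * a1) @ (1.0 - R)           # sum_i h_i * var_i(t) when B = 1
        loss = 0.5 * (U[None, :] + G[None, :] / B)  # expected loss, shape (nB, T)
        hit = loss <= target
        steps = np.where(hit.any(axis=1), hit.argmax(axis=1), np.inf)
        best = np.minimum(best, steps)
    return best


# Hypothetical setup: 100 coordinates, noise covariance equal to the curvature.
d = 100
h = 1.0 / np.arange(1, d + 1)
c = h.copy()
batch_sizes = [2**k for k in range(2, 15)]
lrs = np.geomspace(1e-3, 1.9, 40)          # stays below the stability limit 2 / max(h_eff)
target = 0.01 * 0.5 * h.sum()              # 1% of the initial expected loss

sgd = steps_to_target(h, c, 0.0, batch_sizes, lrs, target)
precond = steps_to_target(h, c, 0.5, batch_sizes, lrs, target)
for batch, s0, s1 in zip(batch_sizes, sgd, precond):
    print(f"B={batch:6d}  SGD={s0:8.0f}  preconditioned={s1:8.0f}")
```

Under these assumptions, a larger batch can only lower the expected loss at every step, so the step counts never increase with B. For SGD they level off once the learning rate is capped by the stability limit of the highest-curvature direction; the preconditioned variant narrows the spread of effective curvatures and reaches a lower floor, so its step count can keep falling up to larger batch sizes. This mirrors the qualitative prediction described above, but the exact numbers depend entirely on the assumed spectrum, noise model, and target.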

Brief Biography

Guodong Zhang is a PhD candidate in the machine learning group at the University of Toronto, advised by Roger Grosse. His research lies at the intersection of machine learning, optimization, and Bayesian statistics. In particular, it focuses on understanding and improving algorithms for optimization, Bayesian inference, and multi-agent games in the context of deep learning. His work has been recognized with the Apple PhD fellowship, the Borealis AI fellowship, and many other scholarships. He has also interned at DeepMind and Google Brain.