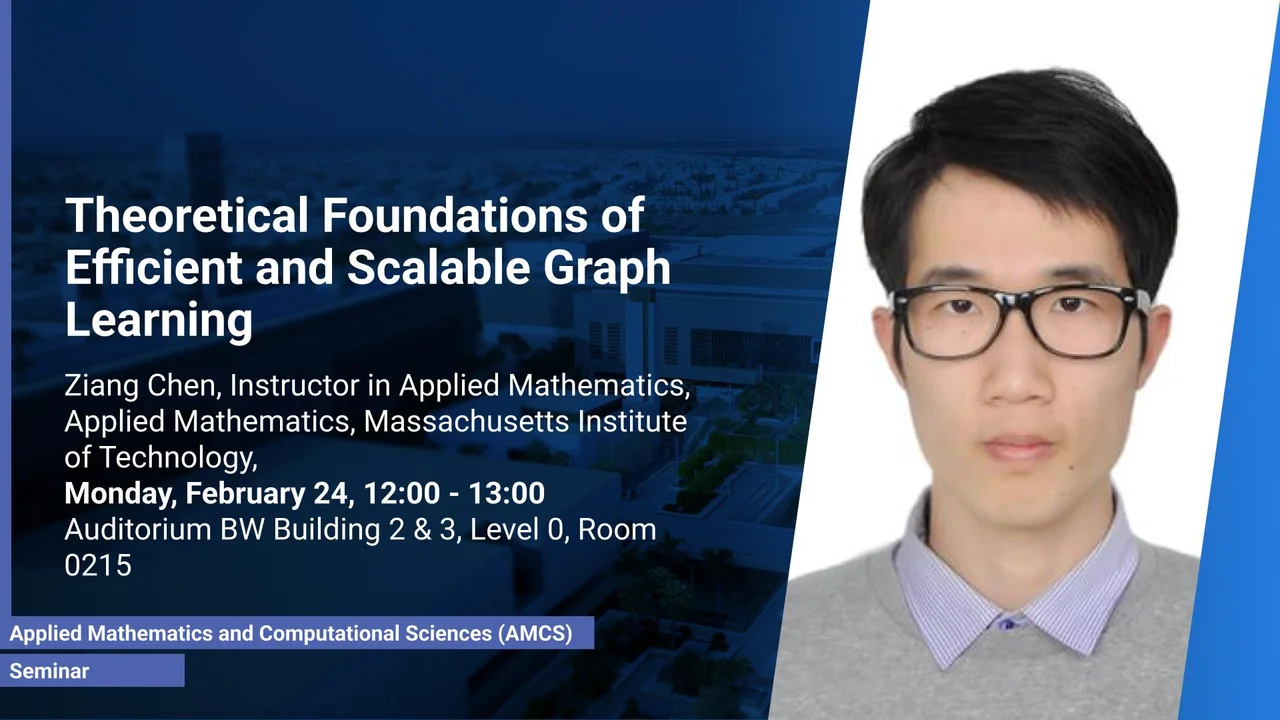

Theoretical Foundations of Efficient and Scalable Graph Learning

Auditorium between Buildings 2 and 3, L0 R0215

This talk addresses key challenges in graph neural networks (GNNs), including expressive power and model depth. It approaches them through theoretical analysis and corresponding solutions in three areas: the universal approximation of properties of (mixed-integer) linear programs, the strong expressive power of subgraph GNNs on graphs with bounded cycles, and the role of residual connections in mitigating oversmoothing and enabling deeper models.

Overview

Graph neural networks (GNNs) have achieved remarkable empirical success in processing and representing graph-structured data across diverse fields. However, fundamental challenges remain, including limitations in expressive power and model depth. This talk addresses these challenges through rigorous analysis and efficient solutions across three key areas of graph learning. First, we establish that message-passing GNNs and their variants can universally approximate certain critical properties of (mixed-integer) linear programs. Second, we demonstrate that subgraph GNNs exhibit strong expressive power on graphs with bounded cycles. Third, we show that residual connections provably mitigate the oversmoothing issue and enable greater model depth. These results not only advance the theoretical understanding of GNNs but also provide practical insights for real-world applications.
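The oversmoothing point can be made concrete with a small experiment. The NumPy sketch below is an illustration under stated assumptions, not the construction analyzed in the talk. It assumes purely linear propagation with the GCN-style normalized adjacency D̃^{-1/2}(A + I)D̃^{-1/2}, and it models the residual connection as an "initial residual" update x ← (1 − α)Âx + αx₀. The function names `normalized_adjacency`, `smoothness_gap`, and `propagate`, and the parameter `alpha`, are hypothetical. The residual architectures studied in the talk may differ.

```python
import numpy as np


def normalized_adjacency(adj: np.ndarray) -> np.ndarray:
    """GCN-style normalization with self-loops: D^{-1/2} (A + I) D^{-1/2}."""
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return d_inv_sqrt[:, None] * a_hat * d_inv_sqrt[None, :]


def smoothness_gap(x: np.ndarray, a_norm: np.ndarray) -> float:
    """Relative distance of node features x from the top eigenvector's span.

    A value near 0 means every feature column has collapsed onto a single
    direction over the nodes (oversmoothing). For this normalization, that
    direction is proportional to sqrt(degree with self-loop).
    """
    _, eigvecs = np.linalg.eigh(a_norm)  # eigenvalues come back in ascending order
    v = eigvecs[:, -1:]                  # eigenvector for the largest eigenvalue (= 1)
    residual = x - v @ (v.T @ x)         # remove the component along v
    return float(np.linalg.norm(residual) / np.linalg.norm(x))


def propagate(x0: np.ndarray, a_norm: np.ndarray, depth: int, alpha: float = 0.0) -> np.ndarray:
    """Apply `depth` linear propagation layers (no weights, no nonlinearity).

    alpha = 0: plain propagation x <- A x.
    alpha > 0: assumed initial-residual form x <- (1 - alpha) A x + alpha x0.
    """
    x = x0.copy()
    for _ in range(depth):
        x = (1.0 - alpha) * (a_norm @ x) + alpha * x0
    return x


# Example: a 6-node cycle graph with random 3-dimensional node features.
n = 6
adj = np.zeros((n, n))
for i in range(n):
    adj[i, (i + 1) % n] = adj[(i + 1) % n, i] = 1.0

a_norm = normalized_adjacency(adj)
rng = np.random.default_rng(0)
x0 = rng.standard_normal((n, 3))

gap0 = smoothness_gap(x0, a_norm)
plain = smoothness_gap(propagate(x0, a_norm, depth=200), a_norm)
resid = smoothness_gap(propagate(x0, a_norm, depth=200, alpha=0.2), a_norm)
print(f"input gap: {gap0:.3f}  plain (200 layers): {plain:.2e}  residual (200 layers): {resid:.3f}")
```

On this cycle graph, the non-leading eigenvalues of Â have magnitude at most 2/3. As a result, plain propagation drives the gap down to floating-point round-off. The initial-residual update instead converges to α(I − (1 − α)Â)^{-1}x₀, which keeps a nonzero share of every non-leading eigencomponent, so the gap stays bounded away from zero regardless of depth.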

Presenters

Dr. Ziang Chen, Instructor, Applied Mathematics, MIT, USA

Brief Biography

Ziang Chen is an Instructor in Applied Mathematics at the Massachusetts Institute of Technology. He earned his Ph.D. in Mathematics from Duke University in 2023 under the supervision of Jianfeng Lu, and also received an M.S. in Computer Science there. He previously obtained an M.S. in Applied Mathematics from Harvard University in 2020, as well as a B.S. in Computational Mathematics and a B.L. in Chinese Language and Literature from Peking University in 2019. His research lies at the intersection of applied mathematics, machine learning, and scientific computing, with multiple publications in top-tier applied mathematics journals and machine learning conferences. His work has been recognized with the ICCM Graduate Thesis Award and a nomination for the Rudin Prize.