Neural Operators: Theory, Architecture, and Applications for PDEs

Overview

Abstract

Neural operator methods offer a novel approach to learning the complex parameter-to-solution mappings that arise in intricate physical systems. In this talk, I will cover the foundations of neural operators, including both theoretical frameworks and algorithmic developments, along with several well-known neural operator architectures. I will also present our recent work on applying neural operators to multiscale partial differential equations (PDEs). To address the challenges of multiscale PDEs, we developed a neural operator with a hierarchical matrix architecture. This design computes features efficiently in a nested manner while keeping the computational cost linear. In addition, we introduced an empirical loss function to mitigate spectral bias in neural operator approximations of multiscale functions.

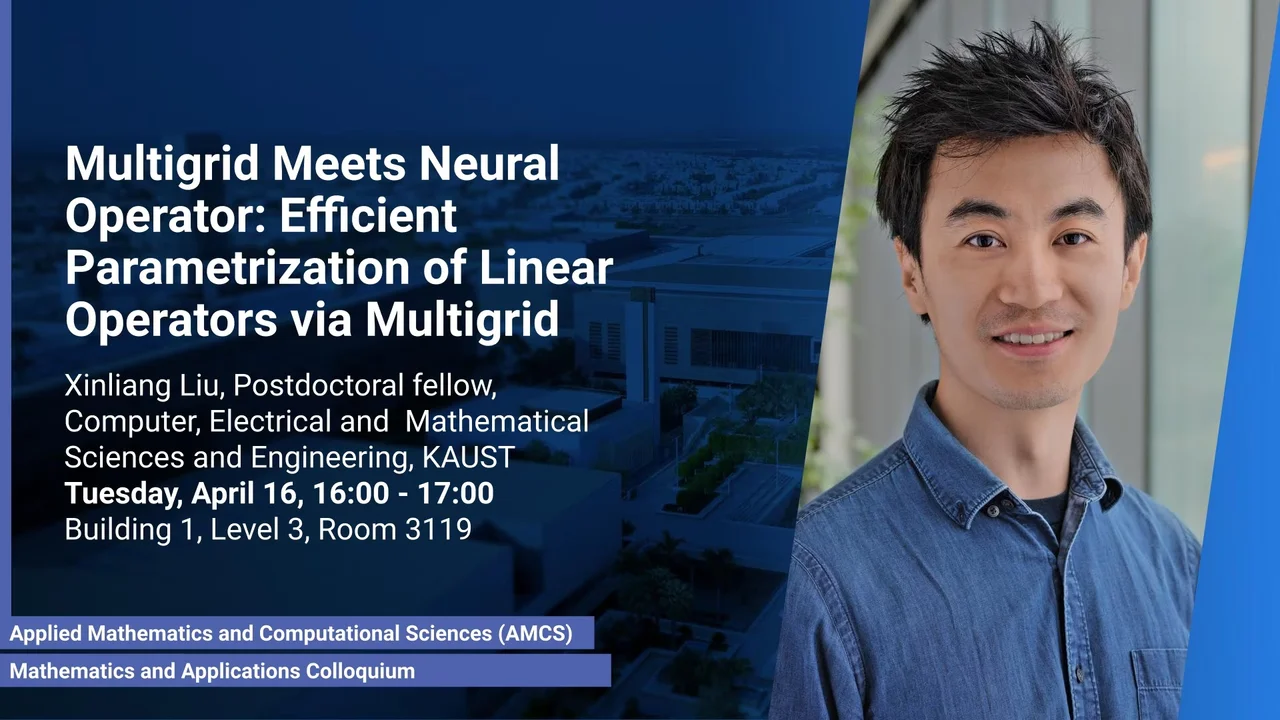

Brief Biography

Xinliang Liu is currently a postdoctoral researcher in the Computer, Electrical, and Mathematical Science and Engineering Division (CEMSE) at King Abdullah University of Science and Technology (KAUST), supervised by Prof. Jinchao Xu. His research focuses on operator learning, multiscale algorithms, and graph neural networks. He earned his Ph.D. in Computational Mathematics from Shanghai Jiao Tong University, Shanghai, China, in 2021, supervised by Prof. Lei Zhang. He joined KAUST in March 2022.