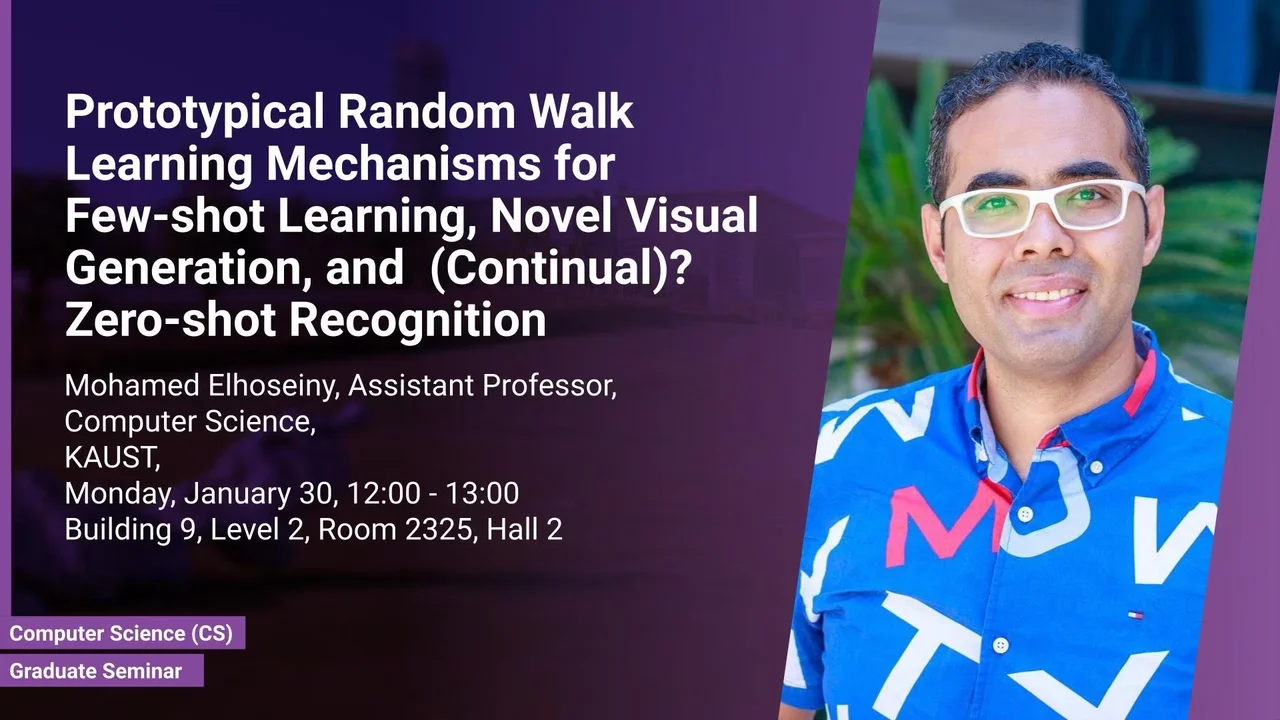

Prototypical Random Walk Learning Mechanisms for Few-shot Learning, Novel Visual Generation, and (Continual)? Zero-shot Recognition

Overview

Abstract

In this talk, we will define prototypical random walks, a mechanism we introduced to improve visual classification with limited data (few-shot learning) and then developed in a conceptually different way to facilitate novel image generation and unseen class recognition. More specifically, in the few-shot learning setting, we will show how to develop a random walk semi-supervised loss that enables the network to learn compact and well-separated representations. The idea is related to recent graph-based approaches for few-shot learning. However, we show that compact and well-separated representations of different classes can be achieved by a prototypical random walk mechanism without additional graph-NN parameters and without a transductive setting in which a collective test set is provided. We will then show how the mechanism can be adapted to learn novelty-aware representations that facilitate novel visual generation tasks. In this case, the prototypical random walk operates on samples produced by a generator network instead of the unlabeled data used in the few-shot learning task. We will then show how the mechanism can be further developed to be language-guided, using class semantic descriptions, and hence facilitate unseen class recognition, also known as zero-shot recognition. We will also demonstrate that the mechanism is effective in a continual learning setting of this task, where the distribution of seen and unseen classes changes dynamically over time.

Brief Biography

Mohamed Elhoseiny is an Assistant Professor of Computer Science at KAUST. Since Fall 2021, he has been a senior member of IEEE and a member of the international Summit community. Previously, he was a Visiting Faculty at Baidu Research (2019) and a Postdoc researcher at Facebook AI Research (2016-2019). Dr. Elhoseiny completed his Ph.D. in 2016 at Rutgers University, where he was part of the art & AI lab, and spent time at SRI International in 2014 and at Adobe Research (2015-2016). His primary research interest is in computer vision, especially efficient multimodal learning with limited data in zero/few-shot learning and Vision & Language. He is also interested in Affective AI, especially in understanding and generating novel visual content (e.g., art and fashion). He received an NSF Fellowship in 2014, the Doctoral Consortium award at CVPR’16, and the best paper award at ECCVW’18 on Fashion and Design. His zero-shot learning work was featured at the United Nations, and his creative AI work was featured in MIT Tech Review, New Scientist Magazine, Forbes Science, and HBO Silicon Valley. He has served as an Area Chair at major AI conferences, including CVPR21, ICCV21, IJCAI22, ECCV22, ICLR23, and CVPR23, and has organized CLVL workshops at ICCV’15, ICCV’17, ICCV’19, and ICCV’21.