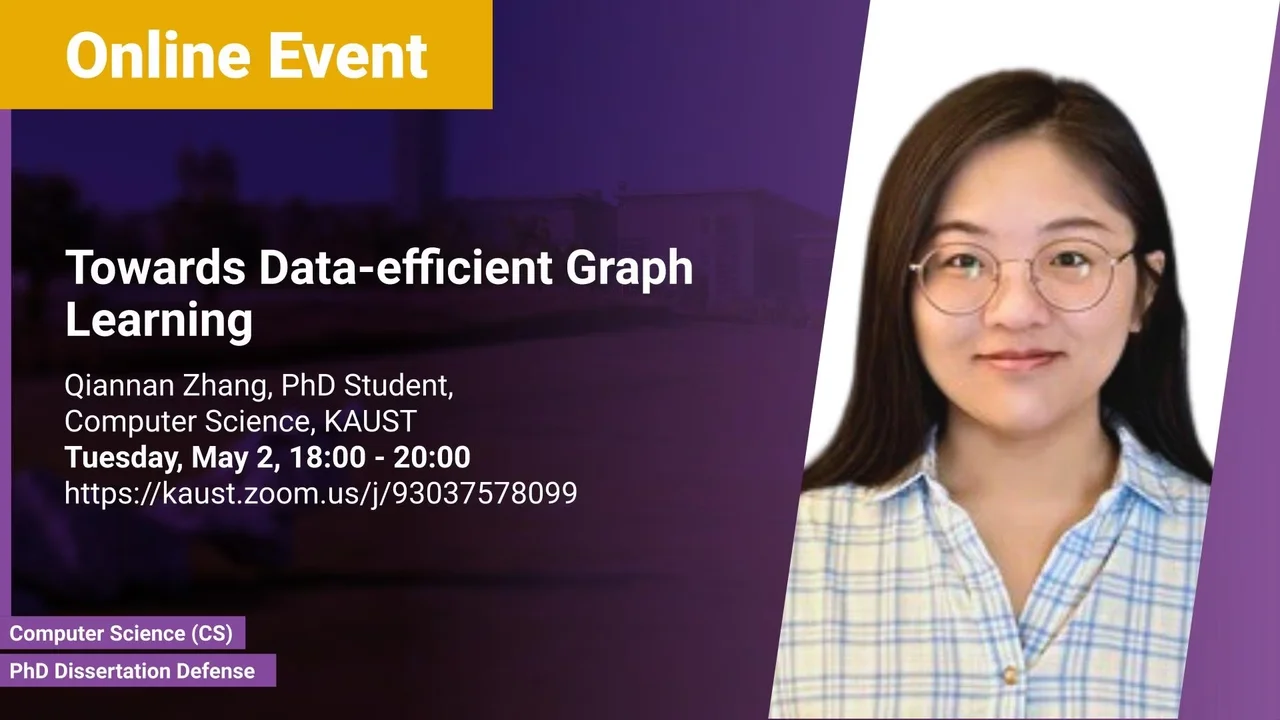

Towards Data-efficient Graph Learning


Overview

Abstract

Graph machine learning has emerged as a popular technique for modeling graph-structured data, with potential applications in fields such as social network analysis and molecular modeling. However, because data annotation is difficult and costly, annotated data is often scarce in practice, which can severely degrade the performance of graph learning models. To overcome this, models must be able to learn from small amounts of annotated data; this ability, known as data-efficient graph learning, is essential for achieving strong generalization in low-data regimes. The general idea of data-efficient graph learning is to transfer knowledge from related resources so as to obtain models that generalize to graph-related tasks with only a few annotations. Yet, learning a deep graph model that generalizes well to the target tasks in low-data regimes is non-trivial.

In this dissertation, we address three challenges of graph learning in low-data scenarios: handling graph structure and structural heterogeneity to extract transferable knowledge, selecting beneficial and fine-grained knowledge to transfer, and reducing the divergence between different resources to promote knowledge transfer. First, we propose a graph meta-learning framework that accounts for graph heterogeneity and exploits unlabeled data to improve model generalizability. Second, to enable knowledge selection for better knowledge transfer, we propose a solution for few-shot graph classification that leverages selective tasks, which preserve beneficial knowledge by coordinating with prompt tasks and reinforcement signals. Third, to promote model generalizability across diverse domains, we propose a cross-domain knowledge transfer model that evaluates domain shifts and explores cross-domain regulatory information. Experimental results demonstrate the effectiveness of the proposed methods in improving model generalization for data-efficient graph learning.

Brief Biography

Qiannan Zhang is a Ph.D. candidate in the Department of Computer Science, KAUST, working with Prof. Mikhail Moshkov. Before joining KAUST, she received her B.S. and M.S. degrees in communication engineering from Tianjin University. Her research interests lie in data mining and machine learning, in particular graph representation learning, self-supervised learning, and data-efficient graph learning.