Pop stats for big geodata

A universal high-performance computing interface allows popular statistical tools to run efficiently on large geospatial datasets.

About

Even the most computationally demanding statistical analyses on spatial data could run many times faster by using a universal high-performance computing (HPC) interface developed for one of the world’s most widely used statistics tools.

Free open-source software is the backbone of research across many scientific disciplines, and the R statistics environment is probably the most popular and well-developed open-source tool available to scientists. R can do almost everything a researcher might need on an everyday computer; its only real limitation is the time it takes to run on very large datasets.

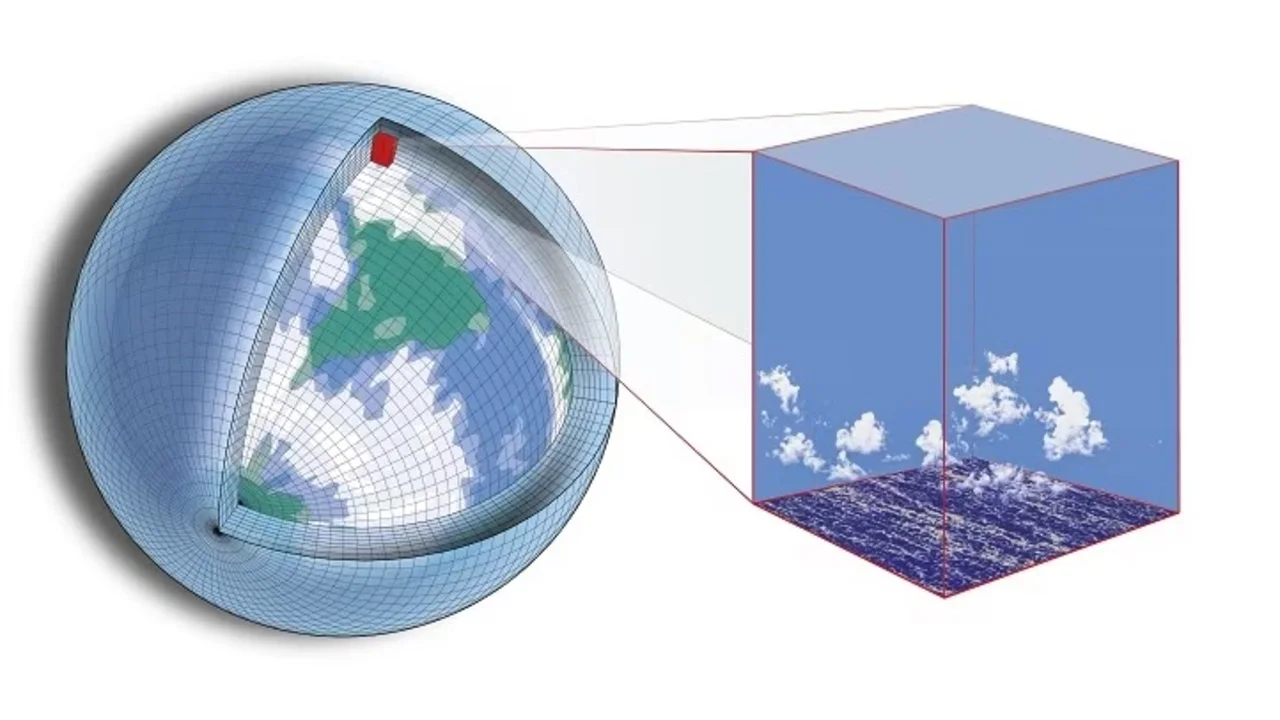

“R is a free programming language for statistical computing and graphics,” says KAUST researcher Sameh Abdulah. “However, existing R packages can really only handle small data sizes because each computation is run sequentially on a single processor—it is simply not feasible to use these packages to analyze large-scale climate and weather data, for example.”

Abdulah and his colleagues, including David Keyes, Marc Genton and Ying Sun from KAUST’s Statistics Program and the Extreme Computing Research Center, have been exploring ways to change this by extending existing statistical packages to harness the parallel processing power available in most desktop computers.

“We want to extend statistical tools like R to large-scale datasets by implementing them using high-performance computing languages,” says Abdulah. “Our goal is for any statistician to be able to run experiments in R with only a very abstract understanding of the high-performance hardware like graphics processors and distributed memory systems that they may be using.”
