Abstract

The interplay between Pontryagin’s Minimum Principle and Bellman’s Principle of Optimality is exploited to revisit optimal control problems. This interplay allows the optimal feedback to be characterized as the fixed point of a nonlinear static map and, in the finite-horizon case, yields a similar characterization of the optimal costate. It also reveals that the underlying Hamiltonian system can be externally stabilized, so that approximate optimal feedback strategies can be computed reliably. Applications of these ideas and tools to the design of novel algorithms for the solution of algebraic Riccati equations (AREs), to iterative learning, and to differential game theory are also discussed.

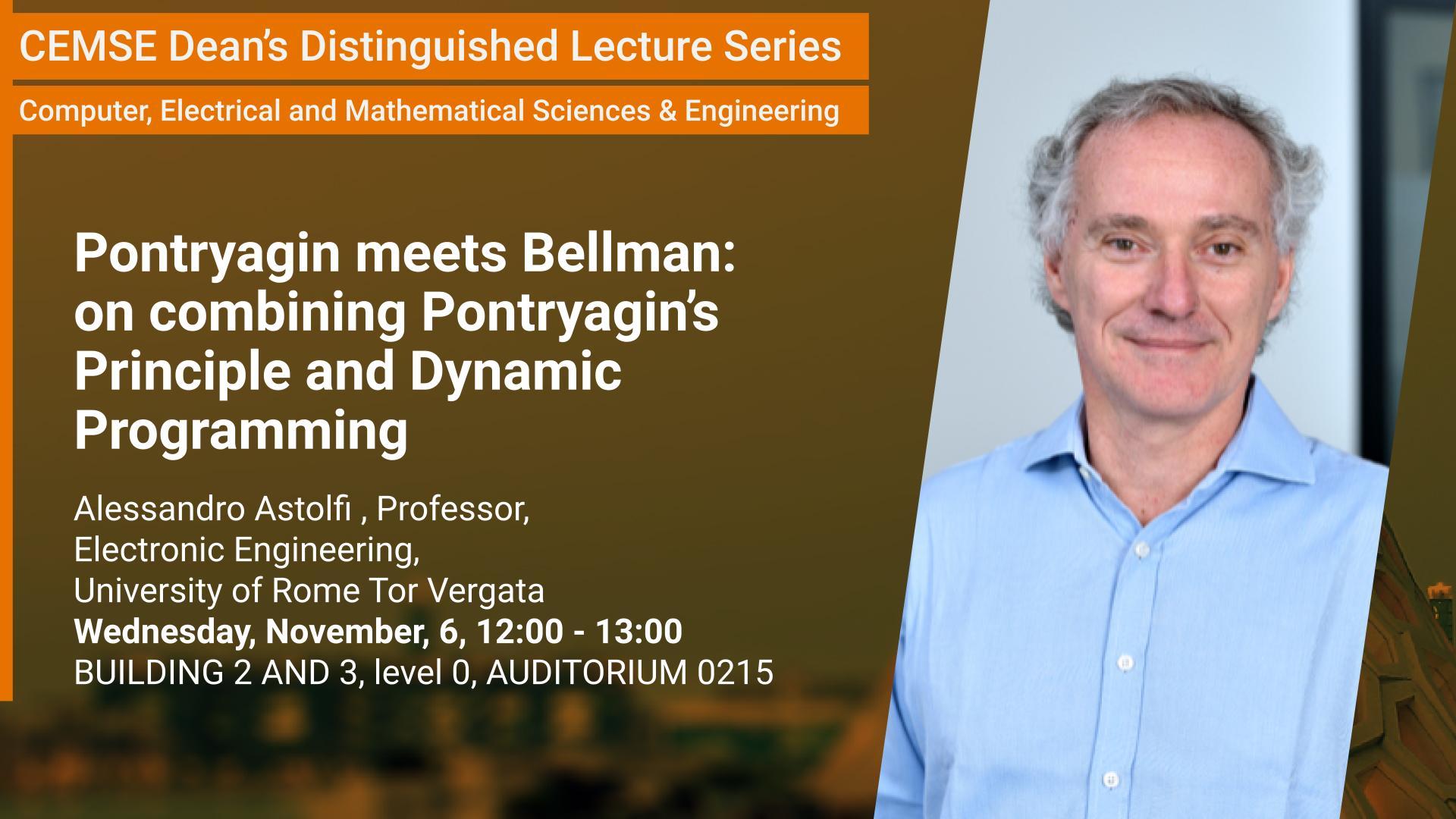

Brief Biography

Alessandro Astolfi was born in Rome, Italy, in 1967. He graduated in electrical engineering from the University of Rome in 1991. In 1992 he joined ETH Zurich, where in 1995 he obtained an M.Sc. in Information Theory and the Ph.D. degree with Medal of Honor, with a thesis on discontinuous stabilisation of nonholonomic systems. In 1996 he was awarded a Ph.D. by the University of Rome “La Sapienza” for his work on nonlinear robust control. Since 1996 he has been with the Electrical and Electronic Engineering Department of Imperial College London (UK), where he is currently Professor of Nonlinear Control Theory and College Consul. From 1998 to 2003 he was also an Associate Professor at the Department of Electronics and Information of the Politecnico di Milano. Since 2005 he has also been a Professor at the Dipartimento di Ingegneria Civile e Ingegneria Informatica, University of Rome Tor Vergata. His research interests focus on mathematical control theory and control applications, with special emphasis on discontinuous stabilisation, robust and adaptive control, observer design and model reduction.