Going to extremes to predict natural disasters

A systematic approach to selecting and configuring statistical models improves predictions of extreme events.


Predicting natural disasters remains one of the most challenging problems in simulation science, not only because such events are rare but also because only a few of the millions of entries in a dataset relate to extreme events. A working group at KAUST has recently developed a systematic method for comparing the accuracy of different types of simulation models for such prediction problems.

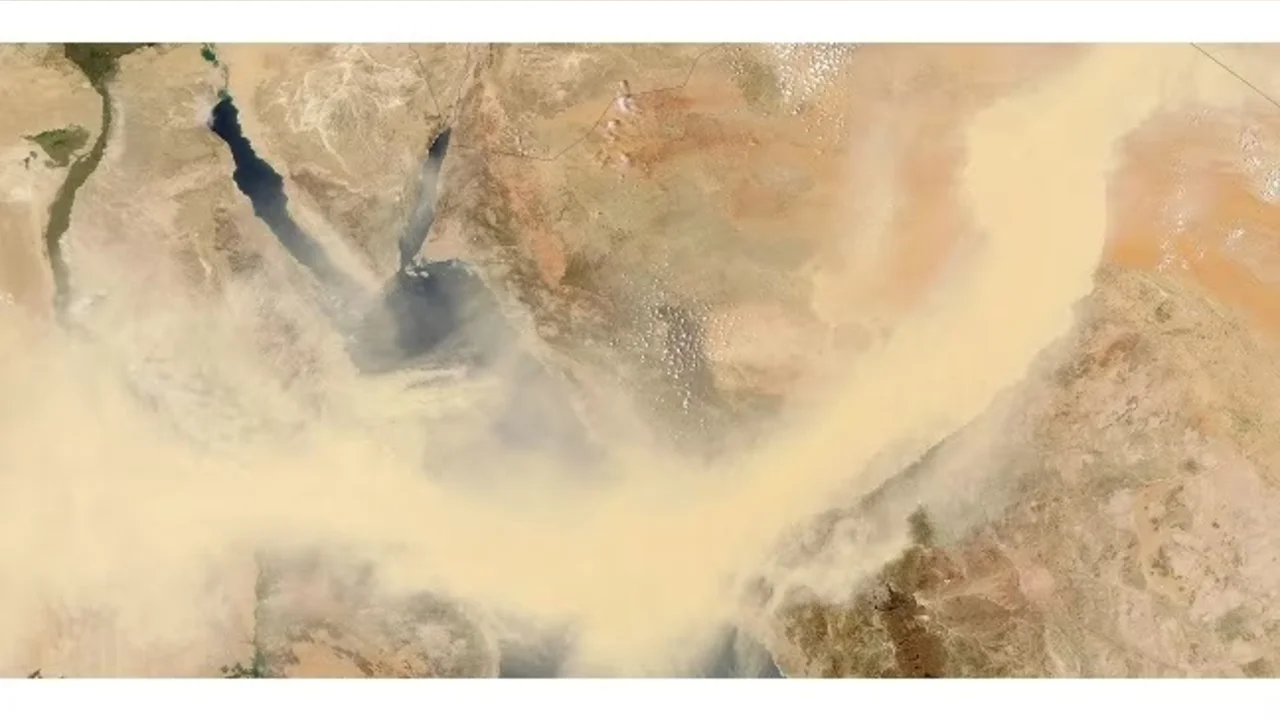

“Extreme events like dust storms, droughts and floods can affect communities and damage infrastructure,” says Sabrina Vettori, a doctoral student cosupervised by Marc Genton and Raphaël Huser. “Modeling and forecasting extremes is very challenging and requires flexible, yet interpretable, models with sound theoretical underpinning—criteria that are exponentially more difficult to meet as the data dimensionality increases,” she explains.

Increasing the dimensionality, that is, the number of observed variables (such as temperature and wind speed), dramatically increases the predictive power of a simulation model, but the statistical dexterity needed to correctly pick out and predict the combination of conditions leading to extreme events is immense.
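To give a rough sense of why higher dimensions are so demanding, the sketch below counts how many observations in a synthetic dataset are extreme in several variables at once. It is only an illustration under stated assumptions, not the KAUST group's method: the function name `joint_exceedance_counts`, the 99% threshold, and the use of independent uniform variables are all hypothetical choices. Real climate variables are dependent, and capturing that dependence is precisely what multivariate extreme value models are for.

```python
import random


def joint_exceedance_counts(n_obs=100_000, max_dim=4, quantile=0.99, seed=0):
    """Count observations whose first d variables ALL exceed the threshold, for d = 1..max_dim.

    Assumption: variables are independent and uniform on [0, 1), so the
    marginal threshold for a given quantile is simply the quantile itself.
    """
    rng = random.Random(seed)
    counts = [0] * max_dim
    for _ in range(n_obs):
        row = [rng.random() for _ in range(max_dim)]
        for d in range(1, max_dim + 1):
            if all(x > quantile for x in row[:d]):
                counts[d - 1] += 1
            else:
                # If the first d variables are not jointly extreme,
                # no larger set containing them can be either.
                break
    return counts


counts = joint_exceedance_counts()
for d, c in enumerate(counts, start=1):
    print(f"{d} variable(s): {c} joint exceedances out of 100000")
```

Under these assumptions, the expected number of joint exceedances shrinks by a factor of 100 with each added variable, so the data available for fitting a model to joint extremes thins out very quickly as the dimension grows.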

“We are exploring the boundaries of extreme value theory,” says Genton. “The aim of our work is to provide a greater understanding of the performance of existing estimators for modeling extreme events over multiple variables and to develop a new statistical method for nonparametric estimation in higher dimensions.”
