Cutting datasets down to size

A powerful statistical tool could significantly reduce the burden of analyzing very large datasets.


KAUST researchers have developed a new statistical tool that exploits the power of high-performance computing and could reduce the cost and improve the accuracy of analyzing large environmental and climate datasets.

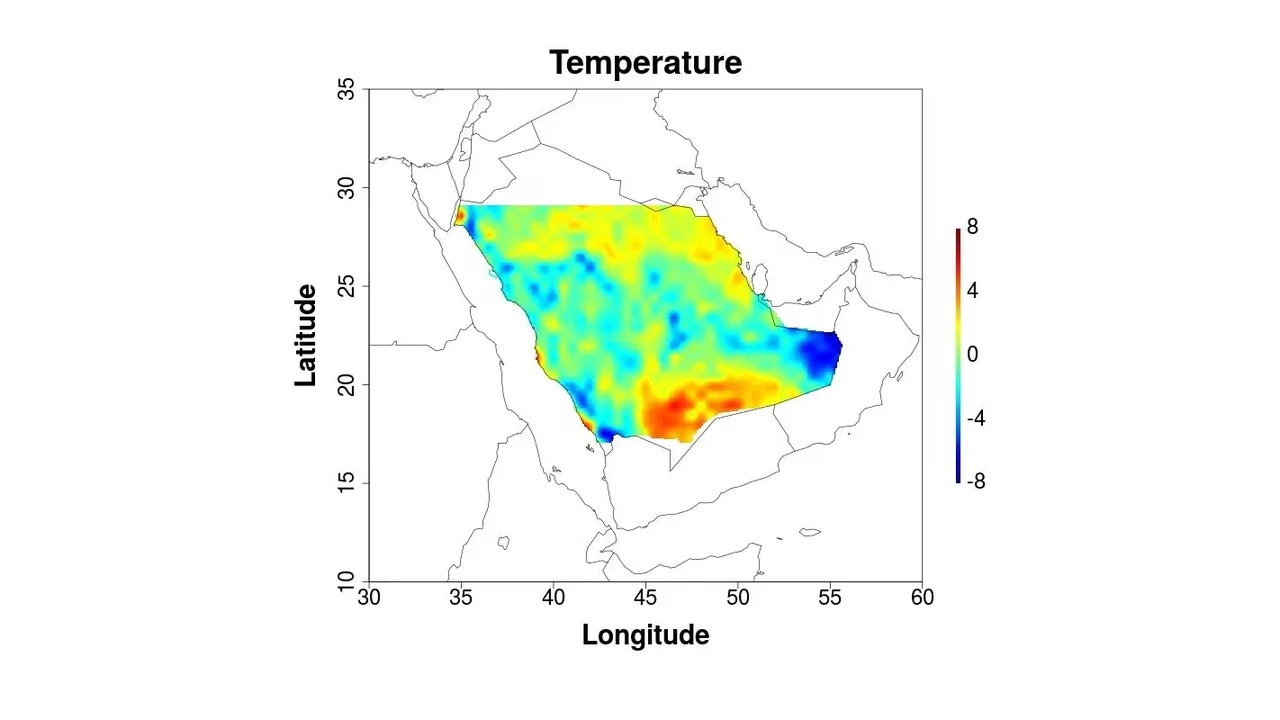

Datasets containing environmental and climate observations, such as temperature, wind speed and soil moisture, are often very large because of the high spatial resolution of the data. The cost of analyzing such datasets increases steeply with their size: for instance, increasing the size of a dataset by a factor of 10 drives up the cost of the computation by a factor of 1,000 and the memory requirements by a factor of 100, creating a computational strain on standard statistical software.
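The figures above are consistent with computation that grows with the cube of the dataset size and memory that grows with its square. The short Python sketch below works through that arithmetic. The cubic and quadratic exponents are an assumption inferred from the quoted factors, and the function name `scaled_cost` and the one-minute baseline runtime are illustrative only; none of this is taken from the ExaGeoStat software itself.

```python
def scaled_cost(size_factor: float) -> tuple[float, float]:
    """Return (compute_factor, memory_factor) for a dataset grown by size_factor.

    Assumption: compute grows cubically and memory quadratically with the
    number of observations, which matches the factors quoted in the article.
    """
    compute_factor = size_factor ** 3
    memory_factor = size_factor ** 2
    return compute_factor, memory_factor


# A dataset 10 times larger than the baseline.
compute, memory = scaled_cost(10)

# Hypothetical baseline: the smaller dataset takes one minute to analyze.
baseline_minutes = 1.0
hours = baseline_minutes * compute / 60

print(f"compute x{compute:.0f}, memory x{memory:.0f}, runtime ~{hours:.1f} h")
```

Under these assumptions, a tenfold larger dataset turns a one-minute analysis into roughly 1,000 minutes, or a little under 17 hours.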

This spurred postdoctoral fellow Sameh Abdulah to develop a standalone software framework through a collaboration between KAUST’s Extreme Computing Research Center (ECRC) and statisticians specializing in spatio-temporal dynamics and the environment.

The new framework, called Exascale GeoStatistics or ExaGeoStat, can process large geospatial environmental and climate datasets by employing high-performance computing architectures with a degree of concurrency not available through widely used statistical software.

“Existing statistical software frameworks are not able to fully exploit large datasets,” says Abdulah. “For example, a computation that would normally require one minute to complete would take nearly 17 hours if the dataset were just 10 times larger. This leads to compromises due to the limitations in computing power, forcing researchers to turn to approximation methods that cloud their interpretation of results.”
