KAUST researchers define the emerging field of high-performance statistical computing (HPSC)

KAUST researchers outline a new discipline for statistical computing at supercomputing scale.


A team of researchers from King Abdullah University of Science and Technology (KAUST), working with KAUST alumna Mary Salvaña, now a faculty member at the University of Connecticut in the U.S., has defined high-performance statistical computing (HPSC) as a new scientific discipline that unites modern statistical methodology with the capabilities of advanced supercomputing systems.

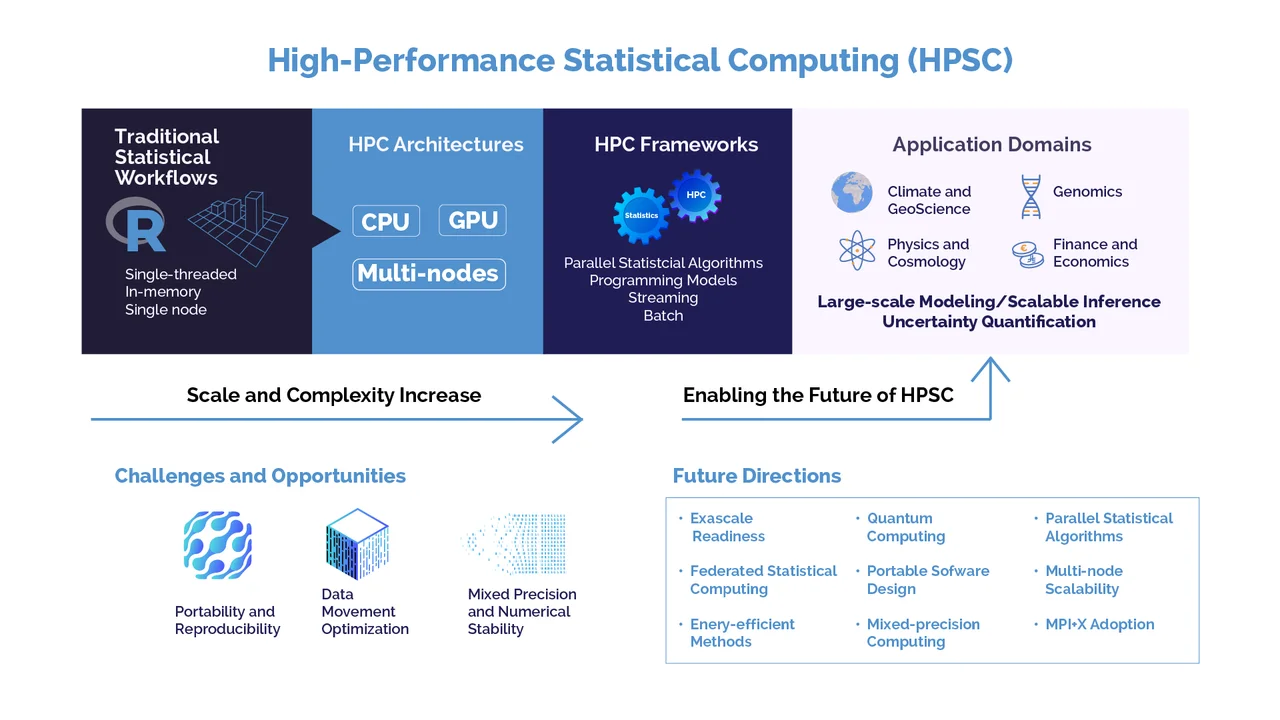

The team’s comprehensive review article, "High-Performance Statistical Computing (HPSC): Challenges, Opportunities, and Future Directions," outlines the conceptual foundations of this field and details how statistical practice must evolve to operate effectively at large computational scale. The work synthesizes more than six decades of progress in statistical and high-performance computing and positions HPSC as the fourth wave of statistical computing, offering the first roadmap for unifying modern statistical methods with exascale CPU–GPU systems.

The authors highlight that many widely used statistical algorithms were originally developed for single-processor environments and require redesign to operate reliably on large, parallel architectures. They note that, despite the widespread use of statistical software across scientific domains, the statistical computing community remains underrepresented on the world’s most powerful supercomputers, a gap that HPSC aims to address.

“Many statistical algorithms were built for a very different computing era,” said Marc Genton, Al-Khawarizmi Distinguished Professor of Statistics at KAUST and corresponding author of the paper. “As datasets grow in size and complexity, we need scalable approaches that align with the architecture of modern supercomputers,” he added.

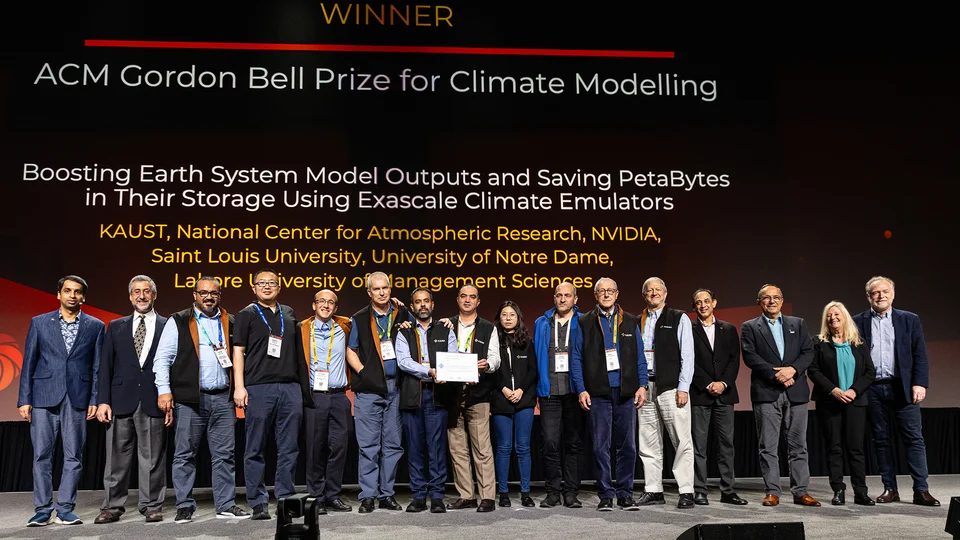

The review investigates advances in GPU acceleration, distributed likelihood estimation, mixed-precision numerical techniques and scalable algorithms now used in climate science, genomics, physics, economics and finance. It offers a systematic overview of HPSC activity across these fields and identifies shared computational principles that govern statistical inference at scale. The authors also illustrate how HPSC principles translate into practice through tools such as ExaGeoStat, a geostatistical modeling framework designed for large-scale spatial data and deployed on leading supercomputers worldwide. ExaGeoStat has also served as part of the computational workflow that contributed to the 2024 ACM Gordon Bell Prize in Climate Modeling.

As analyses scale across thousands of processing units, maintaining numerical stability, managing data movement and ensuring reproducibility all become increasingly difficult.

“HPSC gives statisticians a framework to think at a supercomputing scale,” said co-author Salvaña. “Our goal was to bring together ideas from statistics and high-performance computing (HPC) in a way that supports researchers working with today’s massive datasets.”

“Statistical computations can be highly sensitive to small numerical variations,” said Ying Sun, professor of statistics at KAUST. “Running analyses at supercomputing scale requires methods that preserve both accuracy and computational efficiency.”
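The sensitivity Sun describes, and the reproducibility concern that comes with spreading work across many processing units, can be seen in a toy example that is not taken from the paper. Floating-point addition is not associative, so adding the same numbers left to right on one processor and adding them in chunks across several workers can round differently. The sketch below simulates a parallel reduction serially in Python; the helper names `left_to_right_sum` and `chunked_sum` and the four-value dataset are hypothetical choices made purely for illustration.

```python
import math


def left_to_right_sum(values):
    """Add values one at a time, in order, as a single processor would."""
    total = 0.0
    for v in values:
        total += v
    return total


def chunked_sum(values, n_chunks):
    """Hypothetical helper simulating a parallel reduction: each 'worker'
    sums one contiguous chunk, then the partial sums are combined."""
    size = math.ceil(len(values) / n_chunks)
    partials = [left_to_right_sum(values[i:i + size])
                for i in range(0, len(values), size)]
    return left_to_right_sum(partials)


# Illustrative data: near 1e17 the gap between adjacent doubles is 16,
# so adding 1.0 to 1e17 (or to -1e17) is rounded away entirely.
data = [1e17, 1.0, -1e17, 1.0]

serial = left_to_right_sum(data)   # ((1e17 + 1) - 1e17) + 1 -> 1.0
parallel = chunked_sum(data, 2)    # (1e17 + 1) + (-1e17 + 1) -> 0.0
exact = math.fsum(data)            # correctly rounded sum -> 2.0

print(serial, parallel, exact)     # prints: 1.0 0.0 2.0
```

Each result is a valid rounding of a different sequence of operations, which is why changing the number of workers can change the answer. Common remedies include fixing the reduction order or using compensated or exact summation such as `math.fsum`, usually at some extra computational cost.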

Redesigning statistical methods for an exascale era

The authors note that many researchers in statistics and data science rely on tools such as R and Python, which require additional engineering to run effectively on large-scale systems. Bridging this gap will require new interfaces, training and open-source frameworks that support scalability and portability. The review outlines future research priorities for HPSC, including parallel algorithm design, communication-efficient methods, mixed-precision computing and performance-portable software capable of operating across heterogeneous architectures.

“The goal is to make high-performance computing more accessible to researchers who rely on statistical methods but may not have experience with large-scale systems,” said David Keyes, professor of applied mathematics and computational science at KAUST.

Rather than reporting a single study, the paper provides a field-defining conceptual contribution that organizes current work, identifies critical gaps and establishes a global research agenda for HPSC. The authors note that HPSC supports advances in climate and environmental modeling, large-scale genomics, pandemic-scale inference, particle physics and economic forecasting. They argue that HPSC will be essential for exploiting future exascale and zettascale systems and for enabling the next generation of computational science applications. The work also aligns with Saudi Arabia’s national priorities in high-performance computing, AI and digital innovation.

Building a community for the next generation of statistical computing

Beyond the publication, the researchers are establishing an international community to advance HPSC.

“Building a community around HPSC is essential for developing tools and standards that work across modern architectures,” said Sameh Abdulah, a senior research scientist at KAUST. “By sharing real-world workloads and testing methods on advanced systems, we can accelerate progress for the entire field.”

This initiative is gaining momentum, with an invited HPSC session accepted for the Joint Statistical Meetings (JSM) 2026, one of the world’s largest statistical conferences, and ongoing coordination through the HPSC for Science platform. The community supports shared benchmarks, training opportunities and the collaborative development of scalable statistical methods.

Reference

Abdulah, S., Salvaña, M. L. O., Sun, Y., Keyes, D. E., & Genton, M. G. High-performance statistical computing (HPSC): Challenges, opportunities, and future directions. Wiley Interdisciplinary Reviews: Computational Statistics 17, e70052 (2025).