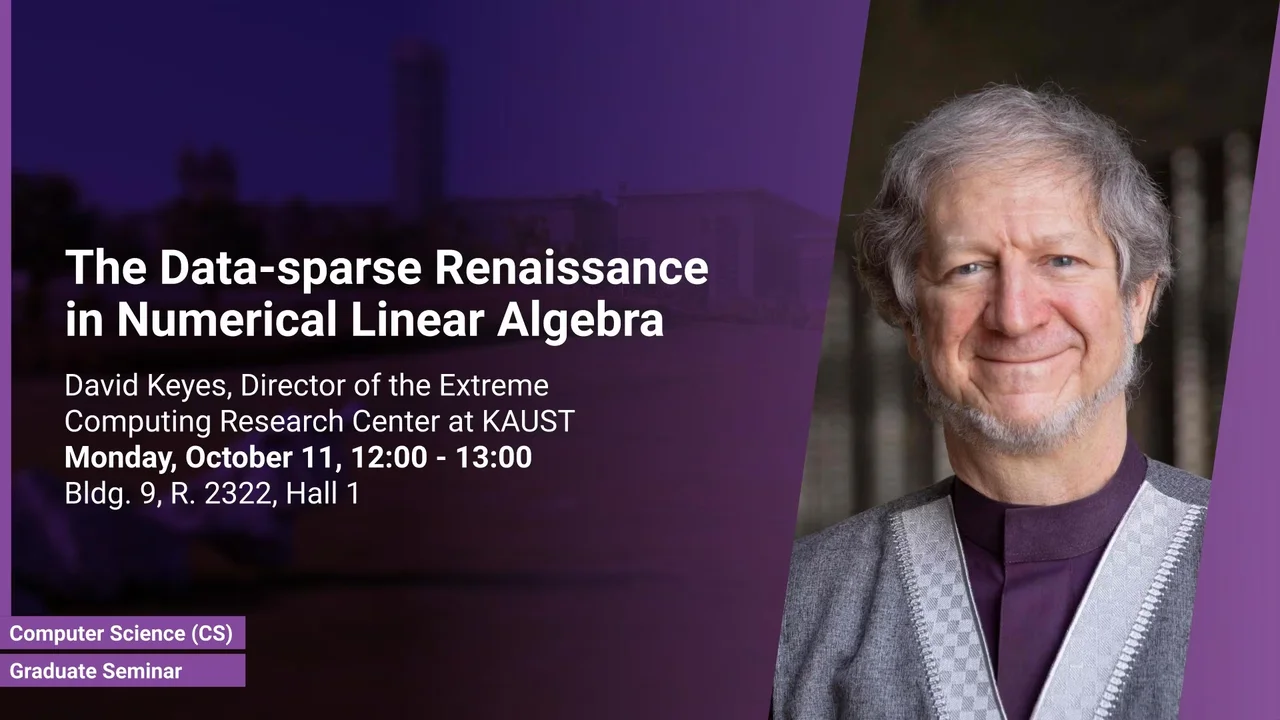

The Data-sparse Renaissance in Numerical Linear Algebra

B9 R2322 H1

A traditional goal of algorithmic optimality, squeezing out operations, has been superseded because of evolution in architecture. Algorithms must now squeeze memory, data transfers, and synchronizations, while extra operations on locally cached data cost relatively little time or energy. Hierarchically low-rank matrices realize a rarely achieved combination of optimal storage complexity and high-computational intensity in approximating a wide class of formally dense operators that arise in exascale applications.

Overview

Abstract

A traditional goal of algorithmic optimality, squeezing out operations, has been superseded because of evolution in architecture. Algorithms must now squeeze memory, data transfers, and synchronizations, while extra operations on locally cached data cost relatively little time or energy. Hierarchically low-rank matrices realize a rarely achieved combination of optimal storage complexity and high-computational intensity in approximating a wide class of formally dense operators that arise in exascale applications. They may be regarded as algebraic generalizations of the fast multipole method. Methods based on hierarchical tree-based data structures and their simpler cousins, tile low-rank matrices, are well suited for early exascale architectures, which are provisioned for high processing power relative to memory capacity and memory bandwidth. These data-sparse algorithms are ushering in a renaissance of numerical linear algebra. Modules of a KAUST-developed software toolkit, Hierarchical Computations on Manycore Architectures (HiCMA) illustrate these features on several applications. Early modules of this open-source project are distributed in software libraries of major computer vendors. A recent addition, H2Opus, from the KAUST PhD thesis of Wajih Boukaram, extends H2 hierarchical matrix operations to distributed memory and GPUs. The seminar will be prefaced by a brief introduction to current students and alumni of Keyes’ group at KAUST.

Brief Biography

David Keyes directs the Extreme Computing Research Center at KAUST, where he was founding Dean in 2009, and holds appointments in Applied Mathematics, Computer Science, and Mechanical Engineering. He works at the interface between parallel computing and partial differential equations and statistics, with a current focus on exploiting data sparsity. Keyes led scalable solver projects in the SciDAC and ASCI programs of the US DoE, directed university collaboration programs for US DoE and NASA institutes, and taught at Columbia, Old Dominion, and Yale. He is a Fellow of SIAM, AMS, and AAAS. He has been awarded the ACM Gordon Bell Prize, the IEEE Sidney Fernbach Award, and the SIAM Prize for Distinguished Service to the Profession. He earned a B.S.E. in Aerospace and Mechanical Sciences from Princeton and a Ph.D. in Applied Mathematics from Harvard.