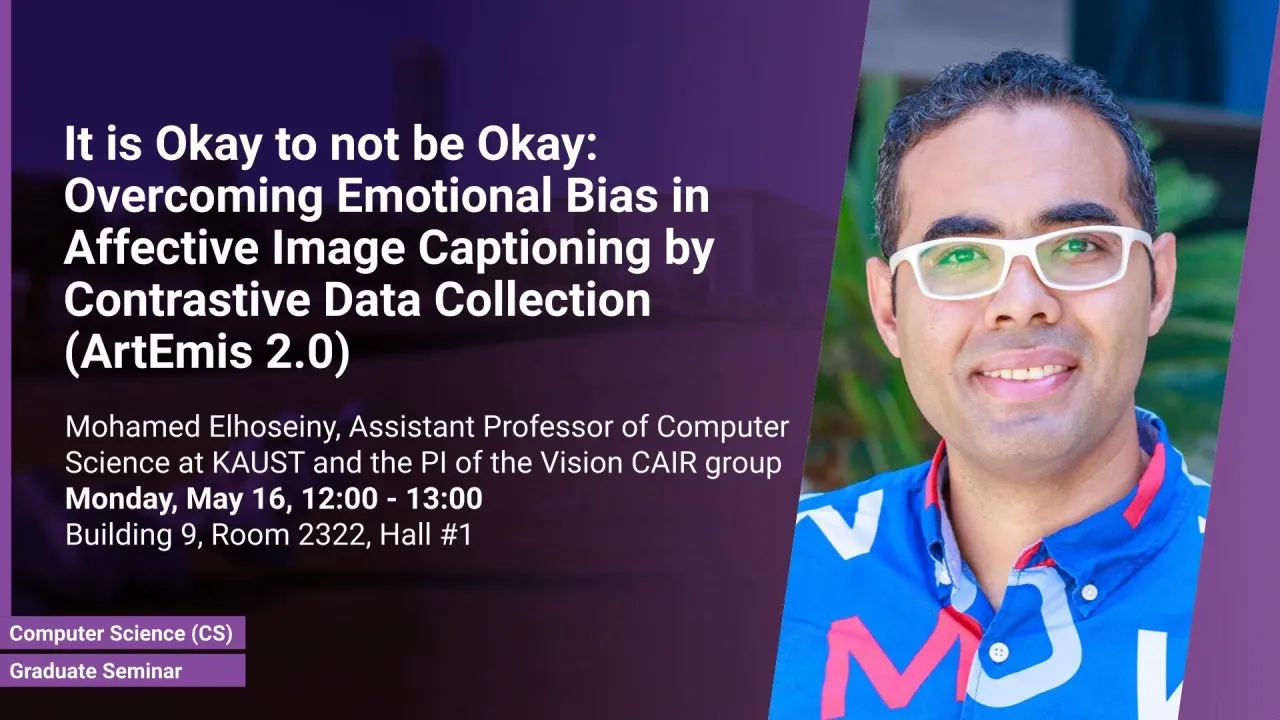

It is Okay to not be Okay: Overcoming Emotional Bias in Affective Image Captioning by Contrastive Data Collection (ArtEmis 2.0)

B9 R2322 H1

Overview

Abstract

Datasets that capture the connection between vision, language, and affection are limited, causing a lack of understanding of the emotional aspect of human intelligence. As a step in this direction, the ArtEmis dataset was recently introduced as a large-scale dataset of emotional reactions to images along with language explanations of these chosen emotions. We observed a significant emotional bias towards instance-rich emotions, making trained neural speakers less accurate in describing under-represented emotions. We show that collecting new data in the same way is not effective in mitigating this emotional bias. To remedy this problem, we propose a contrastive data collection approach that balances ArtEmis with a new complementary dataset in which each pair of similar images has contrasting emotions (one positive and one negative). We collected 260,533 instances using the proposed method and combined them with ArtEmis, creating a second iteration of the dataset. The new combined dataset, dubbed ArtEmis v2.0, has a balanced distribution of emotions, with explanations revealing finer details in the associated painting. Our experiments show that neural speakers trained on the new dataset improve the CIDEr and METEOR evaluation metrics by 20% and 7%, respectively, compared to the biased dataset. Finally, we show that the per-emotion performance of neural speakers improves across all emotion categories, and significantly so on under-represented emotions. The collected dataset and code are available at https://artemisdataset-v2.org.
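To make the pairing idea in the abstract concrete, here is a minimal sketch of how a similar image with the opposite emotional polarity might be proposed for each image. It is an illustration under stated assumptions, not the authors' collection pipeline (which relies on human annotators): the function names, the toy feature vectors, the use of cosine similarity, and the split of labels into `POSITIVE` and `NEGATIVE` sets are all hypothetical.

```python
import math

# Assumed grouping of emotion labels by polarity (illustrative only).
POSITIVE = {"amusement", "awe", "contentment", "excitement"}
NEGATIVE = {"anger", "disgust", "fear", "sadness"}


def polarity(emotion):
    """Return +1 for a positive emotion label and -1 for a negative one."""
    if emotion in POSITIVE:
        return 1
    if emotion in NEGATIVE:
        return -1
    raise ValueError(f"unknown emotion label: {emotion}")


def cosine(u, v):
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def contrastive_pairs(items):
    """For each (image_id, features, emotion) item, pick the most similar
    image whose emotion has the opposite polarity.

    Returns a list of (image_id, paired_image_id) tuples; images with no
    opposite-polarity candidate are skipped.
    """
    pairs = []
    for image_id, features, emotion in items:
        candidates = [
            (cosine(features, other_features), other_id)
            for other_id, other_features, other_emotion in items
            if polarity(other_emotion) == -polarity(emotion)
        ]
        if candidates:
            _, best_id = max(candidates)
            pairs.append((image_id, best_id))
    return pairs


# Toy example with made-up 2-D features.
items = [
    ("a", [1.0, 0.0], "awe"),
    ("b", [0.9, 0.1], "sadness"),
    ("c", [0.0, 1.0], "fear"),
]
print(contrastive_pairs(items))
```

In this toy run, image `a` (positive) is paired with `b`, its closest negative neighbour, rather than with the dissimilar `c`. In an actual collection setting the emotion of the paired image would come from annotators rather than from existing labels.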

Brief Biography

Mohamed Elhoseiny is an assistant professor of Computer Science at KAUST and the PI of the Vision CAIR group. He has been a senior member of IEEE and a member of the international Summit community since Fall 2021. Previously, he was Visiting Faculty at the Stanford Computer Science Department (2019-2020), Visiting Faculty at Baidu Research (2019), and a Postdoctoral Researcher at Facebook AI Research (2016-2019). Dr. Elhoseiny completed his Ph.D. in 2016 at Rutgers University, where he was part of the Art & AI Lab, and spent time at SRI International in 2014 and at Adobe Research (2015-2016). His primary research interest is in computer vision, especially efficient multimodal learning with limited data in zero/few-shot learning and Vision & Language. He is also interested in Affective AI, especially in understanding and generating novel visual content (e.g., art and fashion). He received an NSF Fellowship in 2014, the Doctoral Consortium award at CVPR’16, and the best paper award at ECCVW’18 on Fashion and Design. His zero-shot learning work was featured at the United Nations, and his creative AI work was featured in MIT Tech Review, New Scientist Magazine, Forbes Science, and HBO Silicon Valley. He has served as an Area Chair at major AI conferences including CVPR’21, ICCV’21, IJCAI’22, and ECCV’22, and has organized CLVL workshops at ICCV’15, ICCV’17, ICCV’19, and ICCV’21.

Website: http://www.mohamed-elhoseiny.com/
Group website: https://cemse.kaust.edu.sa/vision-cair