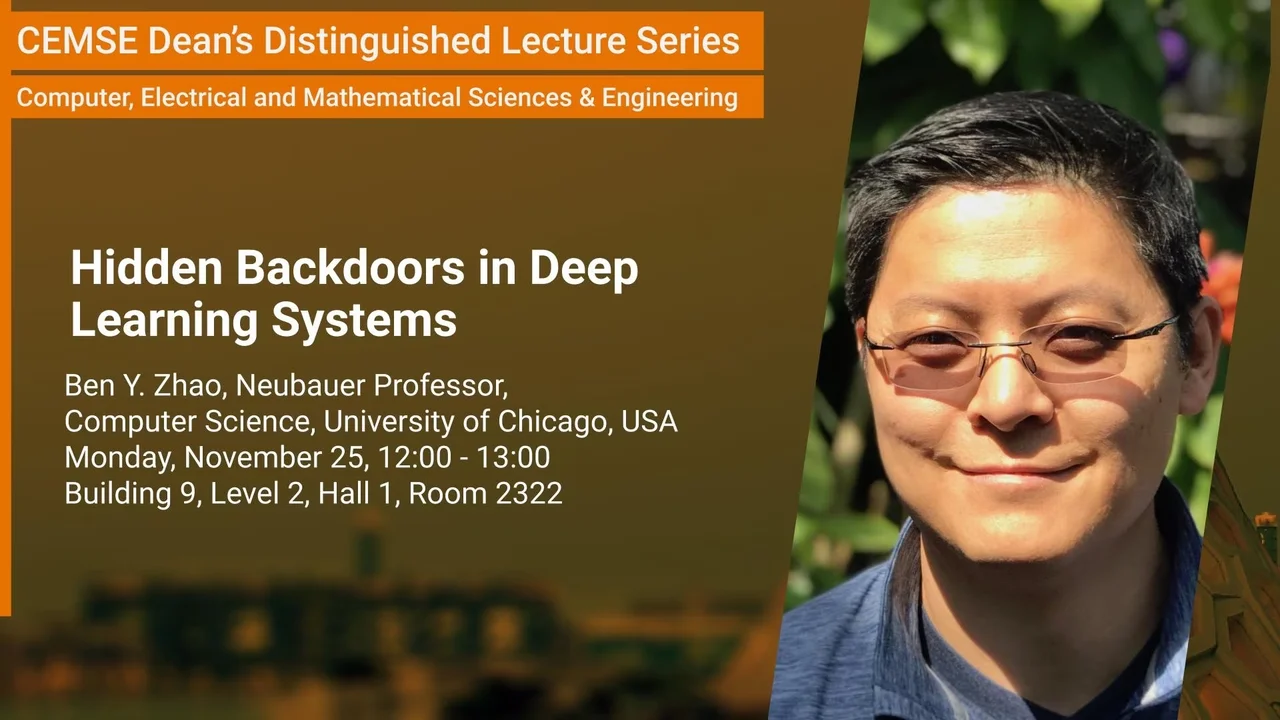

Hidden Backdoors in Deep Learning Systems

Overview

Abstract

Today’s machine learning systems are largely based on deep learning (deep neural networks, or DNNs), which are complex mathematical constructs trained on millions of data samples. Their complexity makes them difficult to understand and their behavior hard to predict. These properties pave the way for a new type of threat, often referred to as backdoor or “Trojan” attacks. In a backdoor attack, a malicious party corrupts a deep learning model (either at initial training time or later) to embed hidden classification rules. These rules do not interfere with normal classification unless an unusual “trigger” is applied to the input, in which case the model produces unexpected (and likely incorrect) results. For example, a facial recognition model with a backdoor might recognize anyone wearing a pink earring as Elon Musk. Backdoor attacks have been validated in a number of image classification applications and are difficult to detect in practice.
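The abstract does not say how the model is corrupted. One common way to picture it is training-data poisoning: a small trigger pattern is stamped onto a fraction of the training images, and those images are relabeled to the attacker's target class. The sketch below illustrates that idea only. The function names, the square corner patch, and the poisoning rate are all hypothetical choices made for illustration, not details from the talk.

```python
import random


def stamp_trigger(image, patch_value=1.0, patch_size=2):
    """Return a copy of a 2-D grayscale image (list of rows) with a
    square patch written into its bottom-right corner.

    The patch stands in for the "trigger"; its shape and position are
    assumptions made for this sketch.
    """
    stamped = [row[:] for row in image]
    height, width = len(stamped), len(stamped[0])
    for r in range(height - patch_size, height):
        for c in range(width - patch_size, width):
            stamped[r][c] = patch_value
    return stamped


def poison_dataset(samples, target_label, rate=0.1, seed=0):
    """Stamp the trigger on a fraction of (image, label) samples and
    relabel those samples to target_label. Other samples are unchanged.
    """
    rng = random.Random(seed)
    n_poison = int(len(samples) * rate)
    poisoned_idx = set(rng.sample(range(len(samples)), n_poison))
    result = []
    for i, (image, label) in enumerate(samples):
        if i in poisoned_idx:
            result.append((stamp_trigger(image), target_label))
        else:
            result.append((image, label))
    return result
```

A model trained on such a dataset can learn the ordinary classes from the clean samples while also learning to associate the patch with the target label, which is why the backdoor stays hidden until the trigger appears.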

In this talk, I will describe two recent results on detecting and understanding backdoor attacks on deep learning systems. I will first present Neural Cleanse (IEEE S&P 2019), the first robust tool to detect a wide range of backdoors in deep learning models. We use the idea of perturbation distances between classification labels to detect when a backdoor trigger has created a shortcut to misclassification into a particular label. Second, I will summarize our new work on Latent Backdoors (CCS 2019), a stronger type of backdoor attack that is more difficult to detect and survives retraining in commonly used transfer learning systems. Latent backdoors are robust and stealthy, even against the latest detection tools (including Neural Cleanse).
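The perturbation-distance idea can be illustrated with a simple outlier test. Suppose that, for each label, we already have the size of the smallest input perturbation that pushes inputs into that label. A backdoored label should need a much smaller perturbation than the others, because the trigger acts as a shortcut. The sketch below flags such labels using the median absolute deviation (MAD). The abstract does not specify the statistic, so the MAD test, the threshold of 2, and the sample numbers are assumptions made for illustration. The step that finds the minimal perturbations requires optimization against a trained model and is not shown.

```python
from statistics import median


def anomaly_indices(norms):
    """Map each label to |norm - median| / (1.4826 * MAD).

    `norms` maps label -> size of the smallest perturbation found for
    that label. The constant 1.4826 scales the MAD so that it is
    comparable to a standard deviation for normally distributed data.
    """
    values = list(norms.values())
    med = median(values)
    mad = 1.4826 * median([abs(v - med) for v in values])
    if mad == 0:
        # All deviations are (nearly) identical: nothing stands out.
        return {label: 0.0 for label in norms}
    return {label: abs(v - med) / mad for label, v in norms.items()}


def flag_suspicious_labels(norms, threshold=2.0):
    """Return labels whose perturbation size is abnormally SMALL."""
    med = median(list(norms.values()))
    indices = anomaly_indices(norms)
    return sorted(
        label
        for label, v in norms.items()
        if v < med and indices[label] > threshold
    )


# Made-up perturbation sizes for six labels; label 3 needs far less
# perturbation than the rest.
example_norms = {0: 95.0, 1: 102.0, 2: 98.0, 3: 12.0, 4: 105.0, 5: 99.0}
print(flag_suspicious_labels(example_norms))
```

Only the small side of the distribution is flagged, since an unusually large perturbation does not suggest a shortcut.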

Brief Biography

Ben Zhao is the Neubauer Professor of Computer Science at the University of Chicago. He received his Ph.D. from Berkeley (2004) and his BS from Yale (1997). He is an ACM Distinguished Scientist and a recipient of the NSF CAREER award, MIT Technology Review's TR-35 Award (Young Innovators Under 35), ComputerWorld Magazine's Top 40 Tech Innovators award, Google Faculty Award, and IEEE ITC Early Career Award. His work has been covered by media outlets such as Scientific American, New York Times, Boston Globe, LA Times, MIT Tech Review, and Slashdot. He has authored more than 160 publications in the areas of security and privacy, networked systems, wireless networks, data mining, and HCI (H-index > 60). He recently served as PC chair for the World Wide Web Conference (WWW 2016) and the Internet Measurement Conference (IMC 2018), and is a general co-chair for HotNets 2020.