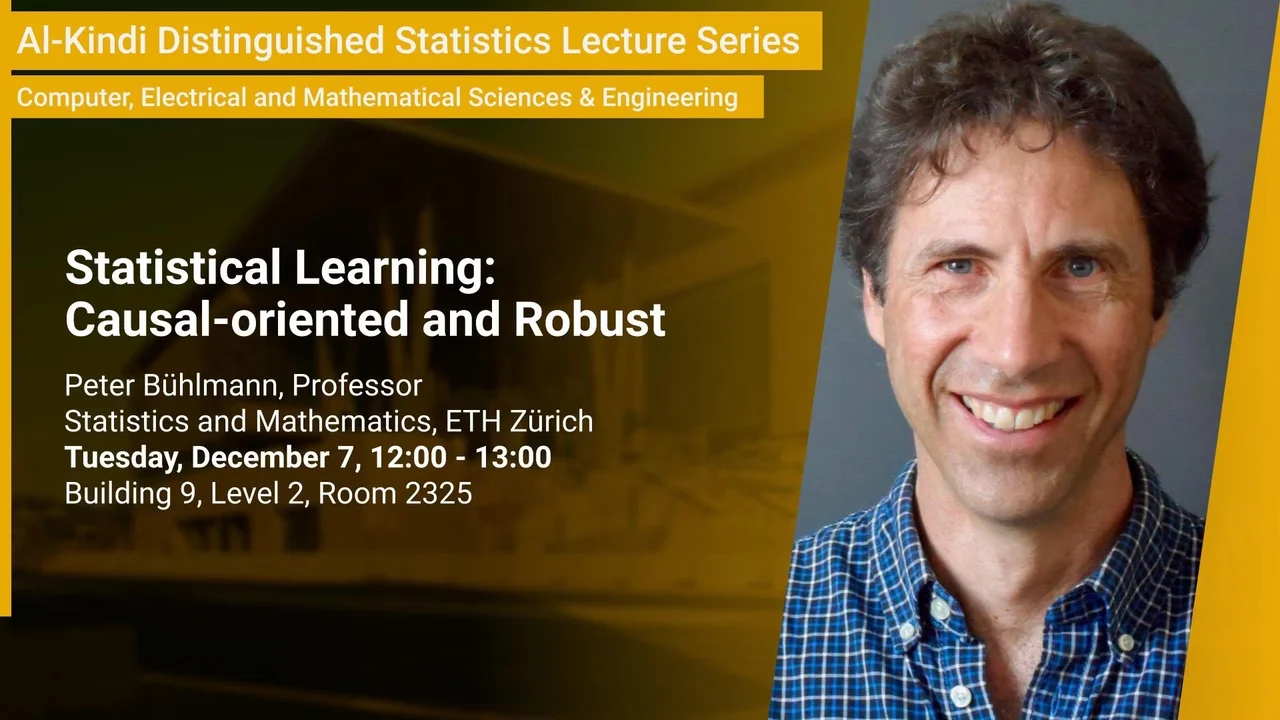

Statistical Learning: Causal-oriented and Robust

Overview

Abstract

Reliable, robust and interpretable machine learning is a major emerging theme in data science and artificial intelligence, complementing the development of pure black-box prediction algorithms. Looking through the lens of statistical causality and exploiting a probabilistic invariance property opens up new paths and opportunities for enhanced interpretation, robustness and external validity, with wide-ranging prospects for various applications.

Brief Biography

Peter Bühlmann studied mathematics at ETH Zürich, where he received his doctoral degree in 1993. He was a Postdoctoral Fellow from 1994 to 1995 and a Neyman Assistant Professor from 1995 to 1997 at UC Berkeley, before returning to ETH Zürich in 1997. From 2013 to 2017, he was Chair of the Department of Mathematics at ETH Zürich. He is a Fellow of the Institute of Mathematical Statistics and of the American Statistical Association, and was Co-Editor of the Annals of Statistics from 2010 to 2012. His recent honors include a Doctor Honoris Causa from the Université Catholique de Louvain in 2017, election as Neyman Lecturer 2018 by the Institute of Mathematical Statistics, the Rothschild Lecture 2018 at the Newton Institute (Cambridge), the Guy Medal in Silver 2018 from the Royal Statistical Society, and a plenary lecture at the European Congress of Mathematics 2021.