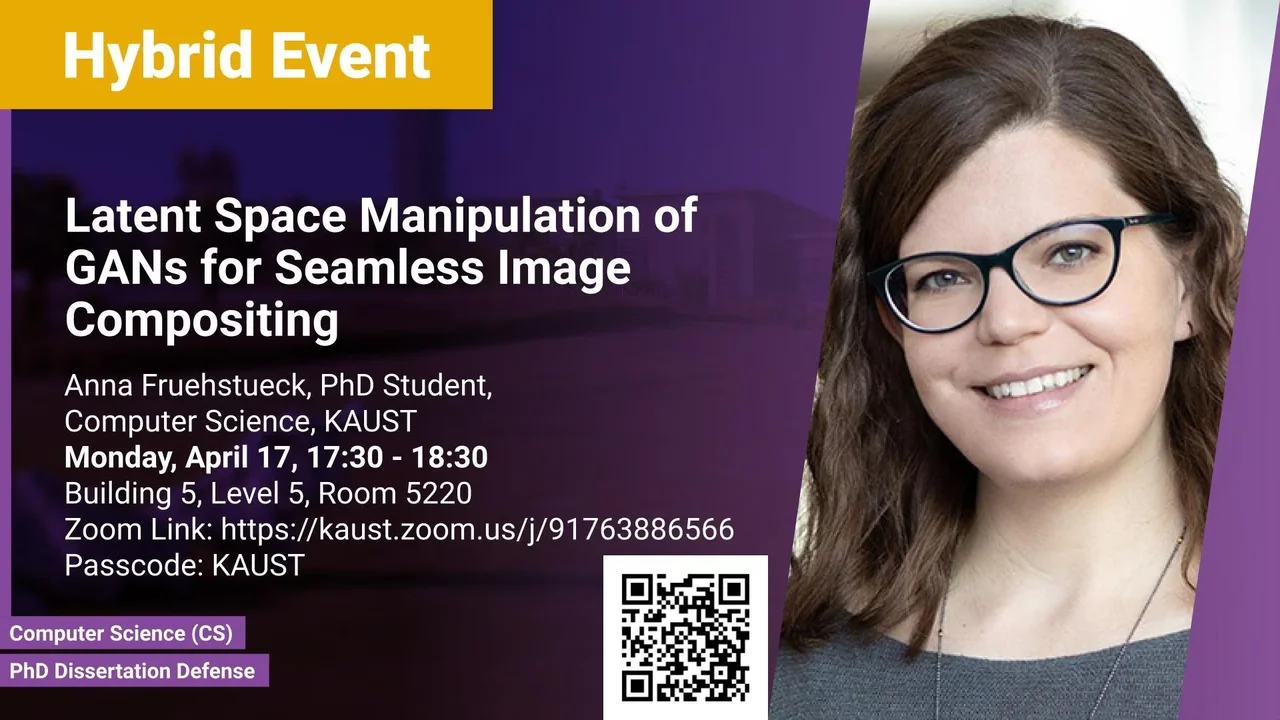

Latent Space Manipulation of GANs for Seamless Image Compositing


Overview

Abstract

Generative Adversarial Networks (GANs) are a very successful method for high-quality image synthesis: by learning the visual properties of a dataset of exemplars, they can generate realistic images. However, controlling the generator output still poses many challenges. In this thesis, we propose several methods that combine latent space manipulations with image compositing operations to obtain larger GAN outputs, outputs of higher visual quality, or both:

(1) GANs are inherently suitable for small-scale texture synthesis because the generator can learn the image properties of a limited domain, such as those of a specific texture type, at a desired level of detail. A rich variety of suitable texture tiles can then be synthesized from the trained generator. Because GANs are convolutional, we can achieve large-scale texture synthesis by tiling intermediate latent blocks, which allows us to generate (almost) arbitrarily large texture images in which the tiles are seamlessly merged.
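As a rough illustration of why tiling latent blocks can work, the Python/NumPy sketch below uses a hypothetical toy generator made only of "valid" 3x3 convolutions followed by tanh (no upsampling, no padding, no style inputs). The helper names `conv2d_valid`, `toy_generator`, and `tile_latent_blocks` are our own, not from the thesis. Because each output pixel depends only on a small latent neighborhood, the generator treats pixels near block seams exactly like interior pixels. This is an idealized sketch under those assumptions, not the thesis's implementation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_valid(x, k):
    """'Valid' 2D cross-correlation of a single-channel map x with kernel k."""
    windows = sliding_window_view(x, k.shape)  # shape (H-kh+1, W-kw+1, kh, kw)
    return np.einsum("ijkl,kl->ij", windows, k)


def toy_generator(latent, kernels):
    """Hypothetical fully convolutional generator: stacked valid convs + tanh."""
    h = latent
    for k in kernels:
        h = np.tanh(conv2d_valid(h, k))
    return h


def tile_latent_blocks(blocks):
    """Assemble a grid (list of rows) of equally sized latent blocks into one map."""
    return np.block(blocks)


rng = np.random.default_rng(0)
kernels = [0.3 * rng.normal(size=(3, 3)) for _ in range(3)]
blocks = [[rng.normal(size=(16, 16)) for _ in range(4)] for _ in range(4)]

big_latent = tile_latent_blocks(blocks)        # 64 x 64 latent map
texture = toy_generator(big_latent, kernels)   # 58 x 58 (each valid 3x3 conv trims 2 px)

# A latent window straddling block seams yields the matching crop of the big texture.
window = big_latent[10:30, 10:30]
print(np.allclose(toy_generator(window, kernels), texture[10:24, 10:24]))  # True
```

Real generators use padding, upsampling, and per-layer style inputs, so this exact equality is only an idealization of the convolutional property the text relies on.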

(2) We observe that generators trained on heterogeneous data perform worse than specialized GANs. We demonstrate that multiple independently trained generators can be optimized so that a specialized network fills in high-quality details for specific image regions, or insets, of the output of a lower-quality canvas generator. In this way, multiple generators collaborate to improve the visual output quality, and careful optimization achieves seamless transitions between the outputs of the different generators.
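To make "seamless transitions through optimization" concrete, here is a heavily simplified Python/NumPy sketch. It replaces both GANs with hypothetical random linear maps and uses an objective of our own choosing: the squared mismatch between inset and canvas on a 2-pixel border ring of the inset, plus anchor terms that keep each latent near its initial sample. It is not the loss used in the thesis; it only shows the mechanics of jointly updating the latents of two generators so that their outputs agree along the seam. All names (`border_mismatch`, `optimize_jointly`, `composite`, `lam`) are illustrative assumptions.

```python
import numpy as np

# Toy stand-ins (hypothetical): linear "generators" mapping an 8-D latent to flattened images.
rng = np.random.default_rng(1)
D, CANVAS, INSET, TOP = 8, 16, 8, 4
A = rng.normal(size=(CANVAS * CANVAS, D)) / np.sqrt(D)  # canvas generator: w -> 16x16 image
B = rng.normal(size=(INSET * INSET, D)) / np.sqrt(D)    # inset generator:  v -> 8x8 image

# Flat canvas indices covered by the inset, in the inset's own row-major order.
rows, cols = np.meshgrid(np.arange(TOP, TOP + INSET), np.arange(TOP, TOP + INSET), indexing="ij")
region = (rows * CANVAS + cols).ravel()

# 2-pixel border ring of the inset: where both outputs must agree for a seamless paste.
ring = np.ones((INSET, INSET), dtype=bool)
ring[2:-2, 2:-2] = False
ring = ring.ravel()
A_r, B_r = A[region][ring], B[ring]


def border_mismatch(w, v):
    """Squared difference between inset and canvas pixels on the border ring."""
    r = B_r @ v - A_r @ w
    return float(r @ r)


def optimize_jointly(w0, v0, lam=0.1, lr=0.01, steps=2000):
    """Gradient descent on ||B_r v - A_r w||^2 + lam * (||w - w0||^2 + ||v - v0||^2)."""
    w, v = w0.copy(), v0.copy()
    for _ in range(steps):
        r = B_r @ v - A_r @ w
        grad_w = -2 * A_r.T @ r + 2 * lam * (w - w0)
        grad_v = 2 * B_r.T @ r + 2 * lam * (v - v0)
        w, v = w - lr * grad_w, v - lr * grad_v
    return w, v


def composite(w, v):
    """Paste the inset output into the canvas output."""
    image = A @ w
    image[region] = B @ v
    return image.reshape(CANVAS, CANVAS)


w0, v0 = rng.normal(size=D), rng.normal(size=D)
w, v = optimize_jointly(w0, v0)
print(border_mismatch(w0, v0), "->", border_mismatch(w, v))
result = composite(w, v)
```

The anchor weight `lam` trades seam agreement against staying close to the originally sampled canvas and inset; with `lam = 0`, this linear toy would simply drive both latents toward zero, i.e. blank images.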

(3) GANs can also be used to semantically edit facial images and videos, and novel 3D GANs even allow camera changes, enabling unseen views of the target. However, the edited GAN output must then be merged with the surrounding image or video in a spatially and temporally consistent way, as our method demonstrates.

Brief Biography

Anna Frühstück is a Ph.D. candidate in Computer Science at the Visual Computing Center under the supervision of Prof. Peter Wonka. Prior to joining KAUST, she obtained a Bachelor’s degree in Computer Science and an M.Sc. degree in Visual Computing from Vienna University of Technology in Austria. Anna is interested in computer graphics topics related to synthesis and modeling. In her research, she develops techniques for large-scale image synthesis using generative machine learning methods such as Generative Adversarial Networks, focusing on generating high-quality content, controlling the output, and compositing it plausibly.

During her Ph.D. studies, Anna was awarded a fellowship from Facebook Research and completed several industry internships, collaborating with Meta Reality Labs, Adobe, and Weta FX.