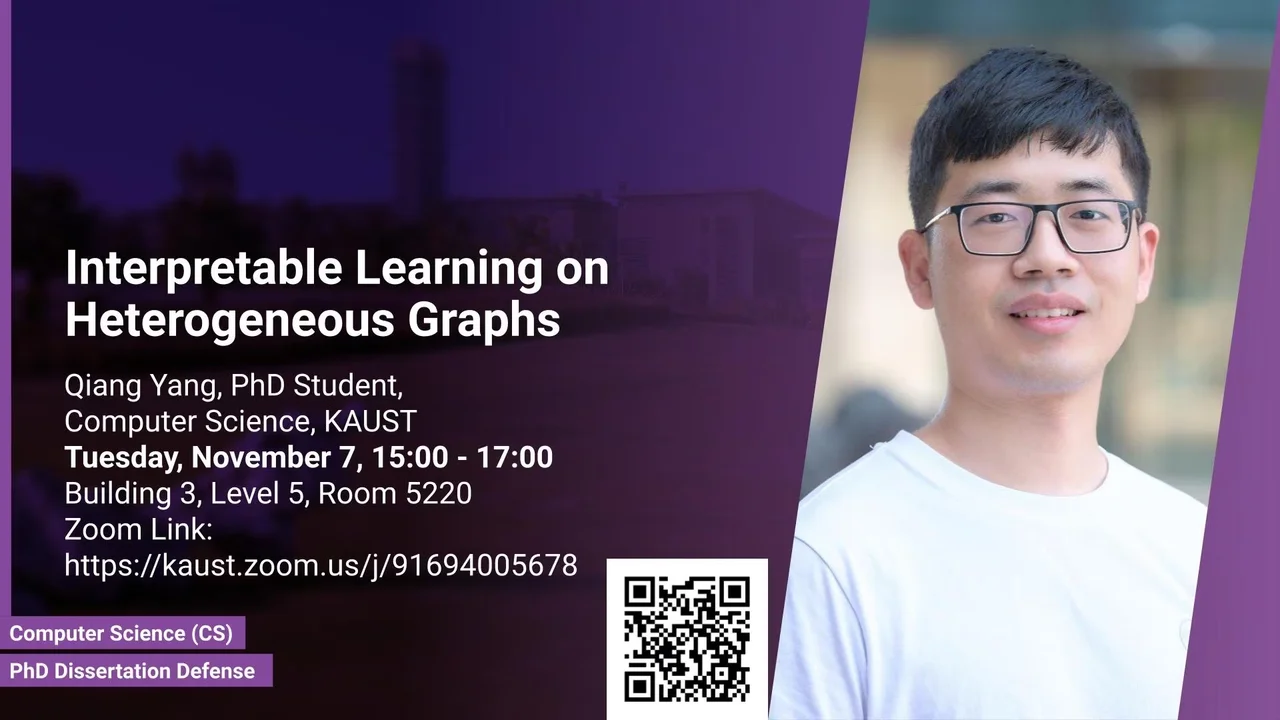

Interpretable Learning on Heterogeneous Graphs

B3 L5 R5220


Overview

Abstract

Graph representation learning has gained substantial attention in data mining in recent years. This interest is driven by the prevalence of graph-structured data, such as social networks and academic graphs, which contain multiple types of nodes and edges and therefore form heterogeneous graphs. However, many existing methods focus on homogeneous graphs, in which all nodes and edges are of a single type. These methods typically rely on network embedding and Graph Neural Networks (GNNs), which learn node representations by passing messages between neighbors, but they do not explain the predictions they generate. We face at least two key challenges: first, learning high-quality representations of complex graphs, and second, interpreting and understanding the predictions that graph models make. Here, we focus on two tasks on heterogeneous graphs: relation prediction and graph classification. To address these challenges, we introduce a framework that learns robust representations of heterogeneous graphs while offering insightful interpretations of its predictions. Notably, the framework can be extended to other graph tasks by modifying the corresponding modules.

We tackle these problems from three angles. First, because meta-paths in heterogeneous graphs capture diverse and complementary information about node interactions, we present a novel interpretable relation learning model. Second, because heterogeneous information and interpretation in temporal graphs remain underexplored, we devise a deep neural network-based approach that parameterizes the generation of interpretations for predicted results, representing each interpretation as a weighted sub-graph. Third, current interpretable methods mostly answer “why” questions by identifying sub-graphs that explain a prediction. However, they often fail to check whether removing an identified sub-graph from the input graph would actually change the prediction, which leaves the predictions without counterfactual robustness against input noise. To remedy this, we propose generating robust and reasonable counterfactual interpretations for predictions.

Experiments on diverse heterogeneous academic graphs show that the proposed methods outperform state-of-the-art approaches on these graph tasks. Furthermore, the models provide readily comprehensible explanations for their predictions.
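To make the counterfactual criterion concrete, here is a minimal Python sketch; it is an illustration under stated assumptions, not the speaker's method. It stores a toy heterogeneous academic graph as typed edges, uses a hypothetical rule-based predictor (`toy_predict`) as a stand-in for a trained graph model, and checks (`is_counterfactual`) whether deleting an explanation sub-graph flips the prediction. The node names, the `writes` relation, and the rule "predict a relation if an Author-Paper-Author meta-path instance exists" are all assumptions made for illustration.

```python
from typing import Callable, Set, Tuple

# A typed edge in a heterogeneous graph: (source node, relation type, target node).
Edge = Tuple[str, str, str]


def count_apa_instances(edges: Set[Edge], a: str, b: str) -> int:
    """Count Author-Paper-Author meta-path instances linking authors a and b."""
    papers_a = {dst for src, rel, dst in edges if src == a and rel == "writes"}
    papers_b = {dst for src, rel, dst in edges if src == b and rel == "writes"}
    return len(papers_a & papers_b)


def toy_predict(edges: Set[Edge], a: str, b: str) -> bool:
    """Hypothetical stand-in for a trained model: predict a relation between
    two authors if at least one A-P-A meta-path instance connects them."""
    return count_apa_instances(edges, a, b) >= 1


def is_counterfactual(
    predict: Callable[[Set[Edge], str, str], bool],
    edges: Set[Edge],
    explanation: Set[Edge],
    a: str,
    b: str,
) -> bool:
    """Return True if deleting the explanation sub-graph flips the prediction."""
    original = predict(edges, a, b)
    perturbed = predict(edges - explanation, a, b)
    return original != perturbed


# Toy academic graph (hypothetical data): alice and bob co-wrote p1 and p2.
graph = {
    ("alice", "writes", "p1"),
    ("bob", "writes", "p1"),
    ("alice", "writes", "p2"),
    ("bob", "writes", "p2"),
    ("carol", "writes", "p2"),
}

# Explanation 1: the shared paper p1 (answers "why", but is it counterfactual?).
explanation_p1 = {("alice", "writes", "p1"), ("bob", "writes", "p1")}
# Explanation 2: all of alice's authorship edges.
explanation_alice = {("alice", "writes", "p1"), ("alice", "writes", "p2")}

print(is_counterfactual(toy_predict, graph, explanation_p1, "alice", "bob"))     # False
print(is_counterfactual(toy_predict, graph, explanation_alice, "alice", "bob"))  # True
```

The first explanation is a plausible answer to a “why” question, yet removing it leaves the prediction unchanged because `p2` supplies a second meta-path instance; only the second explanation passes the counterfactual test. With a learned model, `toy_predict` would be replaced by the model's inference call, and the explanation sets would come from the interpretation method.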

Brief Biography

Qiang Yang is a computer science PhD student at King Abdullah University of Science and Technology (KAUST), Saudi Arabia. His current research interests include interpretable graph learning, multimodal learning, and knowledge graph representation learning. He focuses on heterogeneous graphs, which contain multiple types of nodes and edges (for example, academic graphs and knowledge graphs), and on their applications to link prediction, node classification, and graph classification.