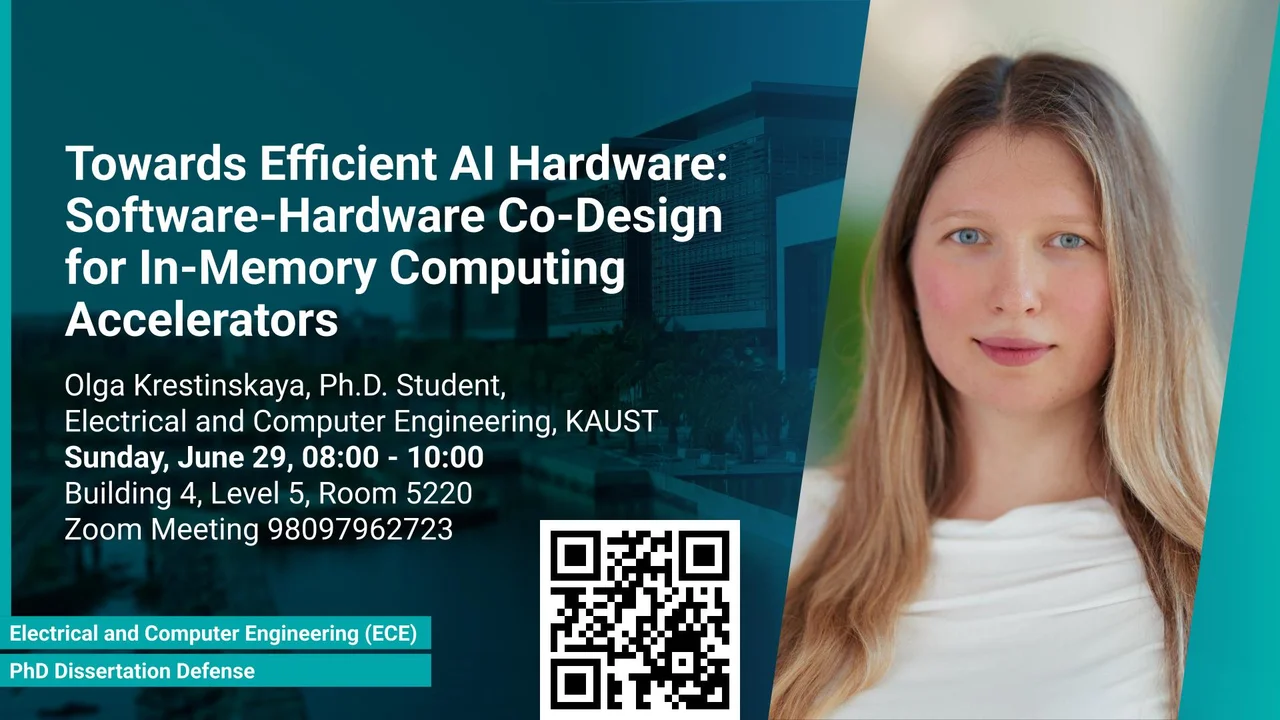

Towards Efficient AI Hardware: Software-Hardware Co-Design for In-Memory Computing Accelerators

Overview

The rapid growth of artificial intelligence (AI) applications has driven demand for efficient, scalable, and energy-aware hardware capable of running deep neural networks (DNNs) on platforms ranging from cloud servers to edge devices. In-memory computing (IMC) has emerged as a promising paradigm for overcoming the inefficiencies of traditional von Neumann architectures: by performing matrix-vector multiplications directly within memory arrays, it significantly reduces data movement and energy consumption. However, deploying AI models effectively on IMC accelerators requires holistic co-design methodologies that jointly optimize software and hardware parameters across the stack to accommodate the diverse needs of modern AI workloads.
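The core IMC idea, computing a matrix-vector product inside the memory array, can be illustrated with a minimal, idealized Python sketch. It assumes weights are stored as cell conductances, inputs are applied as row voltages, and each column current is the sum of the cell currents (Ohm's law per cell, Kirchhoff's current law per column). The function names, the linear weight-to-conductance mapping, the `g_min`/`g_max` range, and the differential column pair used for signed weights are illustrative assumptions, not the design studied in this dissertation; real arrays also involve ADC quantization, device variation, and wire resistance, none of which are modeled here.

```python
# Idealized analog IMC crossbar computing y = W^T x.
# Assumptions (illustrative only): linear weight-to-conductance mapping,
# differential column pairs for signed weights, ideal devices and wires, no ADC.

def weights_to_conductances(weights, g_min=1e-6, g_max=1e-4):
    """Map each signed weight to a (G_plus, G_minus) conductance pair in siemens."""
    w_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = (g_max - g_min) / w_max
    g_plus = [[g_min + scale * max(w, 0.0) for w in row] for row in weights]
    g_minus = [[g_min + scale * max(-w, 0.0) for w in row] for row in weights]
    return g_plus, g_minus, scale


def crossbar_mvm(voltages, conductances):
    """Column currents: I_j = sum_i V_i * G_ij (Ohm's law, then Kirchhoff summation)."""
    rows, cols = len(conductances), len(conductances[0])
    return [sum(voltages[i] * conductances[i][j] for i in range(rows)) for j in range(cols)]


def imc_matvec(weights, inputs):
    """Recover W^T x from the differential column currents."""
    g_plus, g_minus, scale = weights_to_conductances(weights)
    i_plus = crossbar_mvm(inputs, g_plus)
    i_minus = crossbar_mvm(inputs, g_minus)
    # The g_min offset cancels in the difference; dividing by scale undoes the mapping.
    return [(ip - im) / scale for ip, im in zip(i_plus, i_minus)]


W = [[1.0, -2.0], [0.5, 0.0], [-1.0, 3.0]]  # 3 input rows x 2 output columns
x = [0.2, 0.4, 0.1]                          # input voltages (V)
print(imc_matvec(W, x))  # approximately [0.3, -0.1], up to floating-point rounding
```

The differential pair is one common way to represent signed weights with non-negative conductances; the subtraction also cancels the constant `g_min` offset that every cell contributes.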

This dissertation addresses two key challenges in software–hardware co-design for IMC-based neural network accelerators: (1) developing generalized IMC hardware that efficiently supports multiple neural network models, and (2) building automated frameworks that jointly optimize model parameters, quantization schemes, and IMC hardware configurations for workload-specific deployments. To tackle the first challenge, a joint hardware–workload co-optimization framework is proposed that generates generalized IMC hardware supporting multiple neural network models with minimal performance degradation. The framework uses a four-phase genetic algorithm enhanced with Hamming-distance-based sampling, which improves convergence speed and design diversity. To address the second challenge, this dissertation introduces Compute-In-Memory Neural Architecture Search (CIMNAS), a unified framework that explores a large multi-dimensional search space to generate highly efficient IMC designs tailored to specific workloads. CIMNAS jointly optimizes three domains: neural network architecture, quantization scheme, and IMC hardware configuration, with the hardware parameter space spanning the device, circuit, architecture, and system levels.
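Hamming-distance-based sampling can be sketched in a few lines, assuming each candidate design is encoded as a genome of discrete choices and that the goal is to pick a population whose members differ in as many genes as possible. The sketch below uses greedy farthest-point selection on Hamming distance. The search space, gene names, and selection rule are hypothetical; the four phases of the dissertation's genetic algorithm and its exact sampling criterion are not described here, so this should be read only as an illustration of the general technique.

```python
import random

# Hypothetical joint search space (illustrative assumption): each gene selects
# one option, mixing quantization and IMC hardware parameters.
SEARCH_SPACE = {
    "crossbar_size": [64, 128, 256],
    "adc_bits": [4, 5, 6, 8],
    "weight_bits": [2, 4, 8],
    "bits_per_cell": [1, 2],
    "activation_bits": [4, 6, 8],
}
GENES = list(SEARCH_SPACE)


def random_genome(rng):
    """Encode a design as a tuple of option indices, one per gene."""
    return tuple(rng.randrange(len(SEARCH_SPACE[g])) for g in GENES)


def hamming(a, b):
    """Number of gene positions at which two genomes differ."""
    return sum(x != y for x, y in zip(a, b))


def hamming_diverse_sample(pool, k, rng):
    """Greedy farthest-point selection: repeatedly add the candidate whose
    minimum Hamming distance to the already-selected genomes is largest."""
    pool = list(dict.fromkeys(pool))  # drop duplicate genomes, keep order
    if k <= 0 or not pool:
        return []
    selected = [pool.pop(rng.randrange(len(pool)))]
    while pool and len(selected) < k:
        best = max(pool, key=lambda c: min(hamming(c, s) for s in selected))
        pool.remove(best)
        selected.append(best)
    return selected


def decode(genome):
    """Map option indices back to concrete parameter values."""
    return {g: SEARCH_SPACE[g][i] for g, i in zip(GENES, genome)}


rng = random.Random(0)
candidates = [random_genome(rng) for _ in range(200)]
population = hamming_diverse_sample(candidates, k=8, rng=rng)
for genome in population:
    print(decode(genome))
```

Selecting by maximum minimum distance spreads the population across the search space, which is one plausible way a diversity-aware sampler can reduce premature convergence in a genetic algorithm.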

The proposed approaches deliver significant improvements in hardware efficiency, scalability, and inference accuracy, validated through extensive simulations and comparisons with state-of-the-art frameworks. Together, these contributions advance automated software–hardware co-design for IMC, enabling next-generation design methods for energy-efficient and generalizable AI accelerators.

Presenter

Olga Krestinskaya

Brief Biography

Olga Krestinskaya (Graduate Student Member, IEEE) is a Ph.D. candidate at King Abdullah University of Science and Technology (KAUST), Saudi Arabia. Her research focuses on software–hardware co-design for in-memory computing (IMC) architectures and AI hardware, with particular interest in hardware-aware neural architecture search (NAS) algorithms, memristor-based systems, neuromorphic computing, and mixed-signal IMC implementations. She has authored several high-impact works on analog memristive neural networks, mixed-signal circuit-level implementations of IMC architectures, quantized neural networks, and brain-inspired algorithms, all aimed at developing energy-efficient and scalable IMC hardware for AI applications.

Olga is the recipient of the 2019 IEEE CASS Predoctoral Award, the 2025 Web of Talents STEM Award (1st place), and multiple KAUST Dean’s Awards. Her work was recognized with the Best Poster Award at the 2nd Nature Conference on Neuromorphic Computing (2024), and she was shortlisted for the prestigious Rising Stars Women in Engineering Workshop (Asian Deans’ Forum 2024).