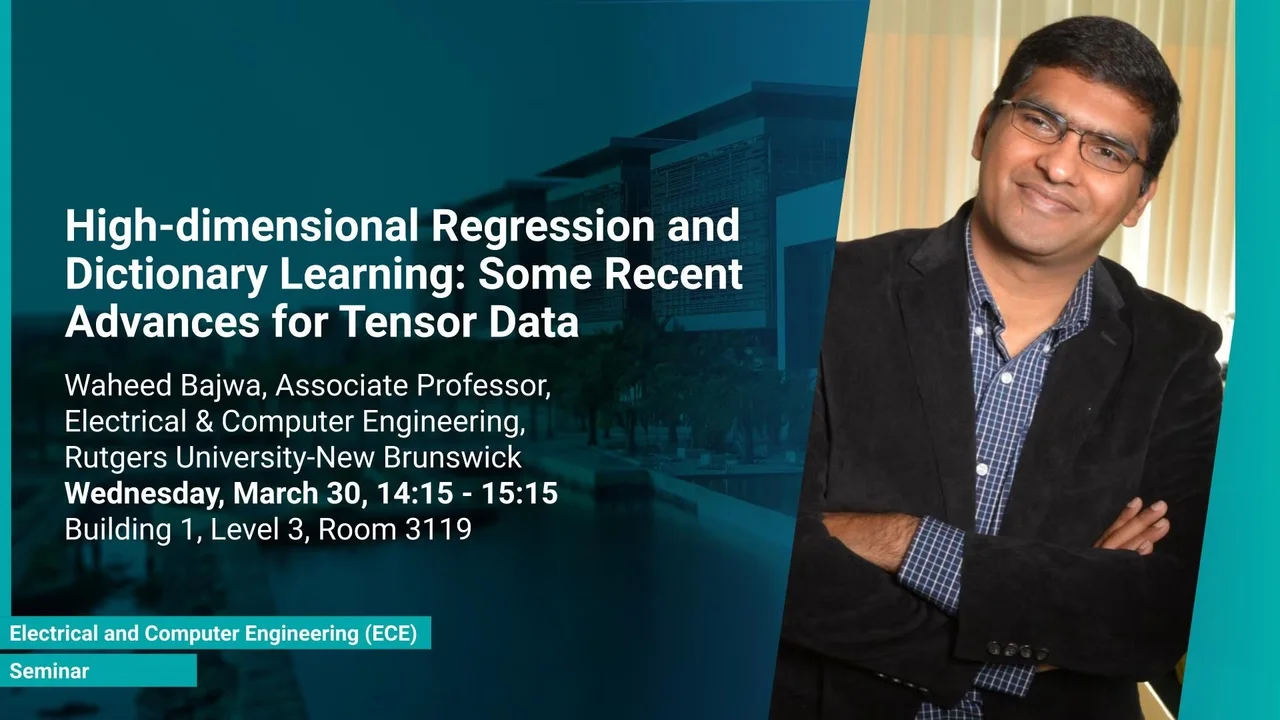

High-dimensional Regression and Dictionary Learning: Some Recent Advances for Tensor Data

- Prof. Waheed Bajwa, Electrical and Computer Engineering, Rutgers University-New Brunswick

B1 L3 R3119


Overview

Abstract

Data in many modern signal processing, machine learning, and statistics problems tend to have tensor (i.e., multidimensional or multiway array) structure. While traditional approaches to processing such data involve 'flattening' each data sample into a vector, it has long been recognized that explicitly exploiting the tensor structure of data can improve performance. Recent years, in particular, have witnessed a flurry of research activity centered on the development of computational algorithms for processing tensor data more effectively. Despite the effectiveness of such algorithms, for several problems in the high-dimensional setting there is still no explicit theoretical characterization of the benefits of exploiting tensor structure. In this talk, we focus on two such high-dimensional problems for tensor data: high-dimensional regression and high-dimensional dictionary learning. The basic assumption for both problems is that the dimensionality of the data far exceeds the number of available data samples, so much so that existing approaches to regression (e.g., sparse regression) and dictionary learning (e.g., K-SVD) may fail to produce meaningful results. Under this high-dimensional setting, we discuss algorithms capable of exploiting certain low-dimensional structures underlying tensor data for effective regression and dictionary learning. In addition, we present sample complexity results for both problems that highlight, relative to existing works in the literature, the usefulness of the latent tensor structures exploited by the presented algorithms.
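The contrast between 'flattening' and exploiting tensor structure can be made concrete with a small sketch. The abstract does not name the specific low-dimensional structures used in the talk, so this example assumes one commonly studied choice, a Kronecker-structured (separable) dictionary, purely for illustration; it is not the presented algorithm. It checks that synthesizing a tensor sample mode by mode with small factor matrices gives the same result as multiplying the flattened coefficients by one large Kronecker-product dictionary, while the number of free parameters drops from a product of dimensions to a sum. All helper names (`vec`, `mode_n_product`, `ks_synthesize`, `num_params_*`) and the sizes used are hypothetical.

```python
# Illustrative sketch only: assumes a Kronecker-structured dictionary model,
# which is one possible tensor structure and not necessarily the talk's.
import numpy as np


def vec(x):
    """Column-major (Fortran-order) vectorization, i.e. 'flattening' a tensor sample."""
    return x.reshape(-1, order="F")


def mode_n_product(x, a, mode):
    """Mode-n product x ×_n a: multiply every mode-`mode` fiber of x by matrix a."""
    x_front = np.moveaxis(x, mode, 0)               # bring the target mode to axis 0
    rest = x_front.shape[1:]
    y = a @ x_front.reshape(x_front.shape[0], -1)   # act on all fibers at once
    return np.moveaxis(y.reshape((a.shape[0],) + rest), 0, mode)


def ks_synthesize(coeffs, factors):
    """Synthesize a tensor sample from a (hypothetical) Kronecker-structured dictionary.

    Equals (A_N ⊗ ... ⊗ A_1) @ vec(coeffs) under column-major vec,
    but never forms the large Kronecker-product matrix.
    """
    y = coeffs
    for mode, a in enumerate(factors):
        y = mode_n_product(y, a, mode)
    return y


def num_params_unstructured(dims, atoms):
    """Entries in an unstructured dictionary acting on flattened samples."""
    return int(np.prod(dims)) * int(np.prod(atoms))


def num_params_kronecker(dims, atoms):
    """Entries in the factor matrices A_1, ..., A_N of a Kronecker-structured dictionary."""
    return sum(m * p for m, p in zip(dims, atoms))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    dims, atoms = (8, 9, 10), (4, 5, 6)   # hypothetical sizes, for illustration only
    factors = [rng.standard_normal((m, p)) for m, p in zip(dims, atoms)]
    coeffs = rng.standard_normal(atoms)

    # Flattened view: build the full Kronecker-product dictionary explicitly.
    big_dict = np.kron(np.kron(factors[2], factors[1]), factors[0])
    y_flat = big_dict @ vec(coeffs)

    # Tensor view: apply the small factor matrices mode by mode.
    y_tensor = ks_synthesize(coeffs, factors)

    print(np.allclose(y_flat, vec(y_tensor)))       # True
    print(num_params_unstructured(dims, atoms))     # 86400
    print(num_params_kronecker(dims, atoms))        # 137
```

For 8×9×10 samples and a 4×5×6 coefficient tensor, the unstructured dictionary has 86,400 entries, while the three factor matrices together have only 137. Informally, this kind of reduction in free parameters is what structured tensor models aim to turn into lower sample requirements.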

Brief Biography

Waheed U. Bajwa is currently a visiting fellow in the Center for Statistics and Machine Learning at Princeton University, an associate professor in the Department of Electrical and Computer Engineering at Rutgers University, and an associate member of the graduate faculty of the Department of Statistics at Rutgers University. His research interests include statistical signal processing, high-dimensional statistics, machine learning, inverse problems, and networked systems.

Dr. Bajwa has received several research and teaching awards, including the Army Research Office Young Investigator Award (2014), the National Science Foundation CAREER Award (2015), Rutgers University’s Presidential Merit Award (2016), Rutgers University’s Presidential Fellowship for Teaching Excellence (2017), Rutgers University’s Engineering Governing Council ECE Professor of the Year Award (2016, 2017, 2019), and Rutgers University’s Warren I. Susman Award for Excellence in Teaching (2021). He is a co-investigator on work that received the Cancer Institute of New Jersey’s Gallo Award for Scientific Excellence in 2017, a co-author on papers that received Best Student Paper Awards at the IEEE IVMSP 2016 and IEEE CAMSAP 2017 workshops, and a member of the Class of 2015 National Academy of Engineering Frontiers of Engineering Education Symposium.