Lama Niyazi has successfully defended her Ph.D. thesis in Electrical and Computer Engineering at KAUST. Her research, guided by Professors Tareq Al-Naffouri and Mohamed-Slim Alouini, used tools from asymptotic Random Matrix Theory (RMT) to advance classifiers for high-dimensional data. Her work fills critical gaps in the understanding of linear classifiers when datasets are small and high-dimensional.

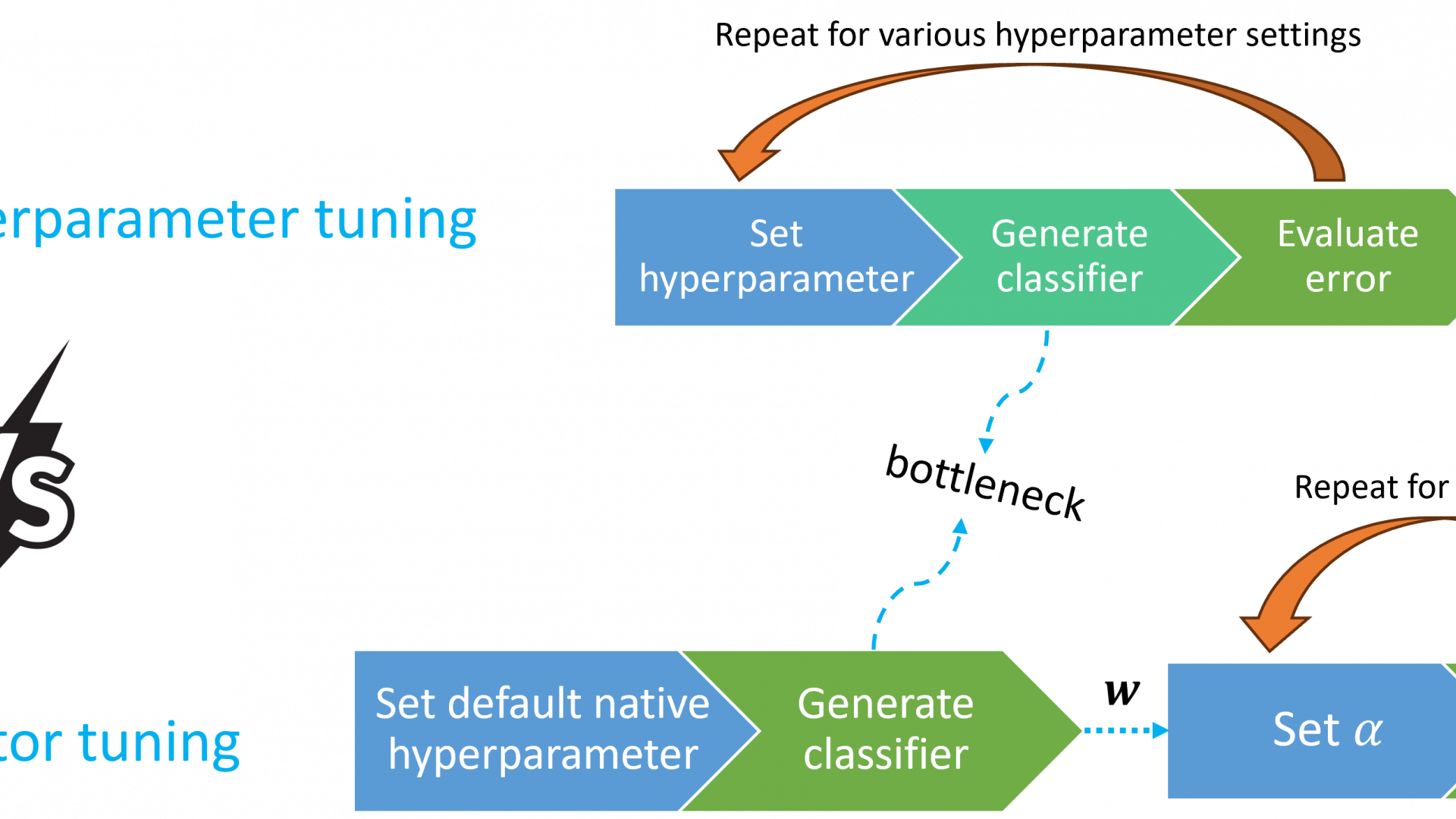

In her dissertation, Lama showed how asymptotic RMT provides insight into classifier behavior and points to ways of improving it. Her study of discriminant-averaging and vote-averaging RP-LDA ensemble classifiers shed light on optimal ensembles for Gaussian data and introduced new techniques for tuning binary linear classifiers.

We gratefully acknowledge the contributions and guidance of the examiners and committee members: Prof. Basem Shihada, Prof. Babak Hassibi, Prof. K. V. S. Hari, Dr. Abla Kammoun, and Dr. Hayssam Dahrouj. Lama's research deepens our understanding of classifier design and presents practical ways to improve classifier performance. Her contributions broaden the applicability of linear classifiers across domains, including transfer learning with deep neural networks.

Congratulations, Lama, on this exceptional achievement! Your commitment to pushing boundaries in high-dimensional data classification is commendable, and we eagerly anticipate your continued success in future endeavors.