By learning to deviate from known information in the same way that humans do, an "imagination" algorithm for artificial intelligence (AI) is able to identify previously unseen objects from written descriptions.

The algorithm, developed by KAUST researcher Mohamed Elhoseiny in collaboration with Mohamed Elfeki from the University of Central Florida, paves the way for artificial imagination and the automated classification of new plant and animal species.

“Imagination is one of the key properties of human intelligence that enables us not only to generate creative products like art and music, but also to understand the visual world,” explains Elhoseiny.

Artificial intelligence relies on training data to develop its ability to recognize objects and respond to its environment. Humans also develop this ability through accumulated experience, but humans can do something that AI cannot. They can intuitively deduce a likely classification for a previously unencountered object by imagining what something must look like from a written description or by inference from something similar. In AI, this ability to imagine the unseen is becoming increasingly important as the technology is rolled out into complex real-world applications where misclassification or misrecognition of new objects can prove disastrous.

Also important is the sheer volume of data needed to reliably train AI for the real world. It is unfeasible to train AI with images of even a fraction of the known species of plants and animals in the world in all their permutations, let alone the countless undiscovered or unclassified species.

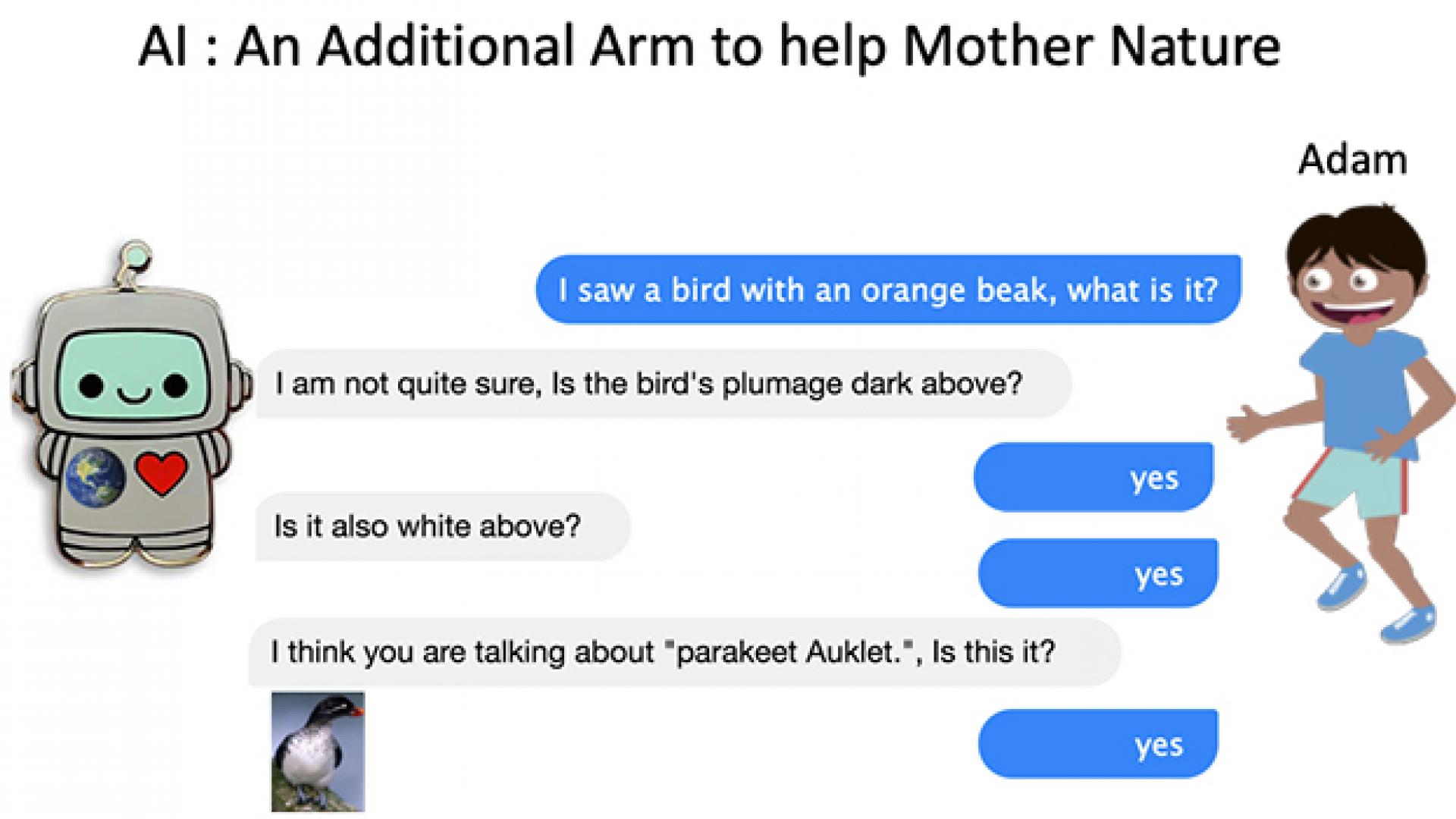

Elhoseiny and Elfeki set out to develop a zero-shot learning (ZSL) algorithm, one that recognizes previously unseen categories from class-level descriptions alone, with no training examples of those categories.
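To make the idea of recognition from class-level descriptions concrete, here is a minimal sketch of generic zero-shot classification by attribute matching. It is not the creativity-inspired algorithm developed by Elhoseiny and Elfeki. The function `zero_shot_classify`, the three toy attributes, and the example classes are all hypothetical, and the sketch assumes some upstream model has already converted an image into attribute scores.

```python
import numpy as np

def normalize(v):
    """Scale vectors to unit length so dot products become cosine similarities."""
    return v / np.linalg.norm(v, axis=-1, keepdims=True)

def zero_shot_classify(image_attributes, class_descriptions):
    """Pick the class whose description best matches the image.

    image_attributes: attribute scores predicted for one image
        (assumed to come from a model trained only on *seen* classes).
    class_descriptions: dict mapping class name -> attribute vector
        derived from a written description; no images of these classes are needed.
    """
    names = list(class_descriptions)
    desc = normalize(np.array([class_descriptions[n] for n in names], dtype=float))
    feat = normalize(np.asarray(image_attributes, dtype=float))
    scores = desc @ feat  # cosine similarity between the image and each description
    best = names[int(np.argmax(scores))]
    return best, dict(zip(names, scores))

# Hypothetical attributes: [has_stripes, has_wings, lives_in_water]
unseen_classes = {
    "zebra":   [1, 0, 0],
    "penguin": [0, 1, 1],
    "dolphin": [0, 0, 1],
}

label, scores = zero_shot_classify([0.9, 0.1, 0.0], unseen_classes)
print(label)
```

In this toy setup, none of the three classes needs a single training image: a new class is added simply by writing down its description vector.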

“We modeled the visual learning process for ‘unseen’ categories by relating ZSL to human creativity, observing that ZSL is about recognizing the unseen while creativity is about creating a ‘likable unseen’,” says Elhoseiny.
