Abstract

GPUs have emerged as a popular choice for deep learning. To deal with ever-growing datasets, it is also common to use multiple GPUs in parallel for distributed deep learning. Although cost-effectiveness in these clusters depends on efficient sharing, modern GPU hardware, deep learning frameworks, and cluster managers are not designed for efficient, fine-grained sharing of GPU resources. In this talk, I will present our recent work on efficient GPU resource management, both within a single GPU and across many GPUs in a cluster, for hyperparameter tuning, training, and inference. The common thread across all of this work is exploiting the interplay between the short-term predictability and long-term unpredictability of deep learning workloads. I'll discuss how to leverage the former to address the latter and improve utilization, performance, and fairness in shared GPU clusters. All of our work is open source and available at https://github.com/symbioticlab.

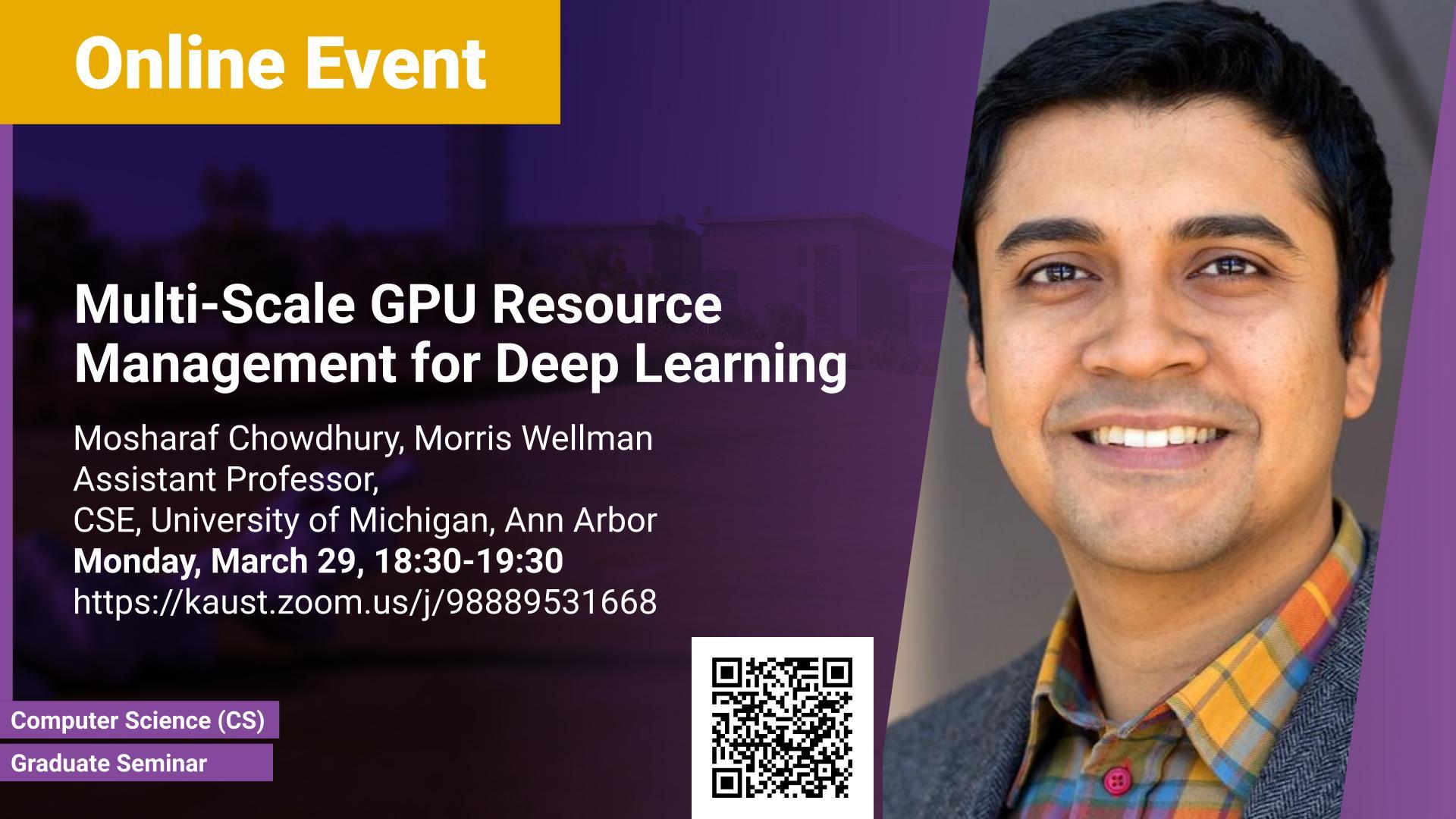

Brief Biography

Mosharaf Chowdhury is a Morris Wellman Assistant Professor of CSE at the University of Michigan, Ann Arbor. His current research focuses on application-infrastructure symbiosis across different layers of software and hardware stacks. Mosharaf invented coflows and is a co-creator of Apache Spark. Software artifacts from his research have been deployed in Microsoft, Facebook, Google, and Amazon datacenters. He has received an NSF CAREER award, the 2015 ACM SIGCOMM doctoral dissertation award, best paper awards at NSDI and ATC, multiple faculty fellowships and awards from Google, VMware, and Alibaba, as well as a Facebook fellowship and a Cheriton Scholarship. He received his PhD from the AMPLab at UC Berkeley in 2015.