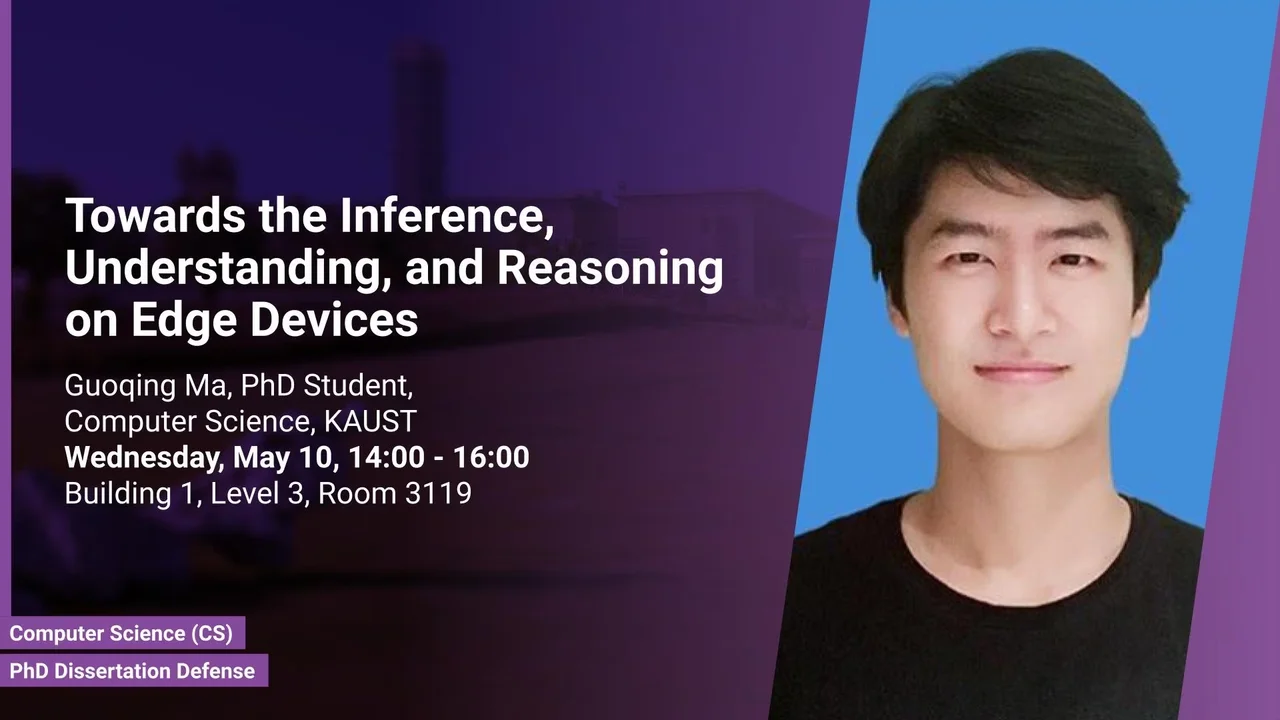

Towards the Inference, Understanding, and Reasoning on Edge Devices

Overview

Abstract

Edge devices are compact hardware that process and analyze data close to its source, removing the need to transmit the data to centralized systems for analysis. They are typically embedded in other equipment, such as sensors or smart appliances, and can collect and process data in real time. Compared with traditional centralized processing, they offer reduced latency, improved responsiveness, and more efficient data transfer. In Internet of Things (IoT) systems, edge devices play a pivotal role and drive innovation across industries. Our study examines the potential of edge devices in three applications: indoor localization, traffic prediction, and multi-modal representation learning. For each application, we assessed the technical aspects of the system's implementation and evaluated its performance and suitability through experiments, comparing it with existing methods and benchmarks. Our research clarifies the strengths and limitations of edge devices in different contexts and highlights their potential across industries.

To address the computational limitations of single devices in complex deep learning tasks, we introduced a novel system named Distributed Artificial Intelligence Over-the-Air (AirDAI). The system provides intelligent services in wireless settings while strictly protecting the privacy of multiple clients. In AirDAI, each client trains locally on its own raw data and sends only the trained outputs, such as model parameters, to a central server for aggregation. To support the development of AirDAI in wireless communication networks, we proposed a generic system design and an associated simulator that can be customized to wireless channels and system-level configurations. We presented the system design together with an analysis of the impact of wireless environments, to ease further implementation and updates. A series of experiments was conducted to verify the effectiveness and efficiency of the proposed design.
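The local-training and server-aggregation loop described above can be illustrated with a minimal sketch. It assumes a sample-weighted parameter average (in the style of federated averaging) and a toy one-dimensional linear model. The names `local_update`, `aggregate`, and `run_round` are hypothetical, and the wireless channel effects that AirDAI's simulator models are not represented here.

```python
from typing import Dict, List, Tuple

Params = Dict[str, List[float]]
Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave the client


def local_update(params: Params, data: Dataset, lr: float = 0.1) -> Params:
    """One gradient step of the toy model y = w*x + b on a client's own data."""
    w, b = params["w"][0], params["b"][0]
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return {"w": [w - lr * grad_w], "b": [b - lr * grad_b]}


def aggregate(updates: List[Tuple[Params, int]]) -> Params:
    """Server side: average client parameters, weighted by local sample count."""
    total = sum(n for _, n in updates)
    first = updates[0][0]
    return {
        key: [
            sum(p[key][i] * n for p, n in updates) / total
            for i in range(len(first[key]))
        ]
        for key in first
    }


def run_round(global_params: Params, clients: List[Dataset]) -> Params:
    """One communication round: only parameters are sent, never raw data."""
    updates = [(local_update(global_params, d), len(d)) for d in clients]
    return aggregate(updates)


# Two clients holding disjoint samples of the line y = 2x + 1.
clients = [[(0.0, 1.0), (1.0, 3.0)], [(2.0, 5.0), (3.0, 7.0)]]
params: Params = {"w": [0.0], "b": [0.0]}
for _ in range(500):
    params = run_round(params, clients)
```

In this sketch the server only ever sees the parameter dictionaries returned by `local_update`, which reflects the privacy property described above.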

However, heterogeneous edge networks often face limits on communication and computation resources, which can restrict model capacity and user participation. To address this challenge, we propose FedForest, which optimizes the global network by distilling knowledge from aggregated sub-networks sampled at each optimization step. Sub-network sampling is made differentiable by using soft masks to relax the constraints, and model size serves as an additional constraint when extracting a new sub-network for the subsequent local optimization process. Our experimental results demonstrate that FedForest significantly reduces server-to-client communication and local device computation costs compared with conventional algorithms. FedForest also reduces upload communication costs while maintaining performance comparable to the benchmark Top-K sparsification method. We believe that FedForest can accelerate the deployment of large-scale deep learning models.
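For context, the Top-K sparsification baseline mentioned above can be sketched as follows. This illustrates the baseline only, not FedForest itself: a client uploads just the k largest-magnitude entries of its update, and the server treats the rest as zero. The function names `top_k_sparsify` and `densify` are hypothetical.

```python
from typing import Dict, List


def top_k_sparsify(update: List[float], k: int) -> Dict[int, float]:
    """Keep the k entries with the largest magnitude, as {index: value}."""
    if k <= 0:
        return {}
    ranked = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    return {i: update[i] for i in sorted(ranked[:k])}


def densify(sparse: Dict[int, float], size: int) -> List[float]:
    """Server side: rebuild a dense update, filling dropped entries with zero."""
    dense = [0.0] * size
    for index, value in sparse.items():
        dense[index] = value
    return dense


update = [0.1, -2.0, 0.5, 3.0]
sparse = top_k_sparsify(update, k=2)        # only 2 of 4 values are uploaded
restored = densify(sparse, len(update))
```

The upload cost scales with k rather than with the full model size, which is the saving a sparsification baseline trades against accuracy.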

Brief Biography

Guoqing Ma received the B.Sc. degree from the South University of Science and Technology of China, Shenzhen, China, in 2017. He is currently pursuing the Ph.D. degree in computer science with the King Abdullah University of Science and Technology (KAUST), Thuwal, Saudi Arabia. His main research interests include big data analysis and large-scale system design.