Hardware Centric Quantized Convolutional Neural Network and Algorithms

This thesis addresses the challenges of deploying quantized convolutional neural networks (QCNNs) on resource-constrained edge devices by proposing two novel hardware-software co-design frameworks: one for deriving lightweight, hardware-friendly models validated on FPGA, and another for hardware-aware mixed-precision quantization on compute-in-memory accelerators.

Overview

In recent years, machine learning algorithms have demonstrated remarkable versatility in tasks ranging from visual perception and sequential data analysis to natural language processing. Convolutional neural networks (CNNs), in particular, have become the backbone of state-of-the-art systems for vision-related applications. However, the rise of the Internet of Things (IoT) and edge computing has intensified the demand for deploying these powerful models on resource-constrained devices. Although significant progress has been made in refining CNN architectures, deploying them at the edge remains challenging due to limited computational and memory resources.

Quantization, which represents activations and model parameters in lower-precision formats, offers a promising way to reduce memory requirements and simplify arithmetic operations. To address these challenges, this thesis begins with a comprehensive review of quantization methods and of the wide range of hardware accelerators designed specifically for quantized CNNs (QCNNs). This review highlights the key challenges and open problems in QCNN accelerator design and also provides general guidelines for effective software-hardware co-design.
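To make the idea of lower-precision representation concrete, the following is a minimal sketch of symmetric, per-tensor uniform quantization. It is an assumed illustrative scheme, not necessarily one used in the thesis. Each 32-bit float is replaced by an 8-bit integer code plus one shared scale, and a dot product is computed with integer multiply-accumulates that are rescaled only once at the end. The function names `quantize_symmetric`, `dequantize`, and `int_dot` are hypothetical.

```python
# Minimal sketch: symmetric, per-tensor uniform quantization (an assumed scheme,
# not necessarily the one used in the thesis). Function names are hypothetical.

def quantize_symmetric(values, bits=8):
    """Map floats to signed integers in [-qmax, qmax] with one shared scale."""
    if bits < 2:
        raise ValueError("symmetric quantization needs at least 2 bits")
    qmax = 2 ** (bits - 1) - 1  # e.g. 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level and clip to the representable range.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate real values from integer codes."""
    return [x * scale for x in q]


def int_dot(qa, sa, qb, sb):
    """Dot product using integer multiply-accumulates, rescaled once at the end."""
    acc = sum(x * y for x, y in zip(qa, qb))  # integer-only accumulation
    return acc * sa * sb


weights = [0.52, -1.27, 0.003, 0.89]
inputs = [1.0, 0.5, -2.0, 0.25]
qw, sw = quantize_symmetric(weights, bits=8)
qx, sx = quantize_symmetric(inputs, bits=8)

exact = sum(w * x for w, x in zip(weights, inputs))
approx = int_dot(qw, sw, qx, sx)
print(qw, round(exact, 4), round(approx, 4))
```

With 8-bit codes, weight storage shrinks by 4x relative to 32-bit floats, and the inner loop needs only integer arithmetic. This is the kind of simplification that QCNN accelerators exploit.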

Building on this foundation, the thesis proposes two novel frameworks to facilitate the edge deployment of QCNNs. The first framework derives lightweight models from the original architectures using only hardware-friendly operations and is validated through an FPGA implementation. The second framework investigates the mapping of QCNNs onto compute-in-memory (CIM) accelerators and introduces a hardware-aware, fully gradient-based, column-wise mixed-precision quantization (MPQ) approach. Notably, this method uses hardware metrics obtained directly from a simulator or compiler, thereby narrowing the gap between modeled and actual hardware performance.
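The sketch below shows what "column-wise mixed precision" means as a data layout. It makes a few assumptions. First, each column of a weight matrix is assumed to map to one CIM crossbar column, with its own bit width and scale. Second, the bit widths are fixed by hand, whereas the thesis selects them with a gradient-based, hardware-aware search that is not reproduced here. Third, `weight_storage_bits` is a hypothetical toy cost, standing in for the simulator- or compiler-derived hardware metrics the thesis actually uses. All names are illustrative.

```python
# Hypothetical sketch: column-wise mixed-precision weight quantization.
# Assumes each weight-matrix column maps to one CIM crossbar column. Bit widths
# are chosen by hand here; the thesis searches them with a gradient-based,
# hardware-aware method that is not reproduced.

def quantize_columnwise(matrix, col_bits):
    """Quantize each column of `matrix` (a list of rows) with its own bit width and scale."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    if len(col_bits) != n_cols:
        raise ValueError("need one bit width per column")
    q = [[0] * n_cols for _ in range(n_rows)]
    scales = []
    for j, bits in enumerate(col_bits):
        if bits < 2:
            raise ValueError("symmetric quantization needs at least 2 bits")
        column = [matrix[i][j] for i in range(n_rows)]
        qmax = 2 ** (bits - 1) - 1
        max_abs = max(abs(v) for v in column)
        scale = max_abs / qmax if max_abs > 0 else 1.0
        scales.append(scale)
        for i, v in enumerate(column):
            # Round and clip into this column's own integer range.
            q[i][j] = max(-qmax, min(qmax, round(v / scale)))
    return q, scales


def weight_storage_bits(n_rows, col_bits):
    """Toy cost proxy: total bits to store the quantized weights (scales excluded)."""
    return n_rows * sum(col_bits)


W = [
    [0.40, -0.10, 0.90],
    [-0.75, 0.20, -0.30],
    [0.05, 0.35, 0.60],
]
col_bits = [8, 4, 2]  # hypothetical per-column precisions
qW, scales = quantize_columnwise(W, col_bits)
print(qW)
print(weight_storage_bits(len(W), col_bits))  # → 42 (vs. 72 bits at uniform 8-bit)
```

In a hardware-aware MPQ loop, a cost of this kind would be replaced by measured or simulated metrics such as latency or energy, and traded off against task accuracy when choosing each column's precision.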

In summary, this thesis provides a detailed examination of the challenges in QCNN deployment. It presents two hardware-software co-design frameworks that advance the efficient implementation of QCNNs on edge devices.

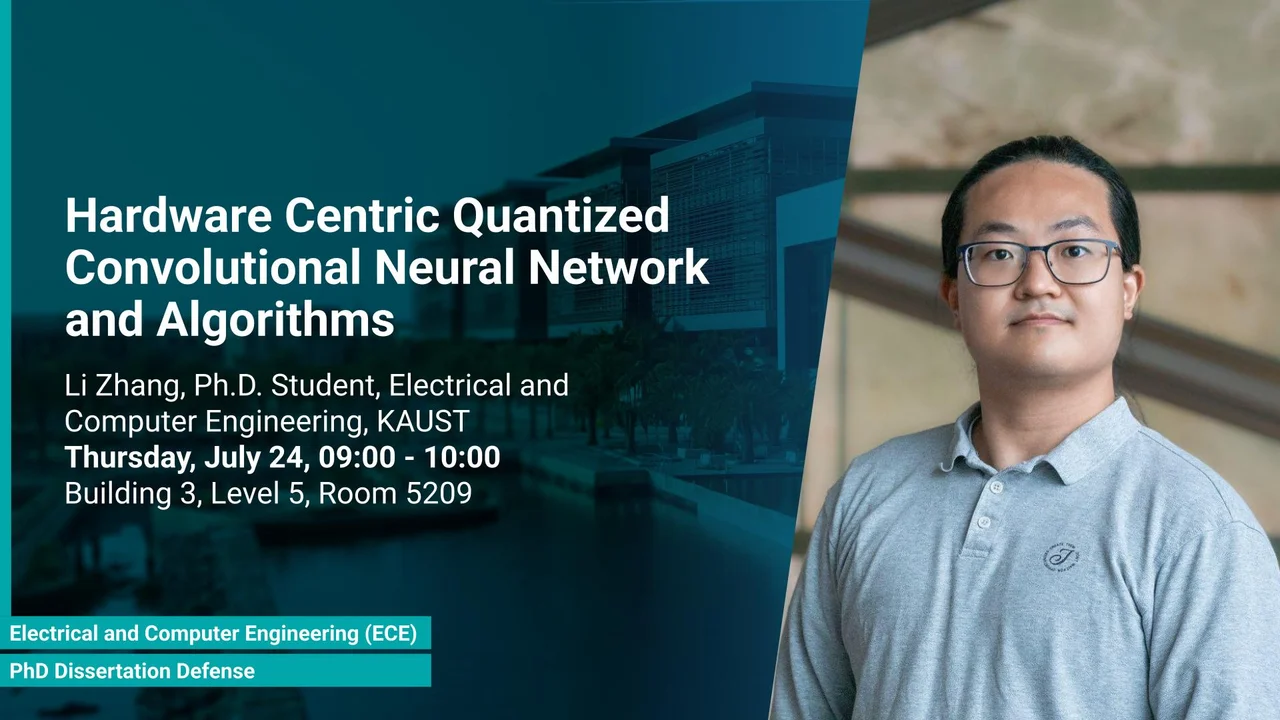

Presenters

Brief Biography

Li Zhang is a Ph.D. candidate in the Sensors Lab of the Electrical Engineering Department, CEMSE Division, King Abdullah University of Science and Technology (KAUST). He earned his B.S. in Microelectronic Science and Engineering from the University of Electronic Science and Technology of China in 2018, and his M.S. in Electrical Engineering from KAUST in 2019. His research interests include quantized neural networks, neural-network accelerator architectures, and software-hardware co-design. He has technical expertise in optimization algorithms, machine-learning methods, FPGA-based digital circuit design, and experimental measurement techniques.