Abstract

Sobolev spaces are central objects in PDE theory. Consequently, to understand how deep neural networks can be used to solve PDEs numerically, a necessary first step is to determine how efficiently they can approximate Sobolev functions. In this talk we consider this problem for deep ReLU neural networks, which are the most important class of neural networks in practical applications. Existing sharp results are only available for Sobolev spaces $W^s(L_\infty)$, in which the derivatives are measured in $L_\infty$. However, the solutions to many important PDEs, especially solutions which form shocks, can only be bounded in Sobolev spaces $W^s(L_p)$, where the derivatives are measured in a weaker $L_p$ norm with $p < \infty$. We will present sharp $L_q$-approximation rates for deep ReLU networks on $W^s(L_p)$ for all $q$ and $p$. Then we will discuss some of the technical details of the proof and conclude by giving a few open research directions.

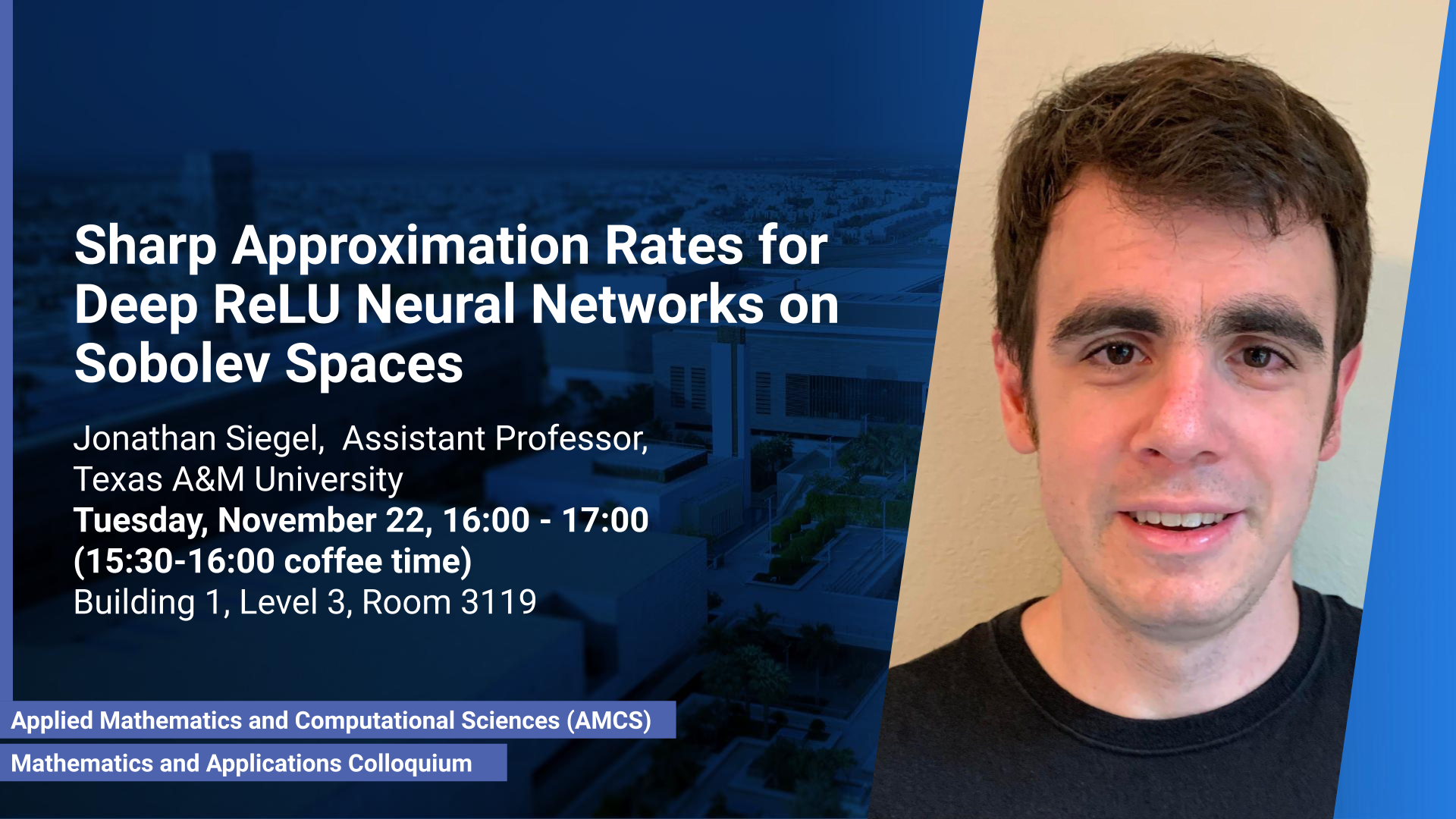

Brief Biography

Jonathan Siegel is currently an assistant professor in the mathematics department at Texas A&M University. He attended graduate school at UCLA and received a Ph.D. under the guidance of Prof. Russel Caflisch in 2018. In his dissertation, he studied optimization on manifolds and its applications to electronic structure calculations, for which he won the Pacific Journal of Mathematics Dissertation Prize. From 2018 until 2022, he did a postdoc at Penn State working with Prof. Jinchao Xu on the optimization theory and approximation properties of neural networks. He joined the faculty at Texas A&M in 2022.