Abstract

Deep neural networks (DNNs) are revolutionizing computing, and making them efficient requires an integrated approach across the computing stack. In this talk, I will explore the frontier of DNN optimization, spanning algorithms, software, and hardware. We'll start with hardware-aware neural architecture search, demonstrating how tailoring DNN architectures to specific hardware can drastically enhance performance. I'll then delve into DNN-hardware codesign, showing how optimizing the two together leads to cutting-edge hardware accelerator architectures. Finally, we'll turn to system-level strategies, including automated DNN partitioning and holistic server design, which set the stage for efficiency in the era of large-scale DNNs. This talk aims to shed light on the pivotal role of codesign in unlocking the full potential of next-generation DNNs, paving the way for continued breakthroughs in deep learning.

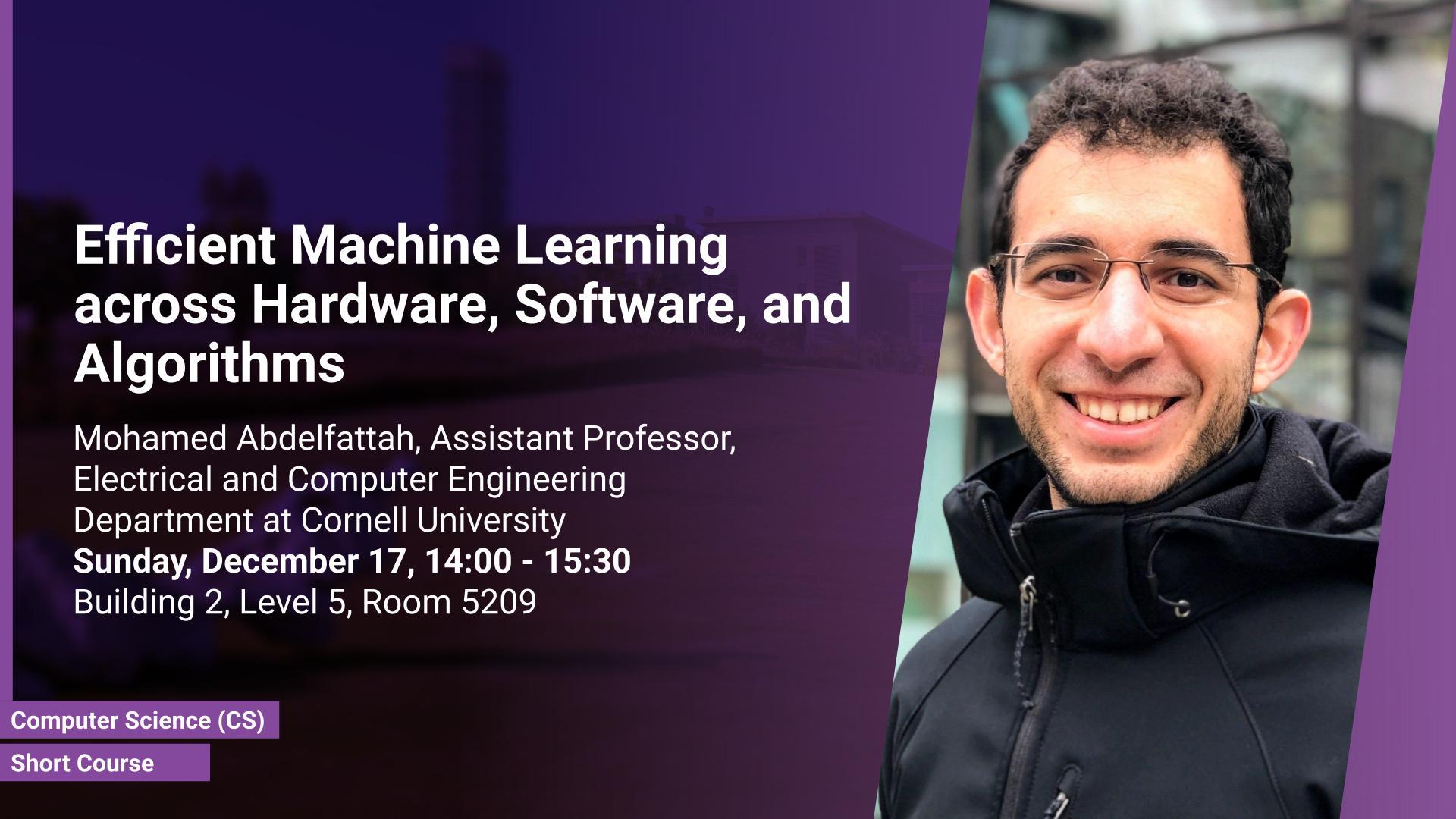

Brief Biography

Mohamed Abdelfattah is an Assistant Professor at Cornell Tech and in the Electrical and Computer Engineering Department at Cornell University. His research group is designing the next generation of machine-learning-centric computer systems for both data centers and mobile devices. He received his BSc from the German University in Cairo, his MSc from the University of Stuttgart, and his PhD from the University of Toronto. After his PhD, Mohamed spent five years at Intel and Samsung Research. He is the recipient of multiple best paper awards, the Vanier Canada Graduate Scholarship, and the NSF CAREER award.