Event Start

Event End

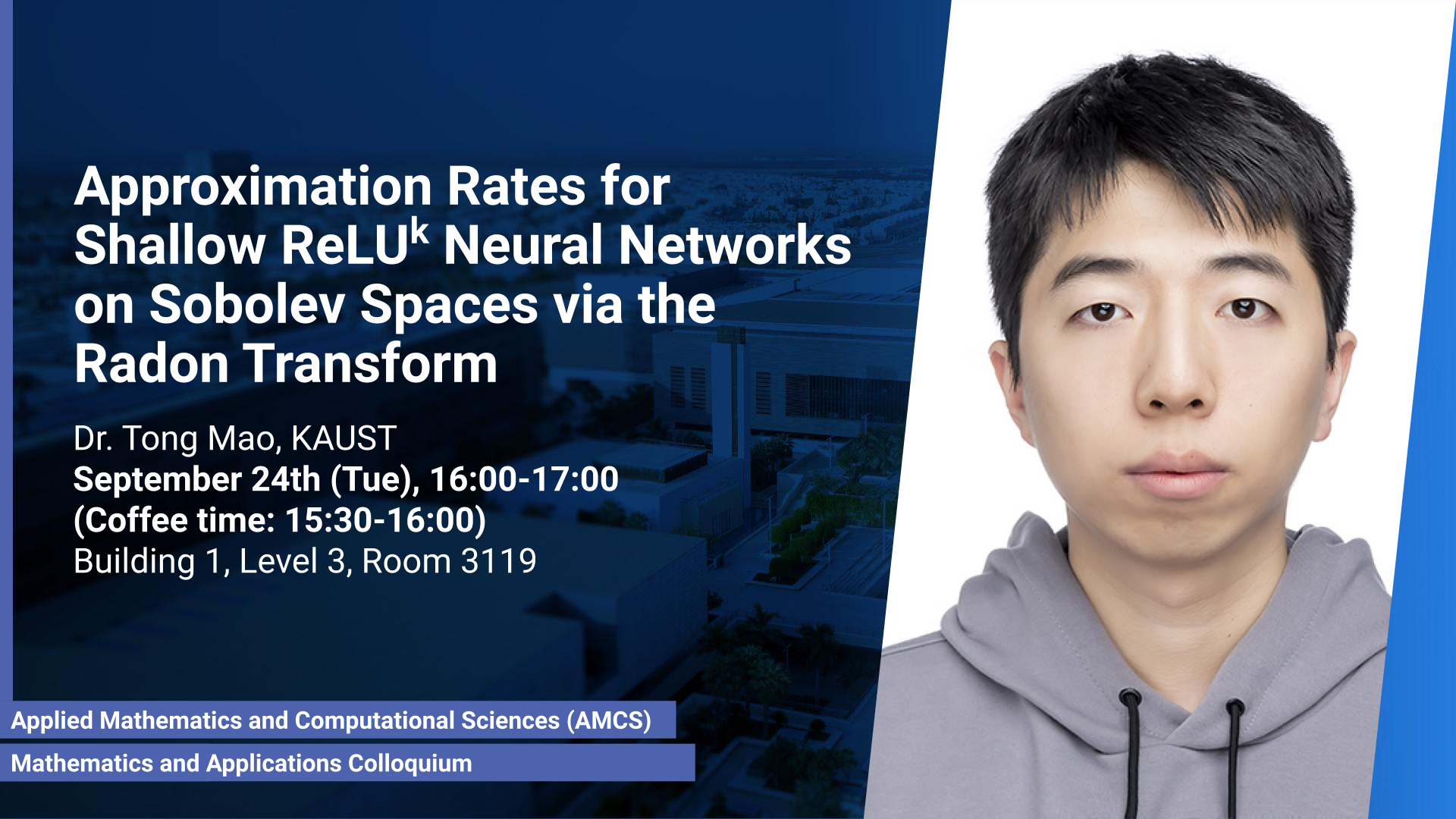

Location

Abstract

We consider the problem of how efficiently shallow neural networks with the ReLU$^k$ activation function and $N$ neurons can approximate functions from Sobolev spaces $W^s(L_p(\Omega))$ on a bounded domain $\Omega \subset \mathbb{R}^d$, with error measured in the $L_q(\Omega)$-norm. Utilizing the Radon transform, we prove the nearly optimal approximation rate $\mathcal{O}(N^{-s/d})$ in a variety of cases, including when $q\leq p$, $p\geq 2$, and $s \leq k + (d+1)/2$. The rates we derive are optimal up to logarithmic factors and significantly generalize existing results.
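For readers new to the setting, a shallow ReLU$^k$ network with $N$ neurons on $\mathbb{R}^d$ has the form $f_N(x) = \sum_{i=1}^{N} a_i\,\sigma_k(\omega_i \cdot x + b_i)$ with $\sigma_k(t) = \max(0,t)^k$. The Python sketch below only evaluates such a network. It is illustrative and is not the construction used in the talk. The helper names (`relu_k`, `shallow_relu_k`, `random_network`) are hypothetical. Sampling the directions $\omega_i$ on the unit sphere and the biases $b_i$ in $[-1,1]$ is an assumption made for the example.

```python
import math
import random


def relu_k(t: float, k: int) -> float:
    """ReLU^k activation sigma_k(t) = max(0, t)**k.

    For k = 0 this is the Heaviside step (1 if t > 0, else 0); handled
    explicitly because Python evaluates 0.0 ** 0 as 1.0.
    """
    if k == 0:
        return 1.0 if t > 0 else 0.0
    return max(0.0, t) ** k


def shallow_relu_k(x, weights, biases, coeffs, k):
    """Evaluate f_N(x) = sum_i a_i * relu_k(<w_i, x> + b_i) at a point x in R^d.

    weights: N inner-weight vectors w_i, each of length d
    biases:  N scalars b_i
    coeffs:  N outer coefficients a_i
    """
    total = 0.0
    for w, b, a in zip(weights, biases, coeffs):
        pre_activation = sum(wj * xj for wj, xj in zip(w, x)) + b
        total += a * relu_k(pre_activation, k)
    return total


def random_network(n_neurons, dim, seed=0):
    """Hypothetical helper: sample N neurons with unit-norm directions
    (normalized Gaussian vectors), biases uniform in [-1, 1], and
    Gaussian outer coefficients scaled by 1/N. An assumption for
    illustration only."""
    rng = random.Random(seed)
    weights, biases, coeffs = [], [], []
    for _ in range(n_neurons):
        g = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(c * c for c in g))
        weights.append([c / norm for c in g])
        biases.append(rng.uniform(-1.0, 1.0))
        coeffs.append(rng.gauss(0.0, 1.0) / n_neurons)
    return weights, biases, coeffs


if __name__ == "__main__":
    ws, bs, cs = random_network(n_neurons=50, dim=2, seed=0)
    print(shallow_relu_k([0.3, -0.2], ws, bs, cs, k=2))
```

The rate $\mathcal{O}(N^{-s/d})$ in the abstract concerns a suitable choice of the parameters $(a_i, \omega_i, b_i)$. Randomly sampled parameters, as in `random_network`, only show the shape of the model and are not expected to attain that rate.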

Brief Biography

Dr. Tong Mao has been a postdoctoral researcher at KAUST under the supervision of Professor Jinchao Xu since 2023. Before joining KAUST, he was a postdoctoral researcher at Claremont Graduate University, USA, and he completed his PhD in 2022 at the City University of Hong Kong, HKSAR. His research interests include the mathematical theory of machine learning, particularly the approximation theory of various neural networks.