© 2020 KAUST

A pre-emptive memory management system developed by KAUST researchers can speed up data-intensive simulations by 2.5 times by eliminating delays due to slow data delivery. The development elegantly and transparently addresses one of the most stubborn bottlenecks in modern supercomputing—delivering data from memory fast enough to keep up with computations.

“Reducing the movement of data while keeping it close to the computing hardware is one of the most daunting challenges facing computational scientists handling big data,” explains Hatem Ltaief from the research team. “This is exacerbated by the widening gap between computational speed and memory transmission capacity, and the need to store high-volume data on remote storage media.”

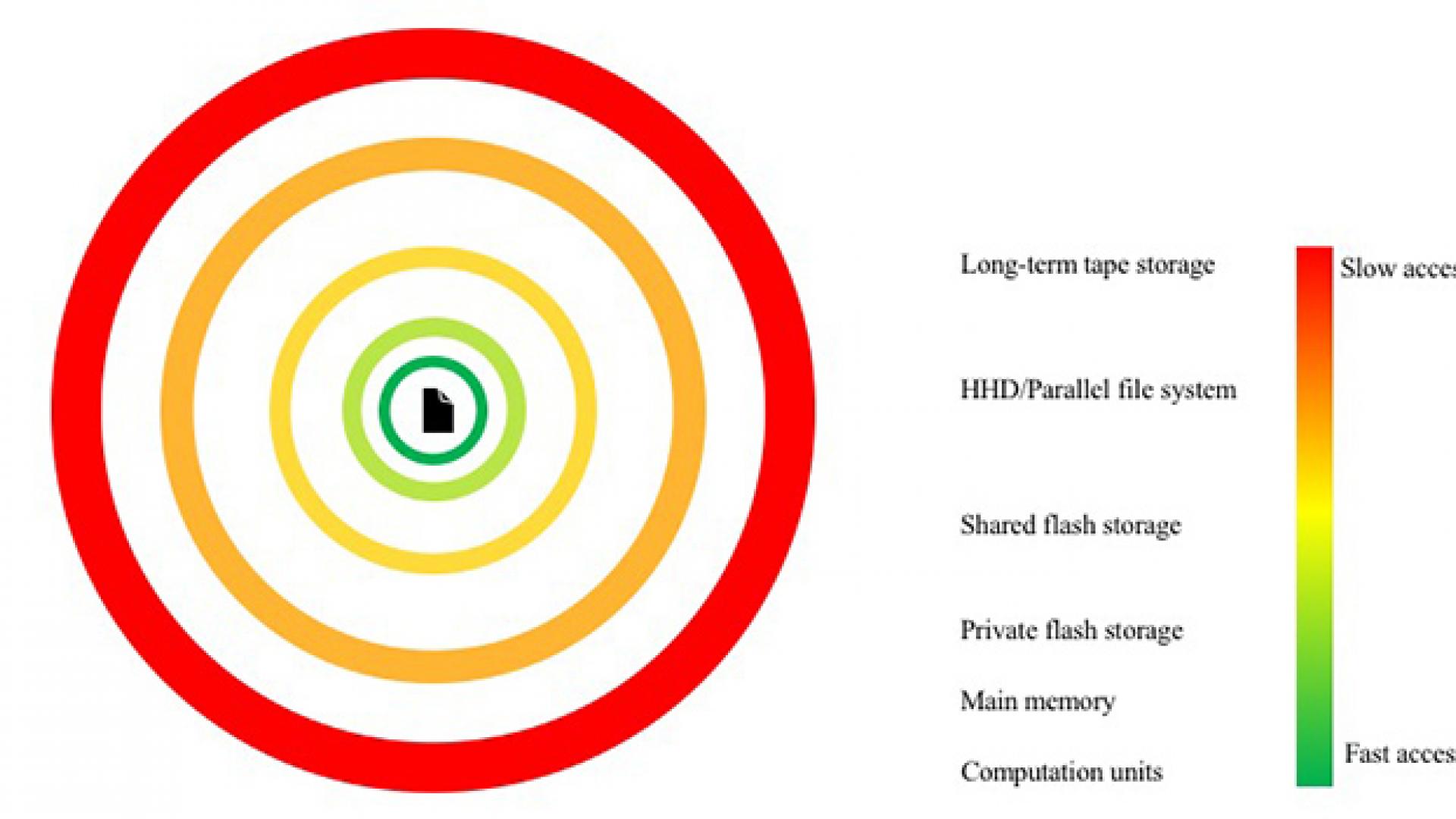

The key challenge in processing big data is the cost and scale of storing the data in memory. The faster the memory, the more expensive it is, and the faster the data need to be moved between computing elements. Because only relatively small capacities of the fastest memory are available on even the most powerful supercomputing platforms, system engineers add successively larger, slower, and more remote layers of memory to hold the tera- and petabytes of data typical of big data sets.

“It is in this hostile landscape that our system comes into play by reducing the overhead of moving data in and out of remote storage hardware,” says Ltaief.
