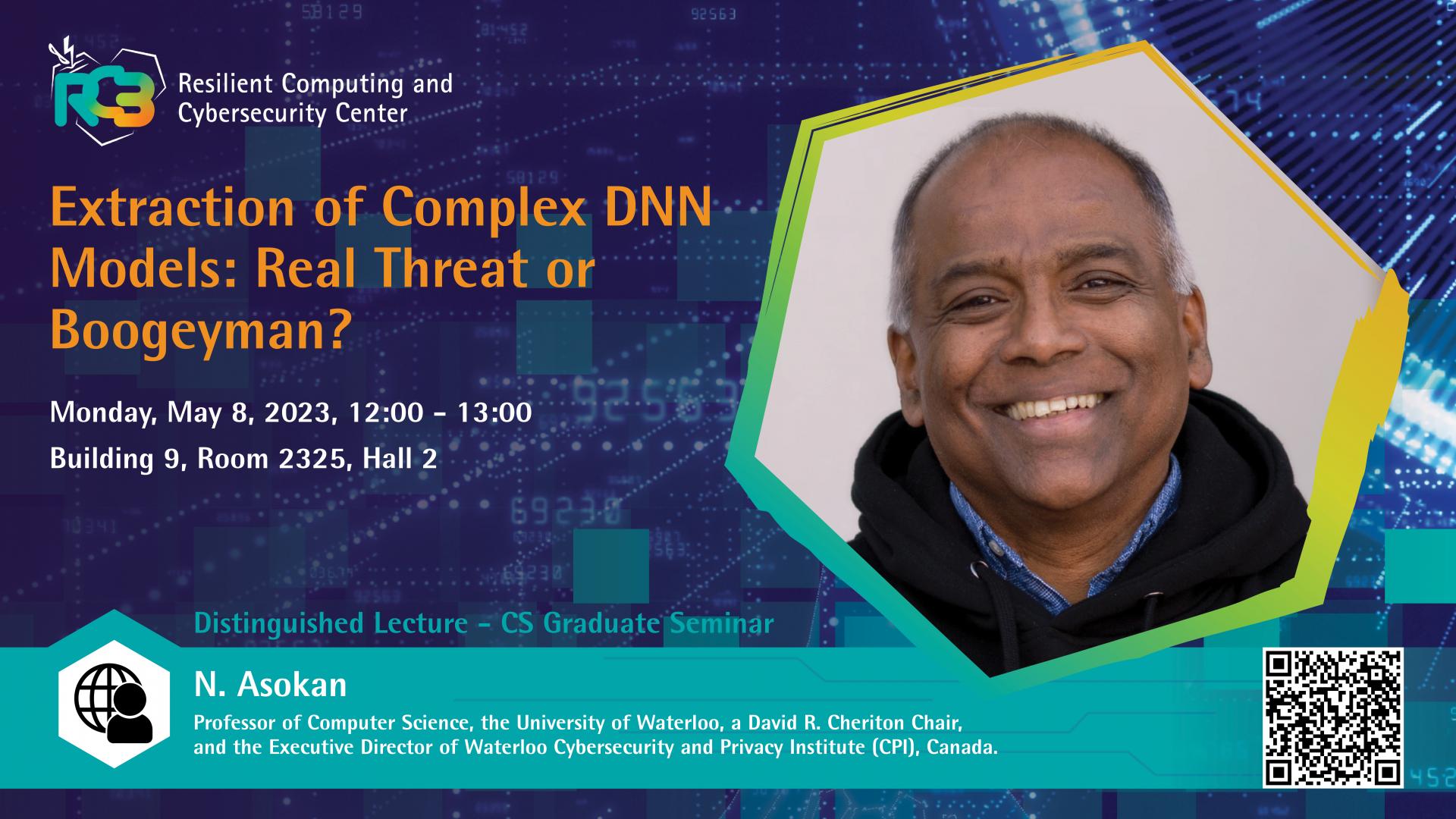

This Distinguished Lecture is part of the CS Graduate Seminars.

Abstract

The success of deep learning in many application domains has been nothing short of dramatic. This success has also brought security and privacy concerns with deep learning into the spotlight. One of them is the threat of "model extraction": when a machine learning model is made available to customers via an inference interface, a malicious customer can repeatedly query this interface and use the information gained to construct a surrogate model. In this talk, I will describe our work exploring whether model extraction constitutes a realistic threat. I will also discuss possible countermeasures, focussing on deterrence mechanisms that allow the ownership of ML models to be verified. Finally, I will touch on the conflicts that arise when protection mechanisms against multiple different threats need to be applied simultaneously to a given ML model, using ownership verification techniques as a case study.

Brief Biography

N. Asokan is a Professor of Computer Science at the University of Waterloo, where he holds a David R. Cheriton chair and serves as the Executive Director of the Waterloo Cybersecurity and Privacy Institute (CPI). He is also an Adjunct Professor of Computer Science at Aalto University, where he was the founding director of the Helsinki-Aalto Institute for Cybersecurity (HAIC). Asokan's primary research theme is systems security broadly, including the development and use of novel platform security features, the application of cryptographic techniques to design secure protocols for distributed systems, the application of machine learning techniques to security and privacy problems, and understanding and addressing the security and privacy of machine learning applications themselves. Asokan is a Fellow of both ACM and IEEE and was chosen for an Outstanding Innovation Award by SIGSAC, ACM’s special interest group in security and privacy.