Abstract

Cross-validation is widely used in statistics and machine learning to assess predictive performance and to select models. It divides the data into multiple sets, trains the model on some of them, tests it on the rest, and repeats this process several times. The objective is to evaluate the model’s predictive power on data that were not used for training. Leave-one-out cross-validation and K-fold cross-validation are two popular variants. However, these methods may not be appropriate for structured models that have numerous potential prediction tasks, because they do not consider the data’s structure. To address this issue, we introduce leave-group-out cross-validation, an extension of cross-validation in which the left-out groups can be adapted to different prediction tasks. We propose an automatic group construction method for leave-group-out cross-validation that estimates the model’s predictive performance when the prediction task is unspecified. Additionally, we provide an efficient approximation of leave-group-out cross-validation for latent Gaussian models, which are implemented in the R-INLA software. We demonstrate the practicality of the proposed approach by applying it to the joint modeling of survival and longitudinal data. Our example illustrates the effectiveness of this method in various real-world scenarios.
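To make the idea concrete, the following is a minimal sketch of leave-group-out cross-validation by brute-force refitting. It is an illustration under stated assumptions, not the method from the talk: it uses ordinary least squares rather than a latent Gaussian model, it refits the model for every left-out group instead of using the efficient approximation, and the helper `window_groups` (a hypothetical name) builds groups from neighbouring indices rather than by the automatic construction mentioned in the abstract. Leave-one-out cross-validation is recovered when each group contains only the point being predicted.

```python
import numpy as np


def leave_group_out_cv(X, y, groups):
    """Brute-force leave-group-out CV for ordinary least squares.

    groups[i] is the set of indices removed from the training data
    when predicting point i; it must contain i itself.
    Returns the mean squared prediction error over all points.
    """
    n = len(y)
    errors = np.empty(n)
    for i in range(n):
        keep = np.ones(n, dtype=bool)
        keep[list(groups[i])] = False  # drop the whole group, not just point i
        beta, *_ = np.linalg.lstsq(X[keep], y[keep], rcond=None)
        errors[i] = (y[i] - X[i] @ beta) ** 2
    return errors.mean()


def window_groups(n, half_width):
    """Hypothetical group construction: point i plus its neighbours in index order."""
    return [
        set(range(max(0, i - half_width), min(n, i + half_width + 1)))
        for i in range(n)
    ]


# Synthetic data (an assumption for illustration): a noisy linear trend.
rng = np.random.default_rng(0)
n = 60
t = np.linspace(0.0, 1.0, n)
X = np.column_stack([np.ones(n), t])
y = 1.0 + 2.0 * t + rng.normal(scale=0.1, size=n)

loo_mse = leave_group_out_cv(X, y, window_groups(n, 0))  # leave-one-out
lgo_mse = leave_group_out_cv(X, y, window_groups(n, 3))  # leave-group-out
print(f"LOO MSE: {loo_mse:.4f}, LGO MSE: {lgo_mse:.4f}")
```

In this sketch the choice of groups encodes the prediction task: wider windows ask how well the model predicts a point when its nearest neighbours are also unavailable, which is closer to extrapolation than to interpolation.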

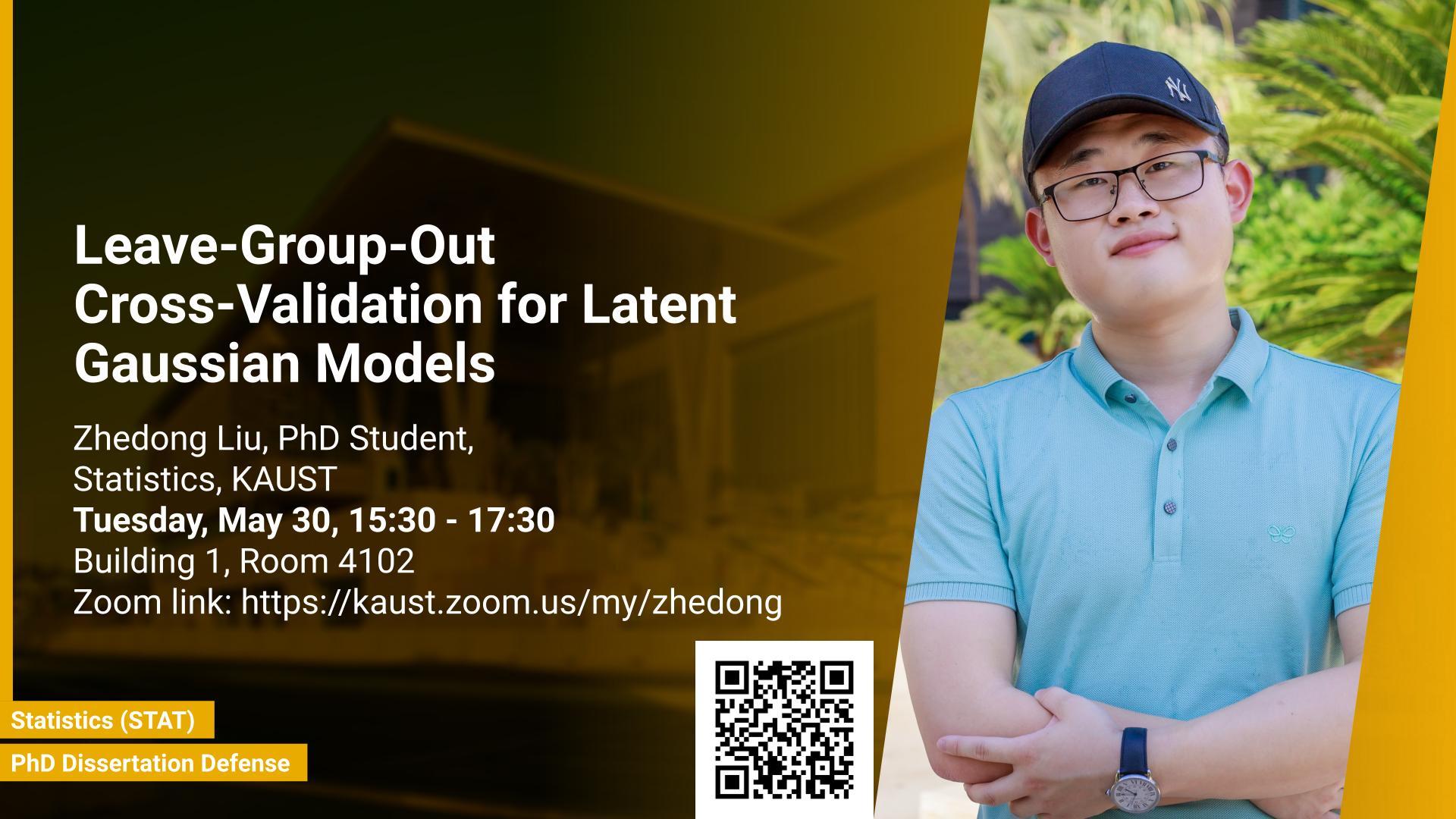

Brief Biography

Zhedong Liu is a Ph.D. student in Statistics under the supervision of Professor Haavard Rue in the Bayesian Statistics & Modeling group. He obtained a bachelor's degree in Mathematics and Economics from George Mason University (GMU) in 2017 and a second bachelor's degree in International Economics and Trade from Nanjing University of Information Science and Technology (NUIST), also in 2017.