Events

Apr 6 - Apr 12, 2025

Mar 23 - Mar 29, 2025

Mar 16 - Mar 22, 2025

Mar 9 - Mar 15, 2025

One-on-One Statistical Consulting for KAUST Researchers

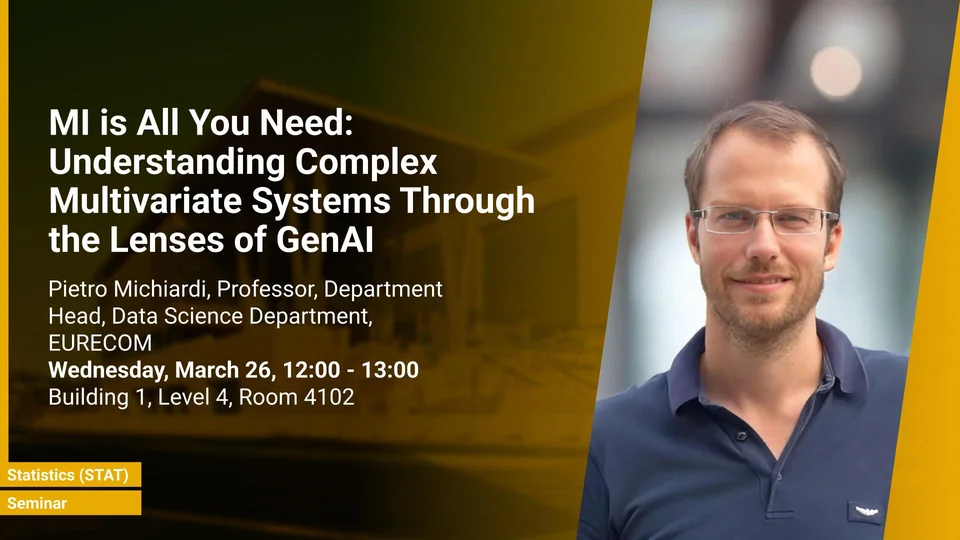

B1 L4 R4102

Mar 2 - Mar 8, 2025

Feb 23 - Mar 1, 2025

Feb 16 - Feb 22, 2025

Feb 9 - Feb 15, 2025

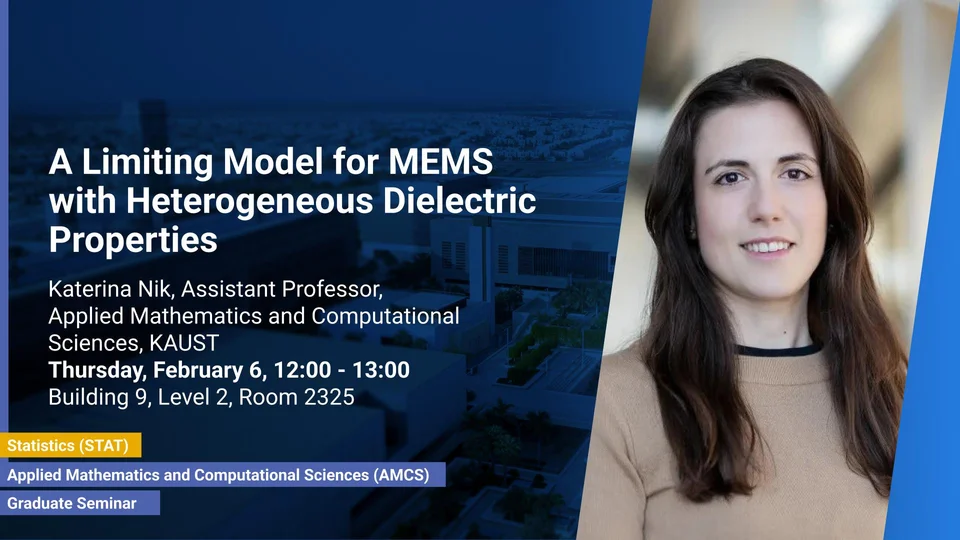

Feb 2 - Feb 8, 2025

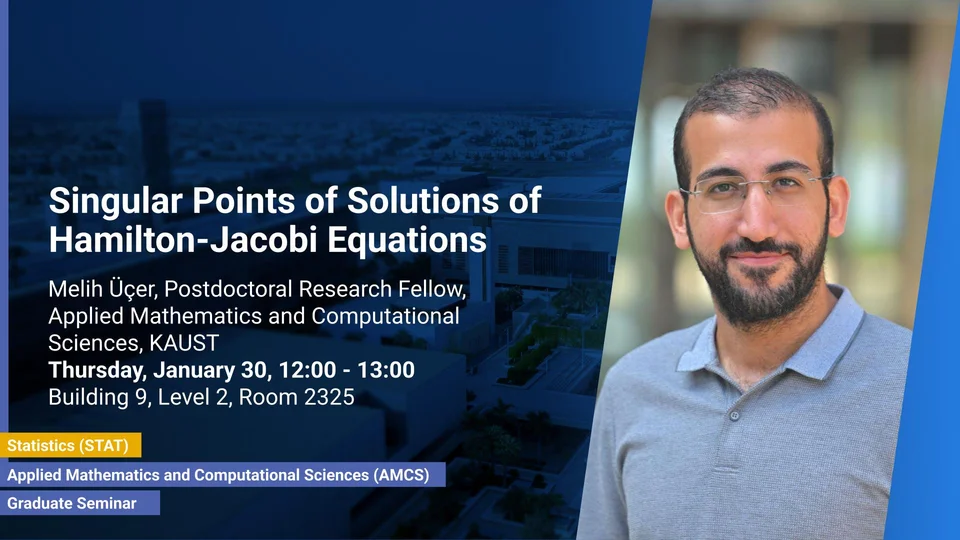

Jan 26 - Feb 1, 2025

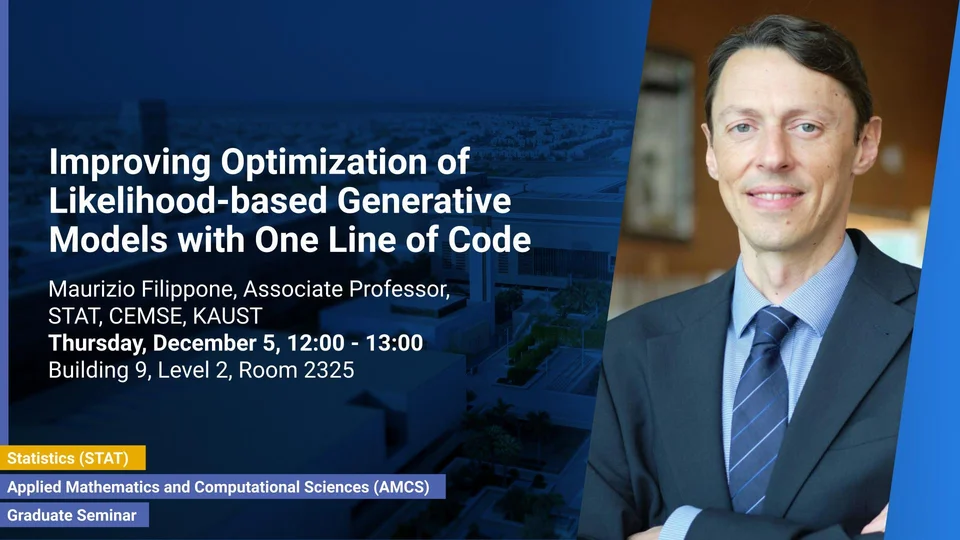

Dec 1 - Dec 7, 2024

Nov 24 - Nov 30, 2024

Nov 17 - Nov 23, 2024

The KAUST 2024 Workshop on Statistics

Auditorium between Buildings 4 and 5

May 26 - Jun 1, 2024

May 19 - May 25, 2024

May 5 - May 11, 2024

Apr 28 - May 4, 2024

Apr 21 - Apr 27, 2024

On Distribution-Free Risk Control

Michael Jordan, Professor Emeritus, University of California, Berkeley

B9 L4 R4225

An Alternative View on AI: Collaborative Learning, Statistical Incentives, and Social Welfare

Michael Jordan, Professor Emeritus, University of California, Berkeley

Auditorium between B2 and B3

Apr 14 - Apr 20, 2024

Towards Self-explainable Deep Learning Models

Prof. Michael Kampffmeyer, UiT The Arctic University of Norway

B1 L4 R4102

Can neural ODEs forecast weather?

Dr. Markus Heinonen, Academy Research Fellow, Aalto University, Finland

B1 L4 R4102

Mar 31 - Apr 6, 2024

Mar 24 - Mar 30, 2024

Mar 17 - Mar 23, 2024

Mar 3 - Mar 9, 2024

Feb 25 - Mar 2, 2024

Feb 11 - Feb 17, 2024

Feb 4 - Feb 10, 2024

Jan 28 - Feb 3, 2024

Think globally, act locally: from domain decomposition to nonlinear model order reduction

Prof. Charbel Farhat

KAUST Auditorium R2030

Jan 21 - Jan 27, 2024

Dec 3 - Dec 9, 2023

Nov 26 - Dec 2, 2023

Nov 19 - Nov 25, 2023

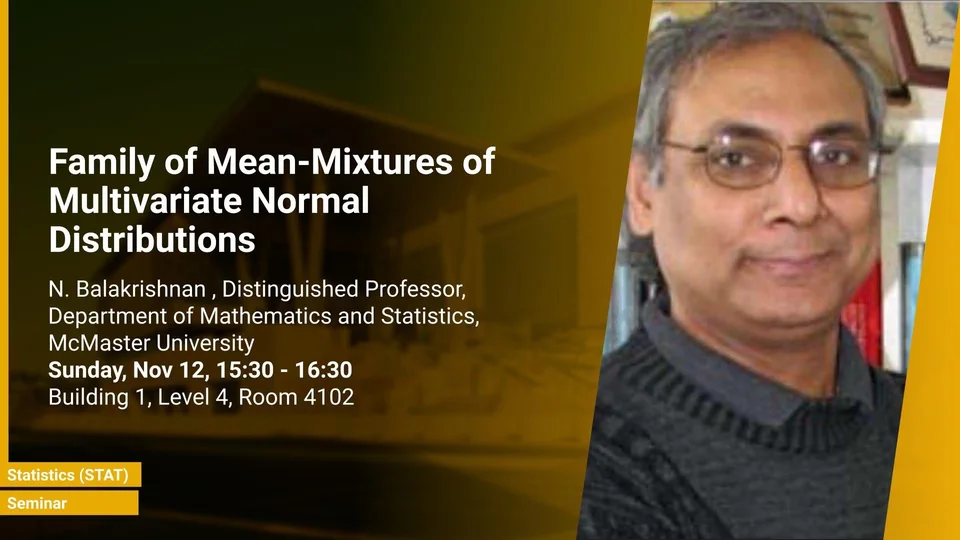

Nov 12 - Nov 18, 2023

Family of Mean-Mixtures of Multivariate Normal Distributions

Prof. Narayanaswamy Balakrishnan, Department of Mathematics and Statistics, McMaster University

B1 L4 R4102

Nov 5 - Nov 11, 2023

Oct 29 - Nov 4, 2023

Oct 22 - Oct 28, 2023

Oct 15 - Oct 21, 2023

Latent Gaussian Spatial Modelling with Change of Support

Erick Chacon Montalvan, Postdoctoral Fellow, Statistics Geohealth Group, KAUST

B9 L2 H2