Abstract

In this talk, I will first give a convergence analysis of the gradient descent (GD) method for training neural networks by relating neural networks to the finite element method. I will then present some acceleration techniques for the GD method and also give some alternative training algorithms.

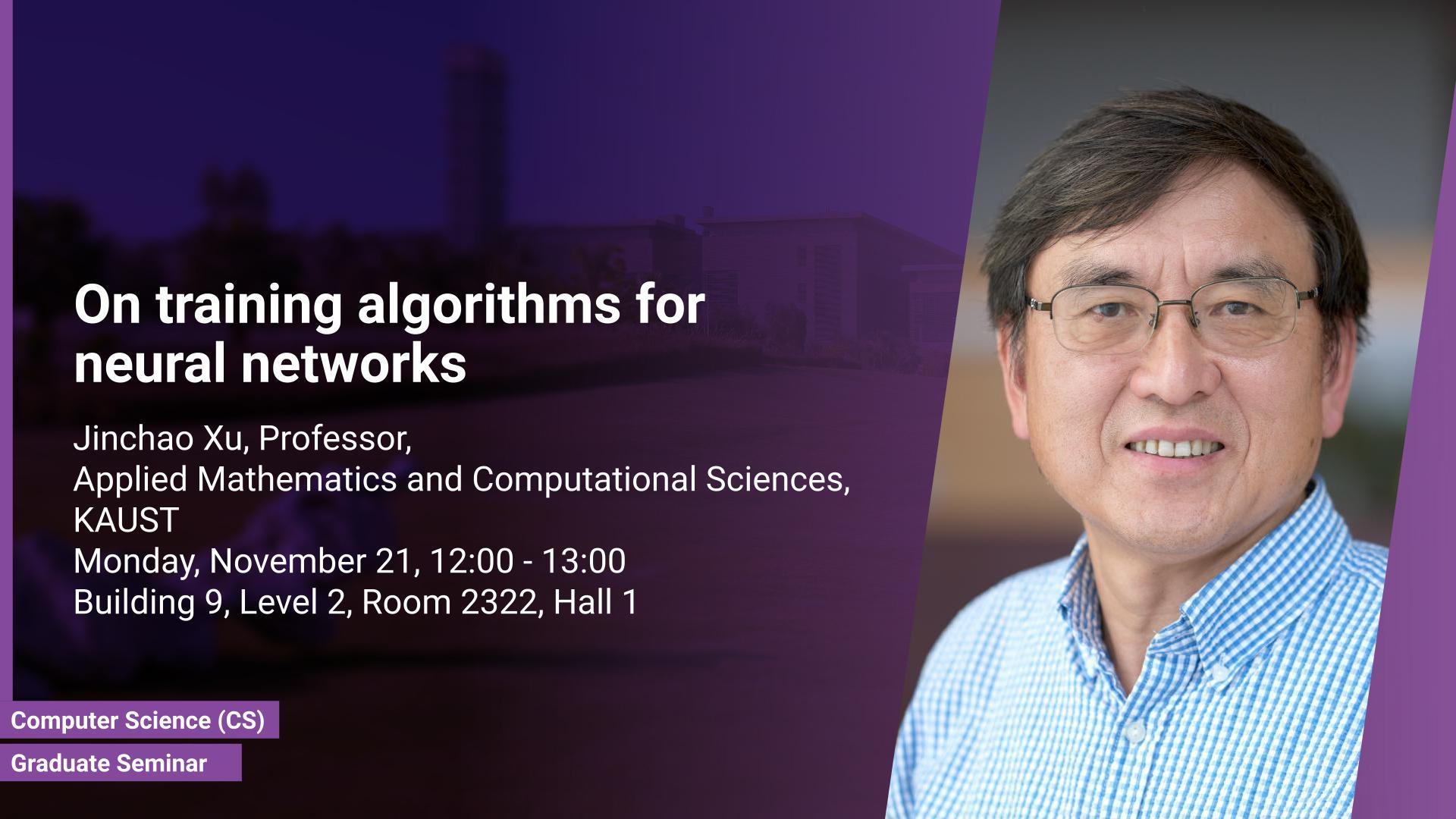

Brief Biography

Jinchao Xu was the Verne M. Willaman Professor of Mathematics and Director of the Center for Computational Mathematics and Applications at the Pennsylvania State University, and is currently Professor of Applied Mathematics and Computational Sciences, CEMSE, KAUST. Xu’s research is on numerical methods for partial differential equations that arise from modeling scientific and engineering problems. He has made contributions to the theoretical analysis, algorithmic development, and practical application of multilevel methods. He is perhaps best known for the Bramble-Pasciak-Xu (BPX) preconditioner and the Hiptmair-Xu preconditioner. His other research interests include the mathematical analysis, modeling, and applications of deep neural networks. He was an invited speaker at the International Congress on Industrial and Applied Mathematics in 2007 as well as at the International Congress of Mathematicians in 2010. He is a Fellow of the Society for Industrial and Applied Mathematics (SIAM), the American Mathematical Society (AMS), the American Association for the Advancement of Science (AAAS), and the European Academy of Sciences.