Abstract

A multivariate dataset consists of n cases in d dimensions, and is often stored in an n by d data matrix. It is well known that real data may contain outliers. Depending on the situation, outliers may be (a) undesirable errors which can adversely affect the data analysis, or (b) valuable nuggets of unexpected information. In statistics and data analysis the word outlier usually refers to a row of the data matrix, and the methods to detect such outliers work only when at least half the rows are clean. But often many rows have a few contaminated cell values, which may not be visible when looking at each variable (column) separately.

We describe a method to detect deviating data cells in a multivariate sample which takes the correlations between the variables into account. It has no restriction on the number of clean rows, and can deal with high dimensions. It also provides predicted values for the outlying cells and imputes missing values at the same time.
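To make the idea concrete, here is a minimal toy sketch in Python. It is not the method presented in the talk. It assumes, purely for illustration, that all variables are strongly and positively correlated, so that after robust standardization each cell can be predicted by the median of the other cells in its row. The function names, the simulated data, and the cutoff of 4 are all hypothetical choices.

```python
import random
from statistics import median

def robust_z(column):
    """Standardize one column with its median and MAD (scaled for normal data)."""
    med = median(column)
    mad = 1.4826 * median(abs(v - med) for v in column)
    return [(v - med) / mad for v in column]

def standardize(X):
    """Robustly standardize every column of a row-major matrix."""
    d = len(X[0])
    cols = [robust_z([row[j] for row in X]) for j in range(d)]
    return [list(row) for row in zip(*cols)]

def columnwise_flags(Z, cutoff):
    """Flag cells that are extreme within their own column only."""
    return [[abs(z) > cutoff for z in row] for row in Z]

def cellwise_flags(Z, cutoff):
    """Flag cells that disagree with the other cells in the same row.

    Toy assumption: columns are strongly positively correlated, so the
    median of the other standardized cells in a row predicts each cell.
    """
    d = len(Z[0])
    residuals = [
        [row[j] - median(row[k] for k in range(d) if k != j) for j in range(d)]
        for row in Z
    ]
    return columnwise_flags(standardize(residuals), cutoff)

# Simulated data: four variables driven by one common factor plus small noise.
random.seed(0)
n, d = 200, 4
X = []
for _ in range(n):
    factor = random.gauss(0, 1)
    X.append([factor + random.gauss(0, 0.1) for _ in range(d)])

# Contaminate one cell. The value -1.0 is unremarkable for its column,
# but it contradicts the other three cells in the same row.
X[5] = [1.0, 1.0, -1.0, 1.0]

CUTOFF = 4.0  # arbitrary illustrative threshold
Z = standardize(X)
col_flags = columnwise_flags(Z, CUTOFF)
cell_flags = cellwise_flags(Z, CUTOFF)

print("columnwise flag for cell (5, 2):", col_flags[5][2])
print("cellwise flag for cell (5, 2):  ", cell_flags[5][2])
```

In this toy setting the contaminated cell is missed by the columnwise check because its value is ordinary for its column, while the check that uses the other variables in the row picks it up. Real data rarely satisfy the equal-correlation assumption made here, which is why a practical method has to estimate the relations between variables robustly.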

We illustrate the method on several real data sets, where it uncovers more structure than found by purely columnwise methods or purely rowwise methods. The proposed method can help to diagnose why a certain row is outlying, e.g. in process control. It also serves as an initial step for estimating multivariate location and scatter matrices, and for cellwise robust principal component analysis.

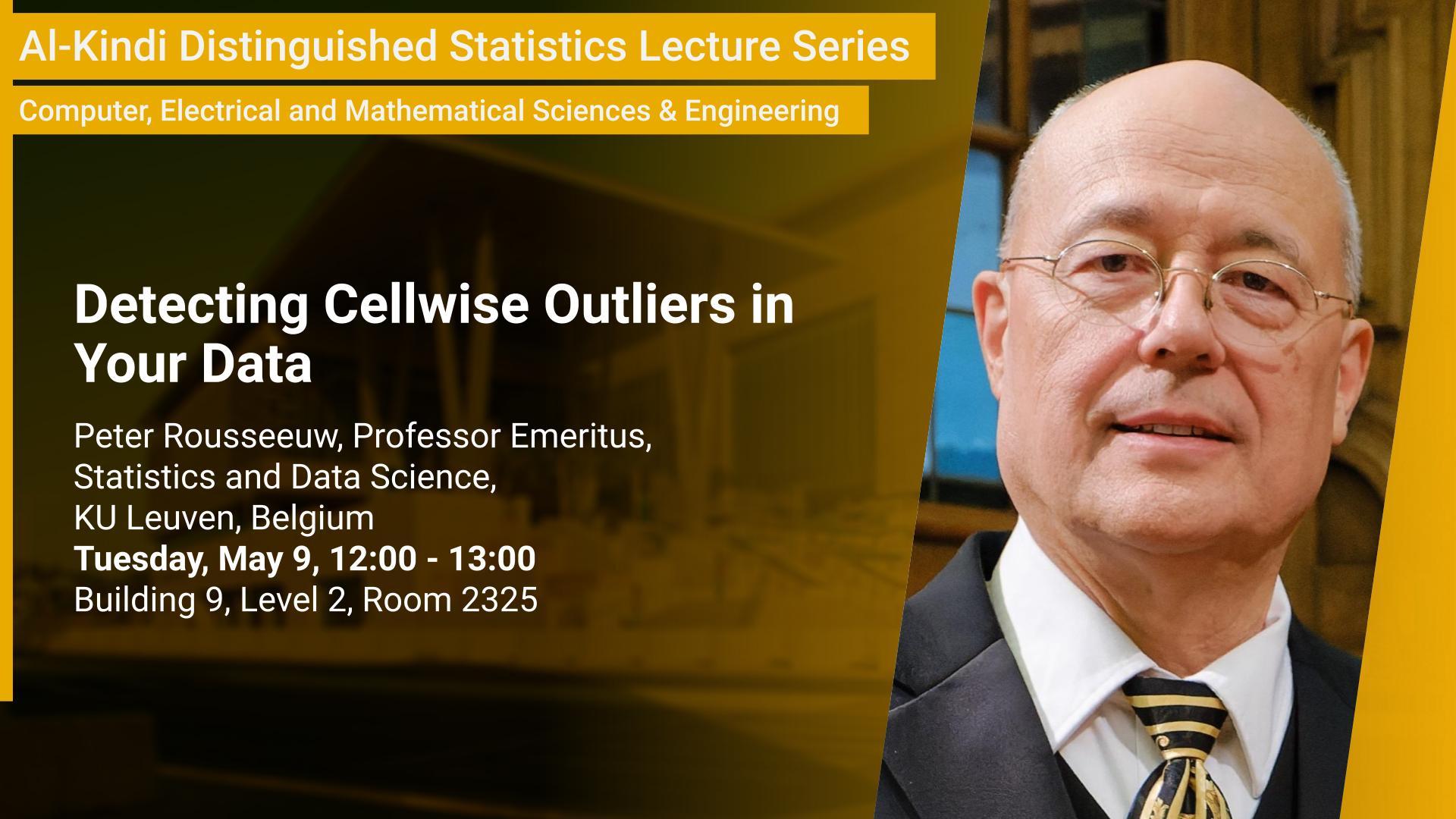

Brief Biography

Peter Rousseeuw is a Belgian statistician who has mainly contributed to robust methodology, outlier detection, and cluster analysis. He obtained his PhD following research at ETH Zurich, which led to a book on influence functions. Later he was a professor at the Delft University of Technology and at the University of Antwerp, Belgium. Afterward he spent over a decade at a financial company in New York. Currently he is professor emeritus at KU Leuven, Belgium. Among the techniques he introduced are the Least Trimmed Squares method and S-estimators for robust regression, as well as the Minimum Covariance Determinant method for covariance matrices. His 1984 paper on robust regression was included in Breakthroughs in Statistics, edited by Kotz and Johnson, which reprinted 60 papers from 1850 to 1990. With L. Kaufman he coined the word medoid when proposing the k-medoids method for cluster analysis. His silhouette display for clustering is often used to select the number of clusters. Other works are the scale estimator Qn (with C. Croux), robust principal component methods, regression depth, the bagplot (with J. Tukey), and methods for dealing with cellwise outliers. His work has been cited over 100,000 times.