Reproduced with permission from reference 1 © 2016 American Statistical Association.

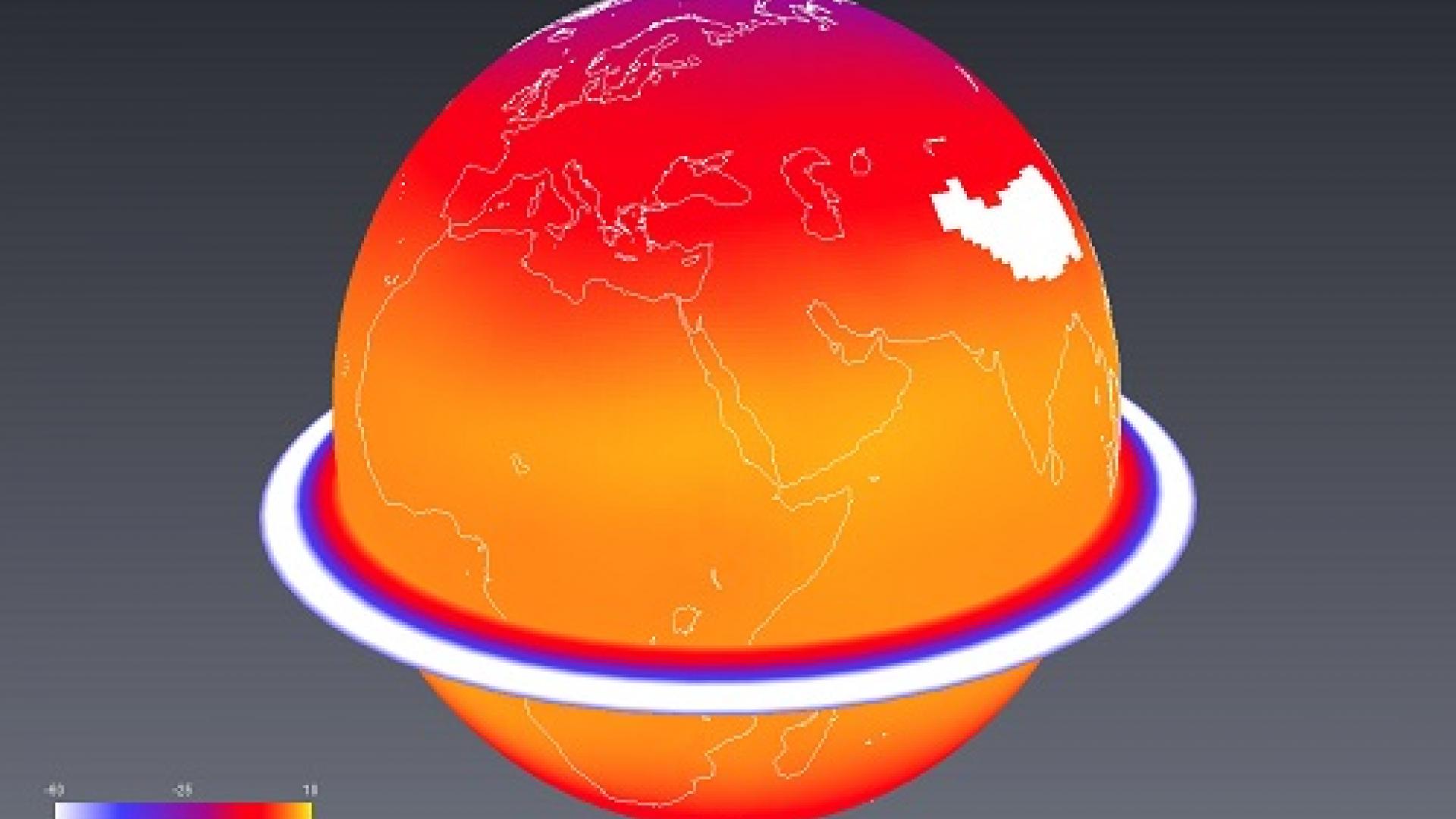

By accounting for the specific statistical structure of global climate simulation data, the University’s Marc Genton and a U.K. researcher have developed a novel and highly effective data compression scheme for large-scale climate simulations. The method promises to significantly reduce data storage requirements and expand the capacity for climate research.

The ever-increasing power of supercomputers has allowed scientists to become more ambitious with their simulations. In fields such as climate science, researchers are embarking on global-scale simulations at spatial resolutions that would have been unthinkable just a few years ago. Although this has expanded research possibilities, it has also put a huge strain on data storage: a single large-scale simulation can produce many terabytes of data, all of which must be stored and kept accessible to be useful.

“The volume of data produced by these simulations is becoming so large that it is not possible to store it in computational facilities without incurring high costs,” explained Genton. “We see this not just in climate science but also in astronomy, where new telescopes are capable of capturing large images at unprecedented resolution, and in engineering applications, where computer simulations are performed at very high resolution in both space and time.”
